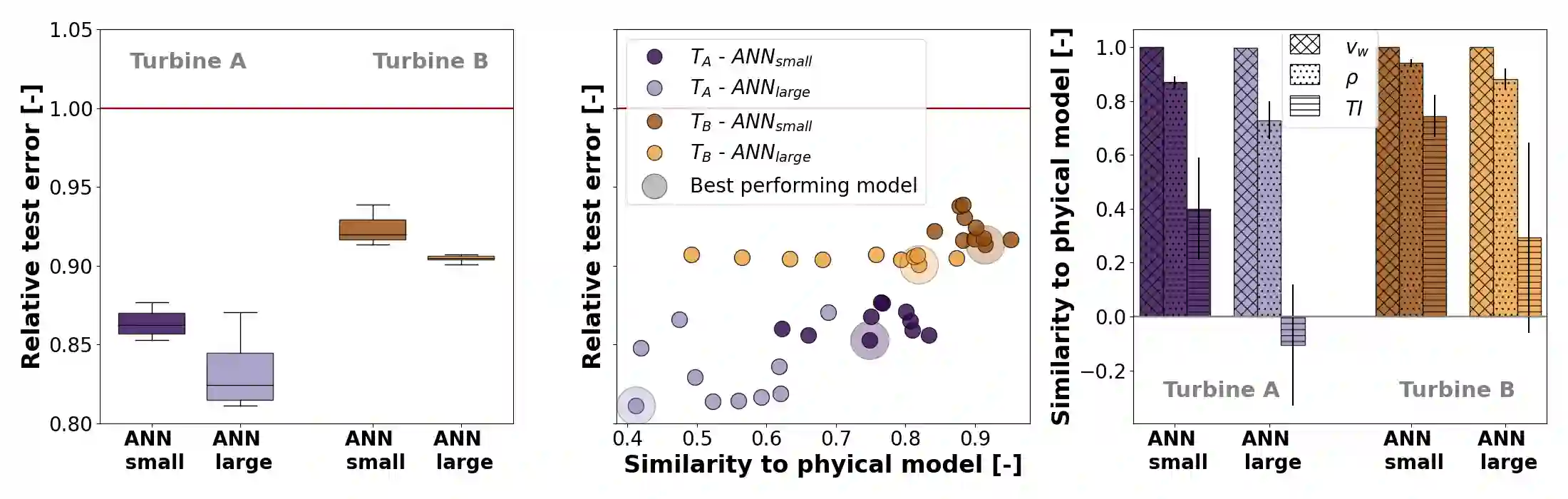

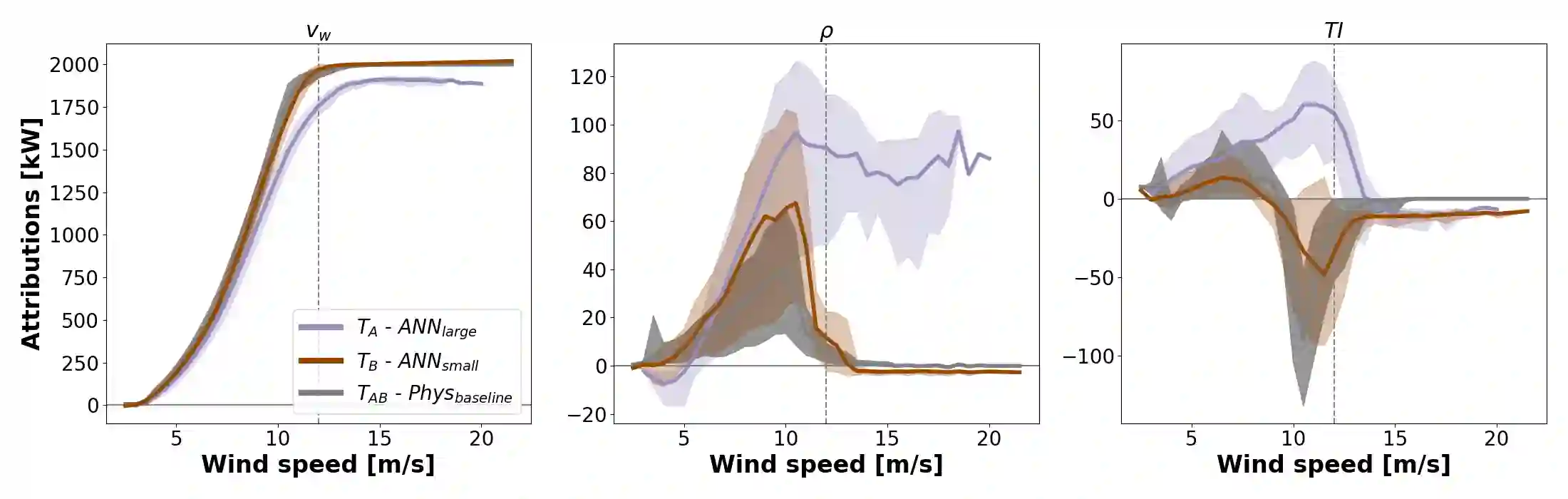

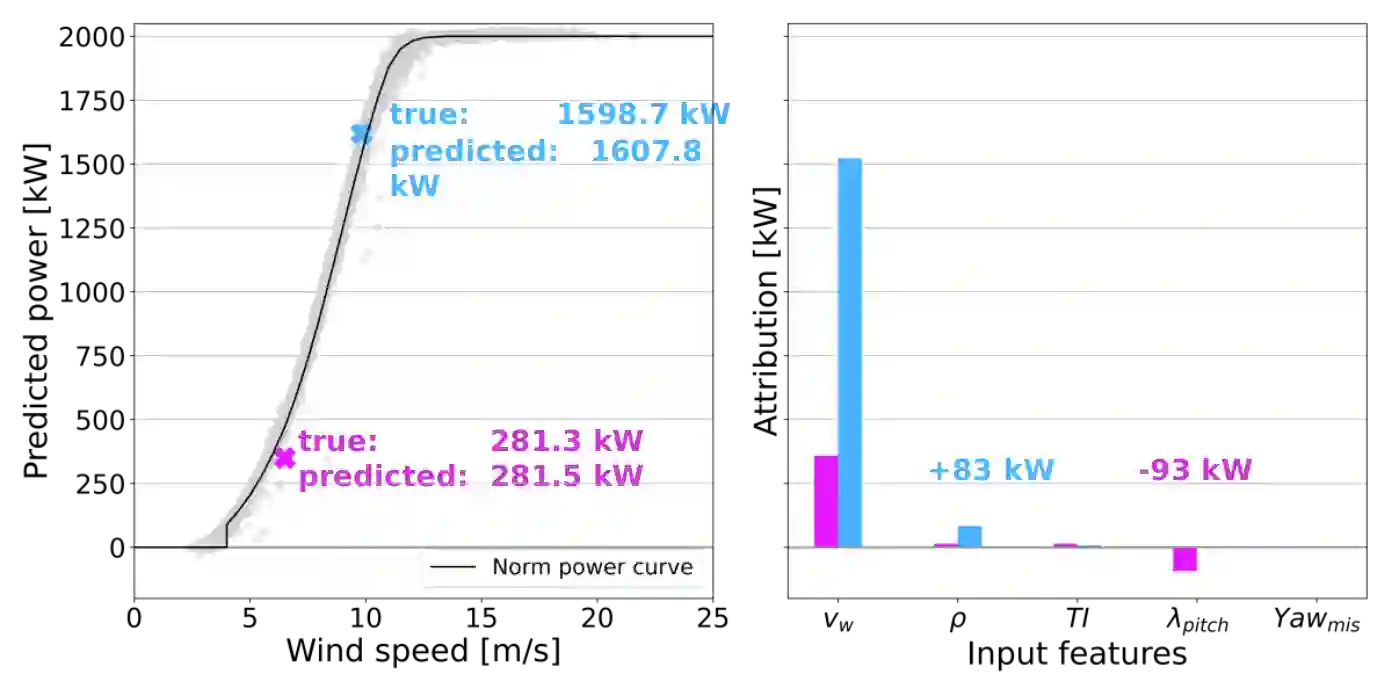

Accurate wind turbine power curve models, which translate ambient conditions into turbine power output, are crucial for wind energy to scale and fulfil its proposed role in the global energy transition. Machine learning methods, in particular deep neural networks (DNNs), have shown significant advantages over parametric, physics-informed power curve modelling approaches. Nevertheless, they are often criticised as opaque black boxes with no physical understanding of the system they model, which hinders their application in practice. We apply Shapley values, a popular explainable artificial intelligence (XAI) method, to uncover and validate, for the first time, the strategies learned by DNNs from operational wind turbine data. Our findings show that the trend towards ever larger model architectures, driven by the focus on test-set performance, can result in physically implausible model strategies, similar to the Clever Hans effect observed in classification. We therefore call for a more prominent role of XAI methods in model selection, and additionally offer a practical strategy for using model explanations in wind turbine condition monitoring.
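To make the attribution idea concrete, the following is a minimal sketch of exact Shapley values for a two-input power curve model. It is an illustration under stated assumptions, not the method used in the paper: `toy_power_curve` is a hypothetical closed-form stand-in for a trained DNN, the rotor area, power coefficient, and rated power are made-up constants, and "absent" features are replaced by a fixed baseline input (one common convention among several).

```python
from itertools import combinations
from math import factorial


def toy_power_curve(x):
    """Hypothetical stand-in for a trained model (NOT the paper's DNN).

    x = [wind speed in m/s, air density in kg/m^3]; returns power in kW.
    Rotor area, power coefficient and rated power are illustrative values.
    """
    wind_speed, air_density = x
    rotor_area, power_coeff, rated_kw = 5000.0, 0.4, 2000.0
    raw_kw = 0.5 * air_density * rotor_area * power_coeff * wind_speed ** 3 / 1000.0
    return min(raw_kw, rated_kw)  # output is capped at rated power


def shapley_values(model, x, baseline):
    """Exact Shapley values by enumerating all feature coalitions.

    Features outside a coalition are set to their baseline value.
    Cost grows as 2^n, so this is only practical for a few features.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # marginal contribution of feature i when added to coalition s
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


x = [10.0, 1.25]        # operating point to explain (assumed values)
baseline = [6.0, 1.20]  # reference conditions (assumed values)
phi = shapley_values(toy_power_curve, x, baseline)
print("wind speed attribution (kW):", phi[0])
print("air density attribution (kW):", phi[1])
```

By construction the attributions sum to the difference between the model output at `x` and at `baseline`. A physically plausible strategy in this toy setting attributes most of that difference to wind speed and a small positive share to air density; checking attributions against such expectations is the kind of validation the abstract argues for.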
