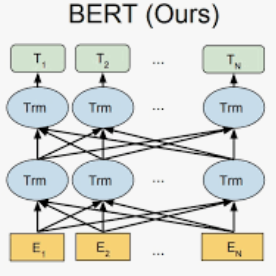

Recently, knowledge distillation (KD) has shown great success in BERT compression. Instead of learning only from the teacher's soft labels as in conventional KD, researchers have found that the rich information contained in the hidden layers of BERT is conducive to the student's performance. To better exploit this hidden knowledge, a common practice is to force the student to deeply mimic the teacher's hidden states for all tokens in a layer-wise manner. In this paper, however, we observe that although distilling the teacher's hidden state knowledge (HSK) is helpful, the performance gain (marginal utility) diminishes quickly as more HSK is distilled. To understand this effect, we conduct a series of analyses. Specifically, we divide the HSK of BERT into three dimensions, namely depth, length and width. We first investigate a variety of strategies to extract crucial knowledge along each single dimension and then jointly compress the three dimensions. In this way, we show that 1) the student's performance can be improved by extracting and distilling the crucial HSK, and 2) using a tiny fraction of the HSK can achieve the same performance as extensive HSK distillation. Based on the second finding, we further propose an efficient KD paradigm to compress BERT, which does not require loading the teacher while training the student. For two kinds of student models and computing devices, the proposed KD paradigm yields a training speedup of 2.7x to 3.4x.
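To make the three HSK dimensions concrete, the following is a minimal sketch, not the paper's implementation. It treats the teacher's hidden states as a nested list indexed as `[layer][token][hidden_dim]` and compresses them along depth (which layers), length (how many tokens) and width (how many feature dimensions). The helper names `compress_hsk`, `kept_fraction` and `mse`, and the naive selection rules (a hand-picked layer list, the first tokens, the first dimensions), are illustrative assumptions; the abstract does not specify which strategies are used.

```python
from typing import List, Sequence

# Hidden states indexed as [layer][token][hidden_dim] (assumed layout).
HSK = List[List[List[float]]]


def compress_hsk(hsk: HSK, layers: Sequence[int], num_tokens: int, width: int) -> HSK:
    """Keep only the chosen layers (depth), the first `num_tokens` tokens
    (length) and the first `width` feature dimensions (width).
    These selection rules are placeholders, not the paper's strategies."""
    return [[tok[:width] for tok in hsk[l][:num_tokens]] for l in layers]


def kept_fraction(full: HSK, compressed: HSK) -> float:
    """Fraction of scalar hidden-state values that survive compression."""
    def size(t: HSK) -> int:
        return sum(len(tok) for layer in t for tok in layer)
    return size(compressed) / size(full)


def mse(a: HSK, b: HSK) -> float:
    """Mean squared error between two equally shaped hidden-state sets."""
    diffs = [
        (x - y) ** 2
        for la, lb in zip(a, b)
        for ta, tb in zip(la, lb)
        for x, y in zip(ta, tb)
    ]
    return sum(diffs) / len(diffs)


# Toy teacher: 4 layers, 6 tokens, 8 hidden dimensions.
teacher = [[[float(l + t + d) for d in range(8)] for t in range(6)] for l in range(4)]

# Compress all three dimensions jointly: 2 layers, 3 tokens, 4 dimensions.
target = compress_hsk(teacher, layers=[1, 3], num_tokens=3, width=4)
fraction = kept_fraction(teacher, target)

# Stand-in for student hidden states that already match the target's shape.
student = [[[v + 0.5 for v in tok] for tok in layer] for layer in target]
loss = mse(student, target)

print(f"kept fraction of HSK: {fraction}, distillation loss: {loss}")
```

Because the compressed target is small and fixed, it could in principle be computed once and stored, which is one way to read the claim that the teacher need not be loaded during student training. In a real setup the student's hidden size usually differs from the teacher's, so a learned projection would be needed before comparing the two; the toy student above sidesteps that by construction.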
