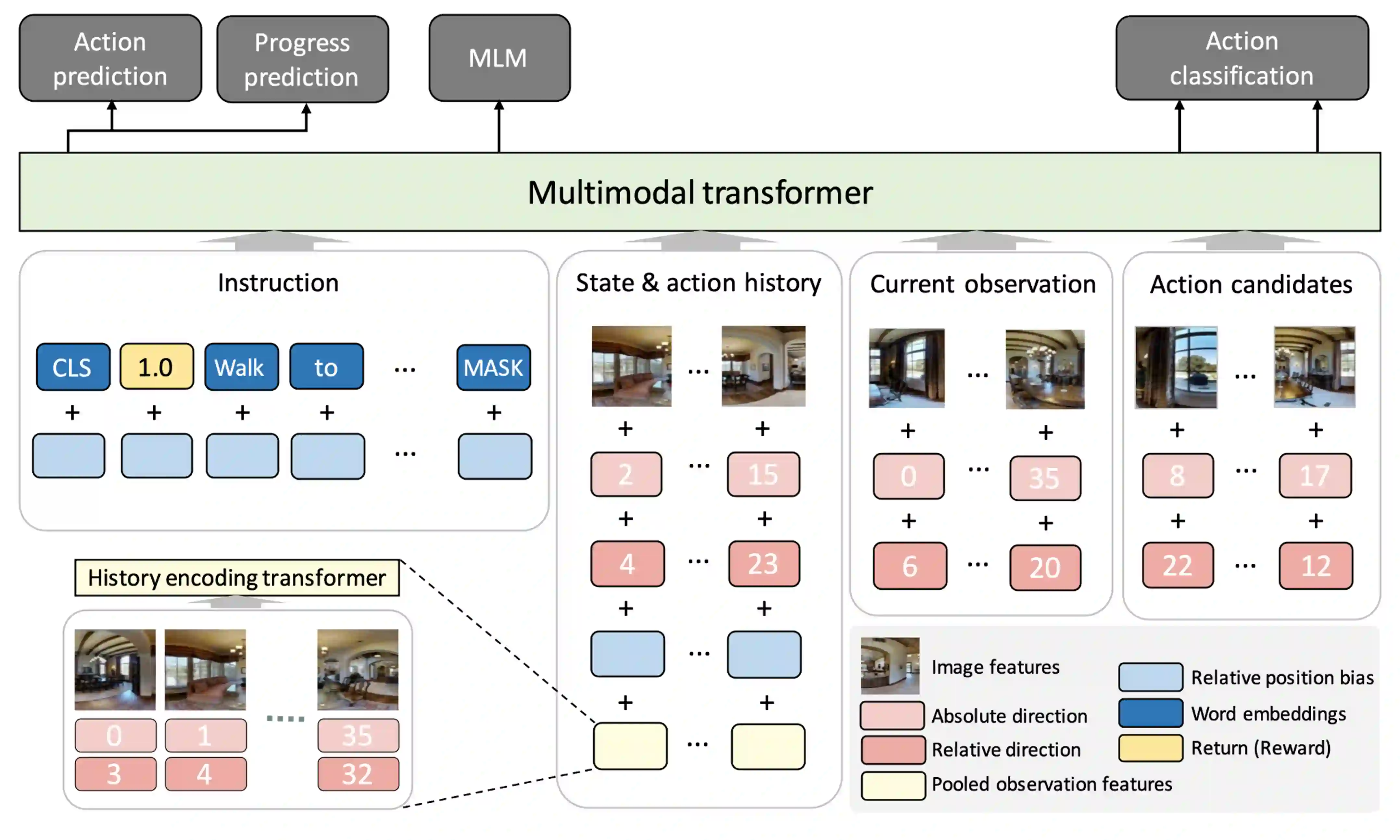

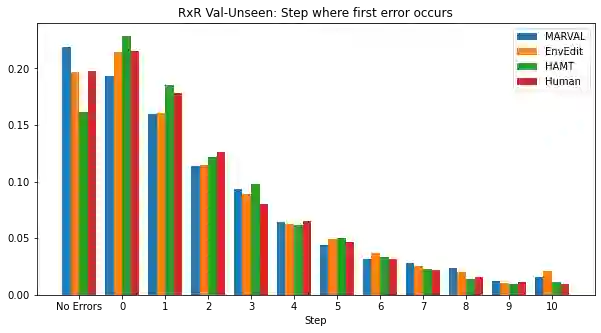

Recent studies in Vision-and-Language Navigation (VLN) train RL agents to execute natural-language navigation instructions in photorealistic environments, as a step towards intelligent agents or robots that can follow human instructions. However, given the scarcity of human instruction data and limited diversity in the training environments, these agents still struggle with complex language grounding and spatial language understanding. Pre-training on large text and image-text datasets from the web has been extensively explored but the improvements are limited. To address the scarcity of in-domain instruction data, we investigate large-scale augmentation with synthetic instructions. We take 500+ indoor environments captured in densely-sampled 360 deg panoramas, construct navigation trajectories through these panoramas, and generate a visually-grounded instruction for each trajectory using Marky (Wang et al., 2022), a high-quality multilingual navigation instruction generator. To further increase the variability of the trajectories, we also synthesize image observations from novel viewpoints using an image-to-image GAN. The resulting dataset of 4.2M instruction-trajectory pairs is two orders of magnitude larger than existing human-annotated datasets, and contains a wider variety of environments and viewpoints. To efficiently leverage data at this scale, we train a transformer agent with imitation learning for over 700M steps of experience. On the challenging Room-across-Room dataset, our approach outperforms all existing RL agents, improving the state-of-the-art NDTW from 71.1 to 79.1 in seen environments, and from 64.6 to 66.8 in unseen test environments. Our work points to a new path to improving instruction-following agents, emphasizing large-scale imitation learning and the development of synthetic instruction generation capabilities.

翻译:64年《视觉和语言导航》(VLN)最近对《视觉和语言导航(VLN)》进行了研究,培训RL代理机构,以便在光现实环境中执行自然语言导航指令,作为向智能剂或机器人迈出的一步,可以遵循人类指令的智能剂或机器人;然而,由于人类教学数据稀缺,培训环境的多样性有限,这些代理机构仍在与复杂的语言定位和空间语言理解中挣扎;对网上的大型文本和图像文本数据集进行了广泛探讨,但改进程度有限;为了解决内部教学数据稀缺的问题,我们用合成指令来调查大规模扩增。 我们从高密度的360分光环境中捕获的500+室内环境,通过这些全色图像仪构建导航轨迹,并用马基(Wang等人,2022年)和高品质的多语种导航指令生成器为每一轨迹提供直观的教学指导。为了进一步提高轨迹,我们还利用从图像到模拟GAN的所有新视角将图像显示图像的图像和图像转换方式的图像放大观测结果。