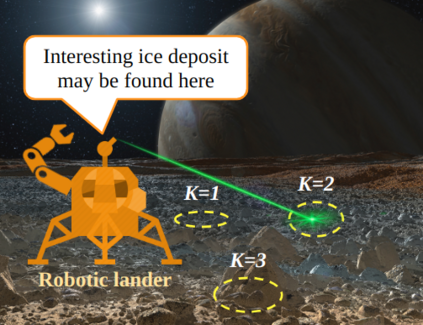

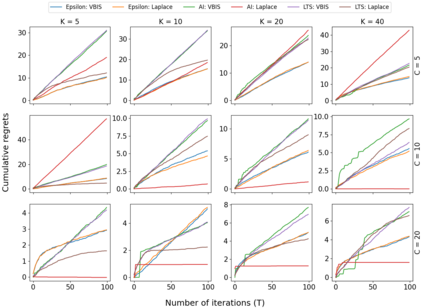

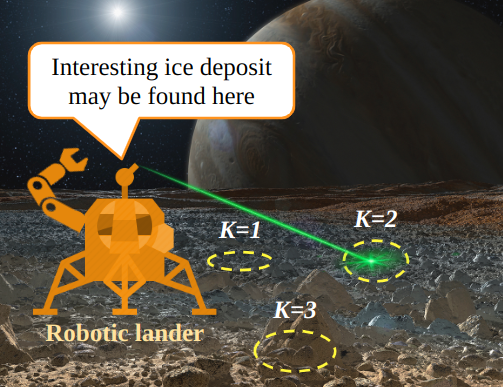

In autonomous robotic decision-making under uncertainty, the tradeoff between exploiting and exploring the available options must be considered. If secondary information associated with the options can be utilized, such decision-making problems can often be formulated as contextual multi-armed bandits (CMABs). In this study, we apply active inference, which has been actively studied in neuroscience in recent years, as an alternative action selection strategy for CMABs. Unlike conventional action selection strategies, active inference makes it possible to rigorously evaluate the uncertainty of each option when calculating the expected free energy (EFE) associated with the decision agent's probabilistic model, as derived from the free-energy principle. We specifically address the case where a categorical observation likelihood function is used, for which EFE values are analytically intractable. We introduce new approximation methods for computing the EFE based on variational and Laplace approximations. Extensive simulation results demonstrate that, compared to other strategies, active inference generally requires far fewer iterations to identify optimal options and generally achieves superior cumulative regret, at relatively low extra computational cost.
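As a point of reference for the cumulative regret metric mentioned above, the following is a minimal sketch of how it is commonly computed in a bandit simulation: at each round, the regret is the gap between the best arm's expected reward and that of the chosen arm, and these gaps are summed over rounds. The function name `cumulative_regret` and the toy numbers are illustrative assumptions, not taken from the study, and the sketch does not implement active inference or the EFE approximations.

```python
from itertools import accumulate


def cumulative_regret(expected_rewards, chosen_arms):
    """Return the running total of regret over a sequence of rounds.

    expected_rewards: list of rows, one per round; each row holds the true
        expected reward of every arm in that round (rows may differ because
        the context changes from round to round).
    chosen_arms: list with the index of the arm selected in each round.
    (Both names are hypothetical, chosen for this illustration only.)
    """
    # Per-round regret: best achievable expected reward minus the chosen one.
    per_round = [
        max(row) - row[arm]
        for row, arm in zip(expected_rewards, chosen_arms)
    ]
    # Running sum gives the cumulative regret curve.
    return list(accumulate(per_round))


# Toy example with two arms and three rounds (made-up values).
expected = [[0.2, 0.8], [0.6, 0.4], [0.1, 0.9]]
choices = [0, 0, 1]  # suboptimal in round 1, optimal afterwards
regret_curve = cumulative_regret(expected, choices)
```

A strategy that identifies the optimal option quickly produces a regret curve that flattens early, which is the behaviour the abstract reports for active inference relative to the other strategies.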
