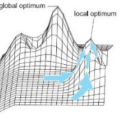

We introduce G-PFSO, a new algorithm for expected log-likelihood maximization in situations where the objective function is multi-modal and/or has saddle points. The key idea underpinning G-PFSO is to define a sequence of probability distributions that (a) is shown to concentrate on the target parameter value and (b) can be efficiently estimated by means of a standard particle filter algorithm. These distributions depend on a learning rate: the faster the learning rate, the more quickly they concentrate on the desired parameter value, but the weaker the ability of G-PFSO to escape from a local optimum of the objective function. To reconcile the ability to escape from a local optimum with a fast convergence rate, the proposed estimator exploits the acceleration property of averaging, which is well known in the stochastic gradient literature. Based on challenging estimation problems, our numerical experiments suggest that the estimator introduced in this paper converges at the optimal rate, and they illustrate the practical usefulness of G-PFSO for parameter inference in large datasets. Although the focus of this work is expected log-likelihood maximization, the proposed approach and its theory apply more generally to the optimization of a function defined through an expectation.
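
To make the two ingredients described above more concrete (a particle approximation driven by a learning rate, followed by averaging), here is a toy sketch in Python. It is not the G-PFSO algorithm itself, whose details the abstract does not give. Everything specific in it is an assumption made for illustration: the Gaussian location model, the Gaussian jitter with scale `h_t = t ** (-alpha)`, multinomial resampling, the averaging of the per-step estimates over the second half of the run, and the hypothetical function name `toy_particle_optimizer`.

```python
import numpy as np

def toy_particle_optimizer(y, n_particles=500, alpha=0.5, seed=0):
    """Toy particle scheme for a N(theta, 1) location model (illustrative only)."""
    rng = np.random.default_rng(seed)
    # Initial particles spread widely over an assumed search interval.
    theta = rng.uniform(-10.0, 10.0, size=n_particles)
    estimates = np.empty(len(y))
    for t, y_t in enumerate(y, start=1):
        # Jitter the particles; the noise scale h_t plays the role of the
        # learning rate (assumption: polynomial decay t^(-alpha)).
        h_t = t ** (-alpha)
        theta = theta + h_t * rng.standard_normal(n_particles)
        # Weight each particle by the Gaussian likelihood of the new observation.
        log_w = -0.5 * (y_t - theta) ** 2
        w = np.exp(log_w - log_w.max())  # subtract the max for numerical stability
        w /= w.sum()
        # Weighted particle mean as the current point estimate.
        estimates[t - 1] = np.sum(w * theta)
        # Multinomial resampling according to the normalized weights.
        theta = rng.choice(theta, size=n_particles, p=w)
    # Average the per-step estimates over the second half of the run.
    averaged = estimates[len(y) // 2:].mean()
    return estimates[-1], averaged

rng = np.random.default_rng(1)
true_theta = 2.0
y = true_theta + rng.standard_normal(2000)
last, averaged = toy_particle_optimizer(y)
print(f"last estimate: {last:.3f}, averaged estimate: {averaged:.3f}")
```

In this sketch a larger `alpha` shrinks the jitter faster, so the particle cloud concentrates sooner but explores less, which mirrors the trade-off described in the abstract. The averaged estimate is intended to smooth out the fluctuations of the individual per-step estimates.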
