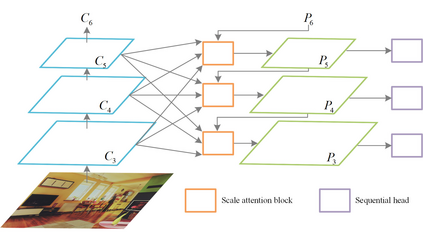

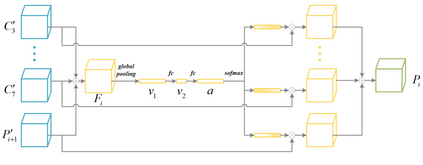

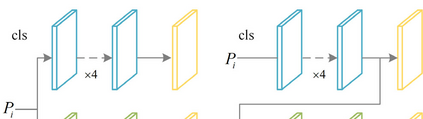

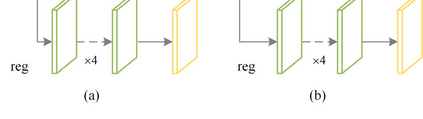

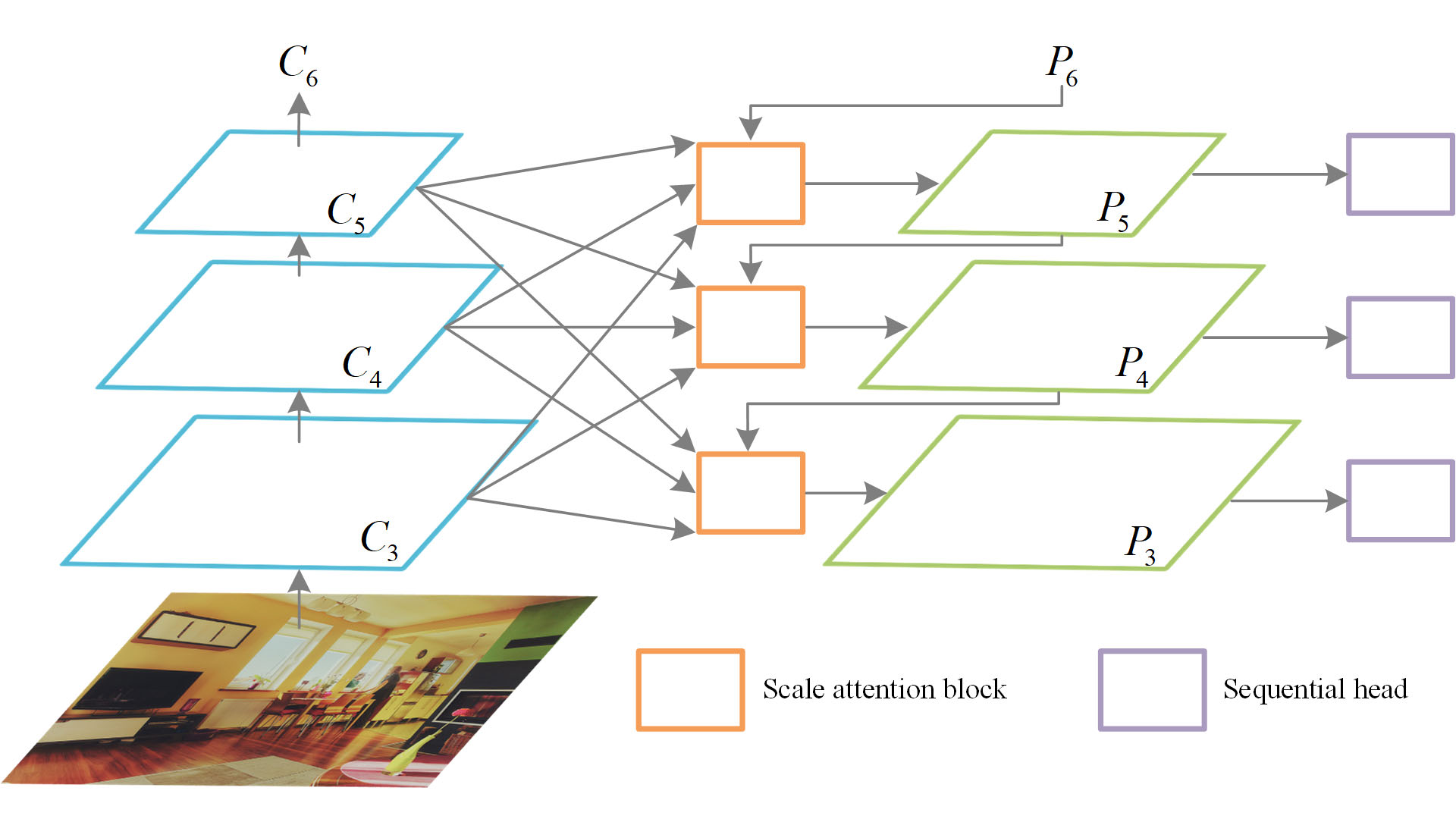

Modern object detection networks pursue higher precision on general object detection datasets, but their computational burden grows along with the improvement in precision. However, both inference time and precision are critical for object detection systems that must run in real time, so it is necessary to study ways of improving precision without extra computational cost. In this work, we propose two modules that improve detection precision at zero cost, focusing on the FPN and the detection head of general object detection networks. The first, called SA-FPN, employs a scale attention mechanism to efficiently fuse multi-level feature maps with fewer parameters. The second, called Seq-HEAD, exploits the correlation between the classification head and the regression head by replacing the widely used parallel head with a sequential head. To evaluate their effectiveness, we apply the two modules to several modern state-of-the-art object detection networks, both anchor-based and anchor-free. Experimental results on the COCO dataset show that networks equipped with the two modules surpass the original networks by 1.1 AP and 0.8 AP at zero cost for anchor-based and anchor-free networks, respectively. Code will be available at https://git.io/JTFGl.
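The abstract does not specify how SA-FPN computes its attention weights or which branch Seq-HEAD places first, so the following is only a minimal pure-Python sketch under stated assumptions, not the authors' code. It assumes that feature maps are flat lists already resized to a common resolution, that each pyramid level receives one scalar score from its global average scaled by a hypothetical learnable weight (`level_weights`), and that in the sequential head the regression tower reads the classification tower's output. All function names here are hypothetical.

```python
import math
from typing import List, Tuple

# Hypothetical representation: a feature map is a flat list of floats
# (C*H*W values), assumed already resized to a common resolution.
FeatureMap = List[float]
Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scale_attention_fuse(levels: List[FeatureMap], level_weights: List[float]) -> FeatureMap:
    """Fuse multi-level features with softmax attention across levels.

    Assumption (not from the paper): each level's score is its global
    average times a hypothetical learnable scalar in `level_weights`.
    """
    scores = [w * (sum(f) / len(f)) for f, w in zip(levels, level_weights)]
    attn = softmax(scores)  # one weight per level, summing to 1
    fused = [0.0] * len(levels[0])
    for a, f in zip(attn, levels):
        for i, v in enumerate(f):
            fused[i] += a * v
    return fused


def linear(x: List[float], weights: Matrix) -> List[float]:
    """Toy dense layer without bias: y = W x."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def parallel_head(x: List[float], cls_tower: Matrix, reg_tower: Matrix,
                  cls_pred: Matrix, reg_pred: Matrix) -> Tuple[List[float], List[float]]:
    """Parallel head: both towers read the same input feature independently."""
    cls_feat = linear(x, cls_tower)
    reg_feat = linear(x, reg_tower)
    return linear(cls_feat, cls_pred), linear(reg_feat, reg_pred)


def sequential_head(x: List[float], cls_tower: Matrix, reg_tower: Matrix,
                    cls_pred: Matrix, reg_pred: Matrix) -> Tuple[List[float], List[float]]:
    """Sequential head sketch: the regression tower consumes the
    classification tower's features (assumed ordering), chaining the tasks."""
    cls_feat = linear(x, cls_tower)
    reg_feat = linear(cls_feat, reg_tower)  # the only change vs. parallel_head
    return linear(cls_feat, cls_pred), linear(reg_feat, reg_pred)


if __name__ == "__main__":
    print(scale_attention_fuse([[0.0, 0.0], [2.0, 4.0]], [0.0, 0.0]))
    swap, double = [[0.0, 1.0], [1.0, 0.0]], [[2.0, 0.0], [0.0, 2.0]]
    ident, summed = [[1.0, 0.0], [0.0, 1.0]], [[1.0, 1.0]]
    print(parallel_head([1.0, 2.0], swap, double, summed, ident))
    print(sequential_head([1.0, 2.0], swap, double, summed, ident))
```

Because `parallel_head` and `sequential_head` use the same four weight matrices, the sketch keeps the parameter count unchanged and, when the towers map C channels to C channels, the multiply-add count as well; only the data flow between the branches differs. This illustrates one way a head redesign can add no computation, under the assumptions above.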

翻译:现代物体探测网络追求一般物体探测数据集的更精准性,同时计算负担随着精确度的提高也在增加,但是,推断时间和精确度对于需要实时的物体探测系统都至关重要,必须研究精确性改进而无需额外计算费用。在这项工作中,提议采用两个模块,以零成本提高探测精确度,重点是FPN和普通物体探测网络的探测头改进。我们使用比例关注机制,以有效结合具有较低参数的多级特征图,称为SA-FPN模块。考虑到分类头和回归头的关联性,我们使用顺序头取代广泛使用的平行头,称为Seq-HEAD模块。为了评估有效性,我们将将这两个模块应用到一些现代化的状态物体探测网络上,包括锚定和无锚。对 Coco数据集的实验结果表明,使用两个模块的网络可以超过原有网络1.1 AP和0.8 AP,锚定和无锚定网络的费用分别为0.8 。代码将可在https://gitio./JTFGGl上查阅。