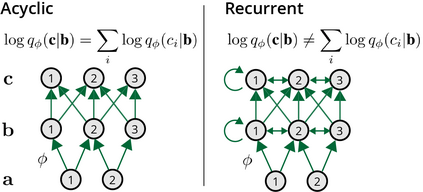

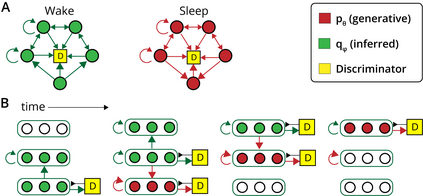

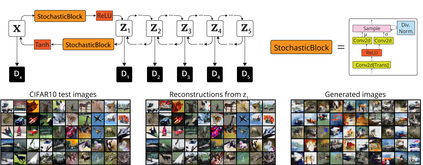

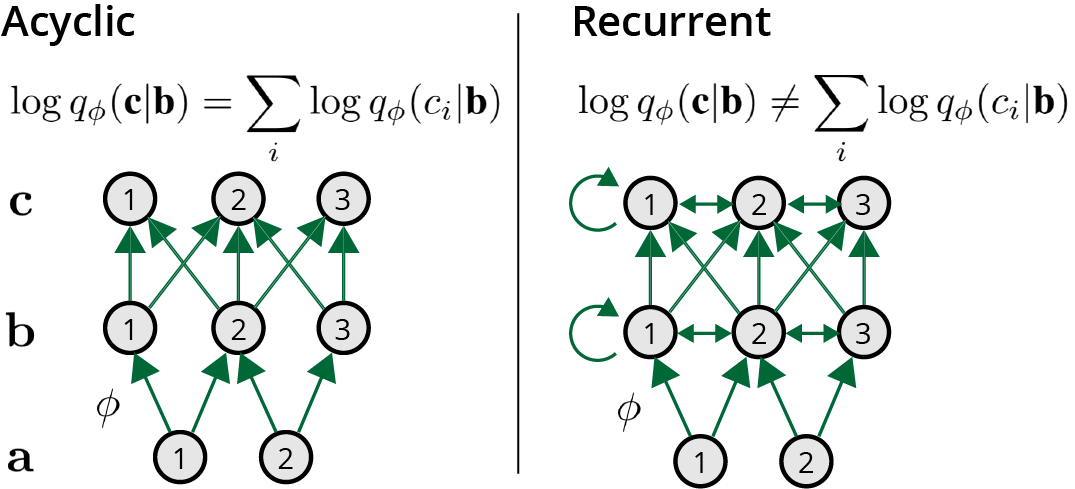

A popular theory of perceptual processing holds that the brain learns both a generative model of the world and a paired recognition model using variational Bayesian inference. Most hypotheses of how the brain might learn these models assume that neurons in a population are conditionally independent given their common inputs. This simplification is likely not compatible with the type of local recurrence observed in the brain. Seeking an alternative that is compatible with complex inter-dependencies yet consistent with known biology, we argue here that the cortex may learn with an adversarial algorithm. Many observable symptoms of this approach would resemble known neural phenomena, including wake/sleep cycles and oscillations that vary in magnitude with surprise, and we describe how further predictions could be tested. We illustrate the idea on recurrent neural networks trained to model image and video datasets. This framework for learning brings variational inference closer to neuroscience and yields multiple testable hypotheses.
