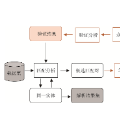

The same real-world entity (e.g., a movie, a restaurant, a person) may be described in different ways across datasets. Entity Resolution (ER) aims to find such different descriptions of the same entity, thereby improving data quality and, in turn, data value. However, an ER pipeline typically involves several steps (e.g., blocking, similarity estimation, clustering), each requiring its own configuration and tuning. Choosing the best configuration among a vast number of possible combinations is a dataset-specific and labor-intensive task for both novice and expert users, and it often requires some ground-truth knowledge of real matches. In this work, we examine ways of automatically configuring a state-of-the-art end-to-end ER pipeline based on pre-trained language models under two settings: (i) When ground truth is available. In this case, sampling strategies that are typically used for hyperparameter optimization can significantly restrict the search of the configuration space. We experimentally compare their relative effectiveness and time efficiency, applying them to ER pipelines for the first time. (ii) When no ground truth is available. In this case, labelled data extracted from other datasets with available ground truth can be used to train a regression model that predicts the relative effectiveness of parameter configurations. Experimenting with 11 ER benchmark datasets, we evaluate the relative performance of existing techniques that address each of these problems but have not been applied to ER before.
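As a rough illustration of setting (i), the sketch below applies random sampling, one common hyperparameter-optimization strategy, to a toy ER pipeline and scores each sampled configuration by F1 against known matches. Everything in it is an assumption made for illustration: the records, the parameter names (`q`, `k`, `threshold`), and the use of character q-gram Jaccard similarity as a stand-in for the language-model-based similarity of the actual pipeline. It is not the implementation evaluated in this work.

```python
import random

# Hypothetical toy data: two small datasets describing overlapping movies.
left = {0: "the godfather 1972", 1: "pulp fiction 1994", 2: "the matrix 1999"}
right = {0: "godfather (1972)", 1: "pulp fiction", 2: "matrix the 1999",
         3: "inception 2010"}
ground_truth = {(0, 0), (1, 1), (2, 2)}  # known (left_id, right_id) matches


def qgrams(text, q):
    """Return the set of character q-grams of the lower-cased text."""
    s = text.lower()
    return {s[i:i + q] for i in range(max(1, len(s) - q + 1))}


def jaccard(a, b):
    """Jaccard similarity of two sets (0.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def run_pipeline(config):
    """Toy pipeline: keep each left record's top-k candidates above a threshold."""
    matches = set()
    for i, l_text in left.items():
        scored = sorted(
            ((jaccard(qgrams(l_text, config["q"]), qgrams(r_text, config["q"])), j)
             for j, r_text in right.items()),
            reverse=True,
        )
        for sim, j in scored[:config["k"]]:
            if sim >= config["threshold"]:
                matches.add((i, j))
    return matches


def f1(predicted, truth):
    """F1 score of predicted match pairs against the ground truth."""
    tp = len(predicted & truth)
    if tp == 0:
        return 0.0
    precision, recall = tp / len(predicted), tp / len(truth)
    return 2 * precision * recall / (precision + recall)


def random_search(space, n_trials, seed=0):
    """Sample n_trials configurations uniformly and keep the best-scoring one."""
    rng = random.Random(seed)
    best_config, best_score = None, -1.0
    for _ in range(n_trials):
        config = {name: rng.choice(values) for name, values in space.items()}
        score = f1(run_pipeline(config), ground_truth)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


# Hypothetical configuration space: 3 * 3 * 5 = 45 combinations, 10 sampled.
space = {"q": [2, 3, 4], "k": [1, 2, 3], "threshold": [0.1, 0.3, 0.5, 0.7, 0.9]}
best_config, best_score = random_search(space, n_trials=10)
print(best_config, best_score)
```

Here only 10 of the 45 possible configurations are evaluated, which is the sense in which sampling restricts the search; a more informed sampler could replace the uniform `rng.choice` without changing the rest of the loop.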
