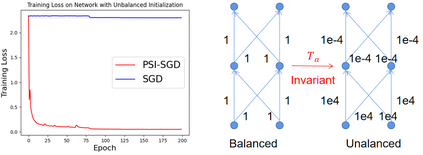

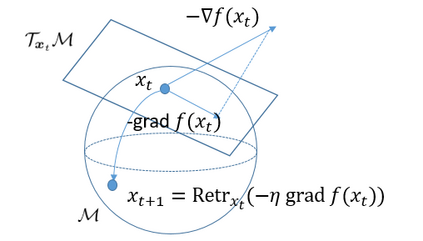

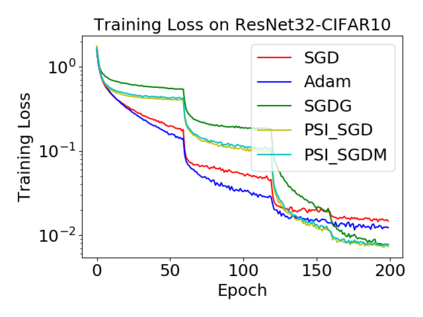

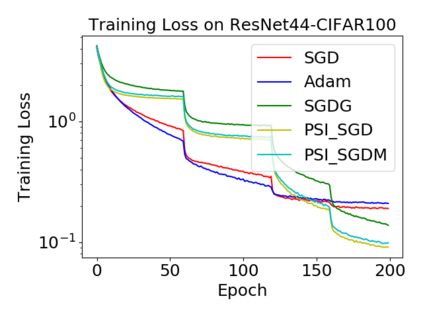

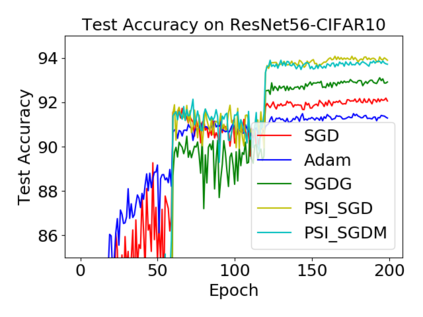

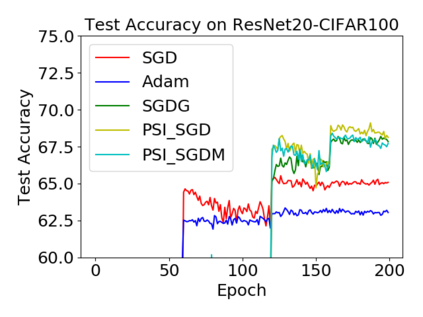

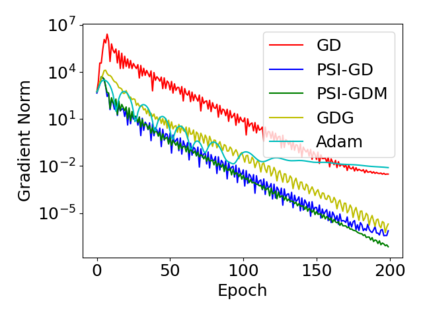

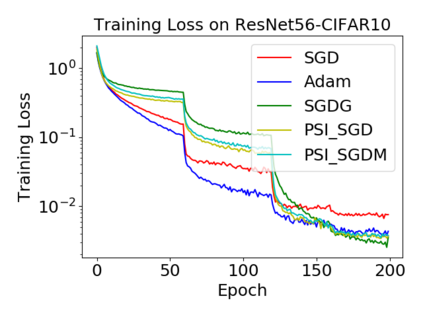

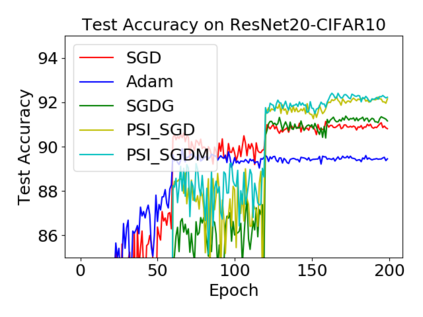

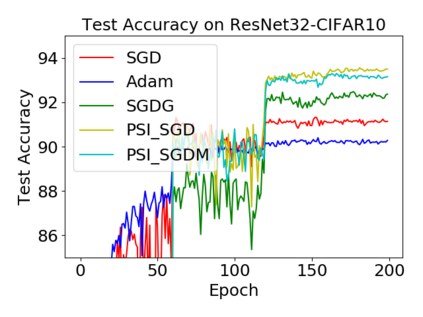

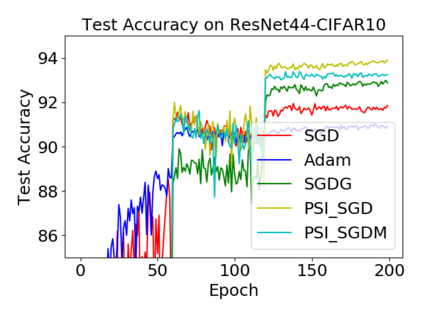

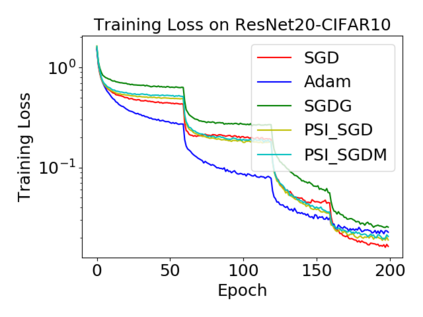

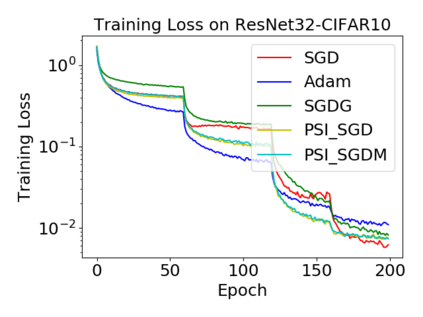

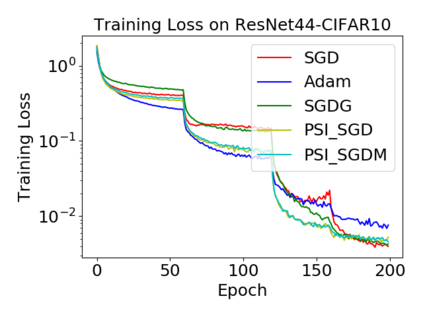

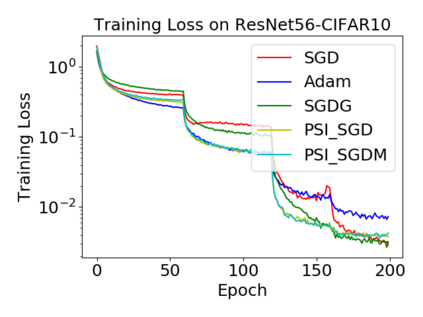

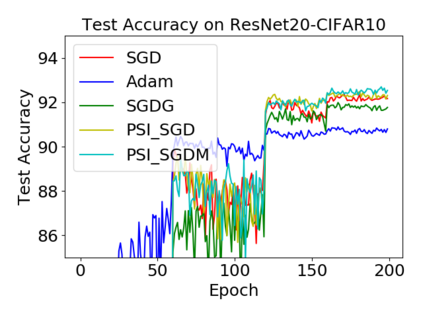

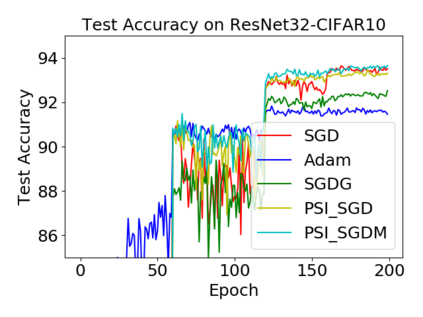

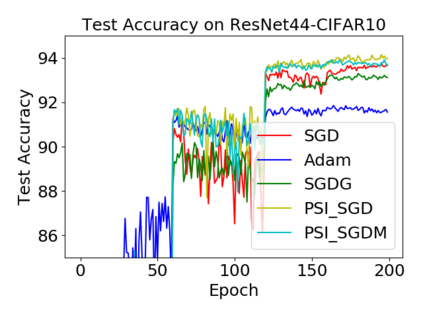

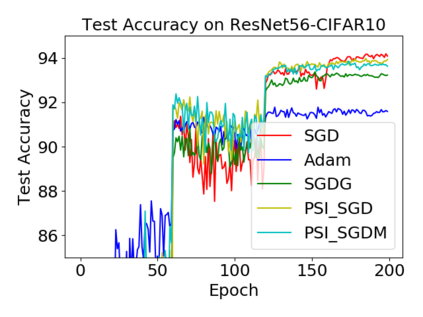

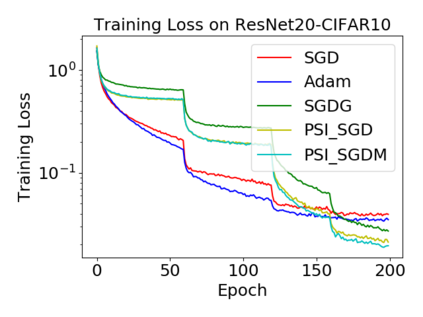

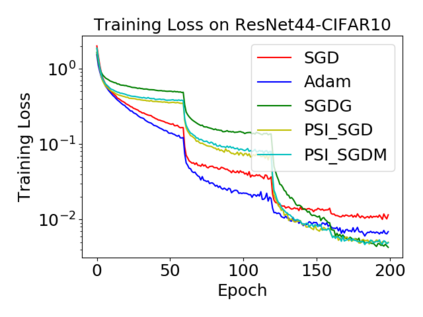

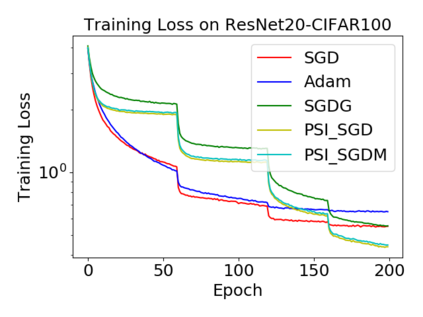

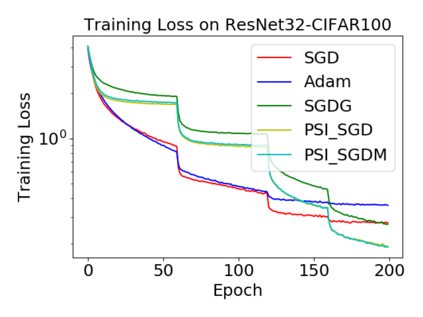

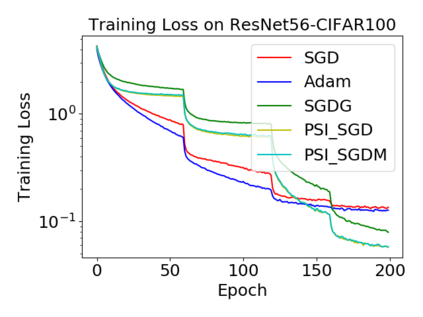

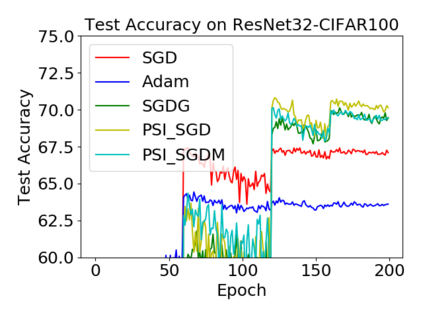

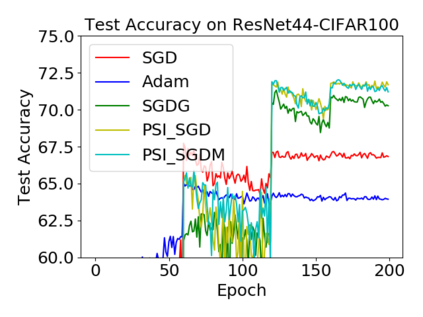

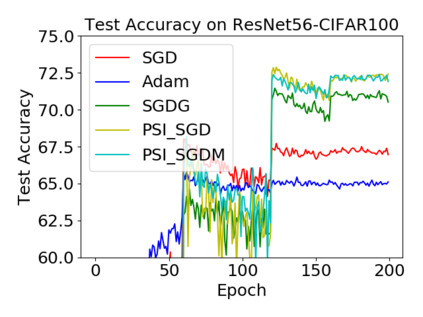

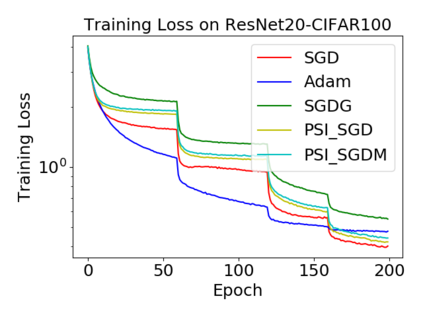

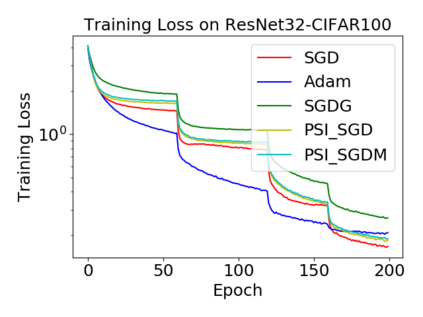

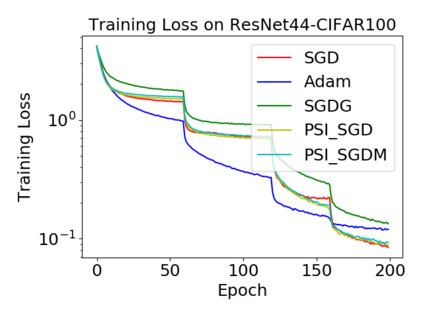

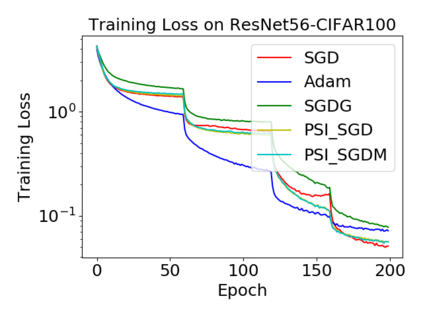

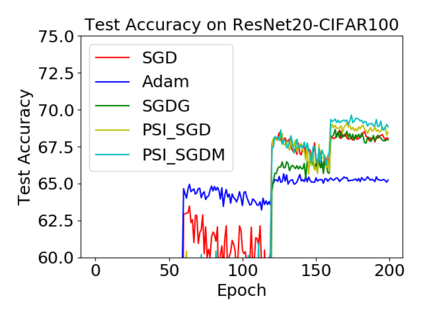

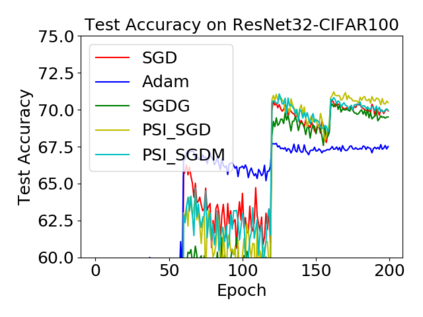

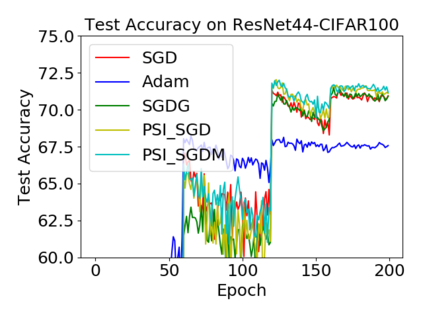

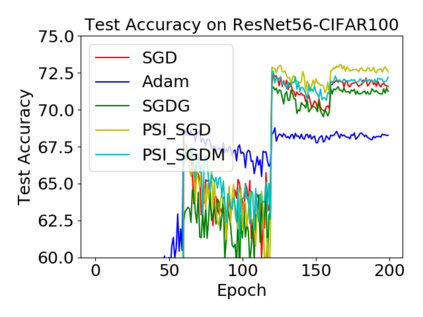

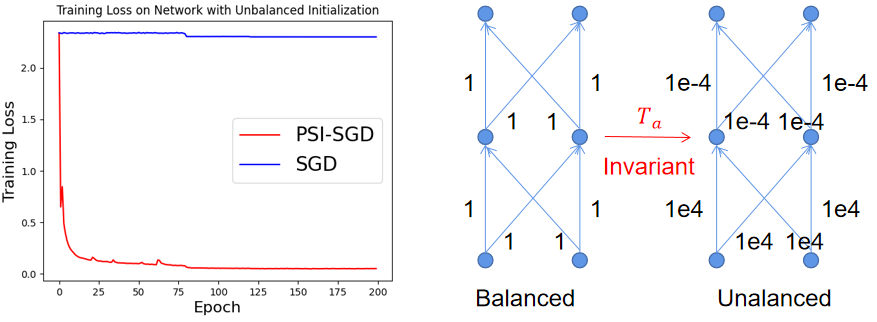

Batch normalization (BN) has become a critical component of many deep neural networks. A network with BN is invariant to positive linear rescaling of its weights, so there exist infinitely many functionally equivalent networks whose weights differ only in scale. However, training these equivalent networks with a first-order method such as stochastic gradient descent produces sequences of iterates that converge to different local optima, because their gradients differ throughout training. To avoid this, we propose a quotient manifold, the *PSI manifold*, in which all equivalent weights of a network with BN are regarded as the same element. We then construct gradient descent and stochastic gradient descent on the PSI manifold to train networks with BN. The two algorithms guarantee that every group of equivalent weights (related by positive rescaling) converges to equivalent optima. We also give convergence rates for the proposed algorithms on the PSI manifold. The results show that our methods accelerate training compared with the corresponding algorithms on the Euclidean weight space. Finally, empirical results verify that our algorithms consistently improve on existing methods in both convergence rate and generalization ability under various experimental settings.
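
The scale invariance that motivates the abstract can be checked numerically. The following is a minimal sketch, not the PSI-manifold algorithm: it assumes a toy model made of a single linear unit followed by batch normalization with `eps = 0` and no learned affine parameters. The helper names `batch_norm`, `loss`, and `num_grad`, and the random data, are hypothetical and chosen only for illustration. The sketch compares the loss and the finite-difference gradient at weights `w` and at the rescaled weights `a * w`.

```python
import math
import random


def batch_norm(z):
    """Normalize a batch of scalars to zero mean and unit variance.

    Assumption: eps = 0 and no affine parameters, so the invariance is exact.
    """
    mean = sum(z) / len(z)
    var = sum((v - mean) ** 2 for v in z) / len(z)
    return [(v - mean) / math.sqrt(var) for v in z]


def loss(w, xs, ys):
    """Mean squared error of one linear unit followed by batch normalization."""
    z = [sum(wi * xi for wi, xi in zip(w, x)) for x in xs]
    out = batch_norm(z)
    return sum((o - y) ** 2 for o, y in zip(out, ys)) / len(ys)


def num_grad(w, xs, ys, h=1e-6):
    """Central finite-difference gradient of the loss with respect to w."""
    grad = []
    for i in range(len(w)):
        w_plus = list(w)
        w_minus = list(w)
        w_plus[i] += h
        w_minus[i] -= h
        grad.append((loss(w_plus, xs, ys) - loss(w_minus, xs, ys)) / (2 * h))
    return grad


# Hypothetical toy data: 8 samples with 3 features each.
random.seed(0)
xs = [[random.gauss(0, 1) for _ in range(3)] for _ in range(8)]
ys = [random.gauss(0, 1) for _ in range(8)]

w = [0.5, -1.2, 0.8]
a = 4.0  # any positive scale factor
w_scaled = [a * wi for wi in w]

loss_w = loss(w, xs, ys)
loss_scaled = loss(w_scaled, xs, ys)
grad_w = num_grad(w, xs, ys)
grad_scaled = num_grad(w_scaled, xs, ys)

# Same function value, but the gradient shrinks by the factor 1 / a.
print("loss difference:", abs(loss_w - loss_scaled))
print("a * grad(a * w):", [a * g for g in grad_scaled])
print("grad(w):        ", grad_w)
# The gradient is also orthogonal to the weights themselves.
print("<grad(w), w>:   ", sum(g * wi for g, wi in zip(grad_w, w)))
```

In this toy setting the two weight vectors define the same function, yet the gradient at `a * w` is the gradient at `w` divided by `a`. A Euclidean gradient step with a fixed learning rate therefore moves the two equivalent weight vectors to points that are, in general, no longer rescalings of each other, which is the discrepancy the abstract describes.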
