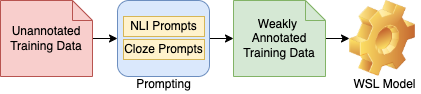

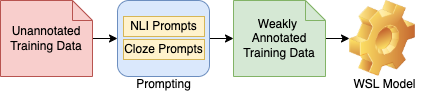

In Weak Supervised Learning (WSL), a model is trained on noisy labels obtained from semantic rules and task-specific pre-trained models. Rules generalize poorly across tasks and require significant manual effort, while pre-trained models are available for only a limited set of tasks. In this work, we propose using prompt-based methods as weak sources to obtain noisy labels on unannotated data. We show that task-agnostic prompts generalize and can be used to obtain noisy labels for different Spoken Language Understanding (SLU) tasks, such as sentiment classification, disfluency detection, and emotion classification. These prompts can additionally be updated with task-specific context, which gives the flexibility to design task-specific prompts. We demonstrate that prompt-based methods generate reliable labels for the above SLU tasks and can therefore serve as a universal weak source for training a weak-supervised model (WSM) in the absence of labeled data. Our proposed WSL pipeline, trained on the prompt-based weak source, outperforms other competitive low-resource benchmarks in zero- and few-shot learning by more than 4% Macro-F1 on all three benchmark SLU datasets. The proposed method also outperforms a conventional rule-based WSL pipeline by more than 5% Macro-F1.
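To make the idea of a prompt acting as a weak source concrete, here is a minimal sketch. It is not the authors' implementation: the abstract does not give the prompt templates, the label words, or how votes from several prompts are combined. The templates, the `VERBALIZER` mapping, the majority vote, and the `toy_mask_scores` function (a keyword-based stand-in for querying a pre-trained masked language model) are all assumptions made for illustration.

```python
from collections import Counter
from typing import Callable, Dict, List, Optional

# A scorer takes a prompt containing [MASK] and candidate fill-in words,
# and returns one score per candidate word.
Scorer = Callable[[str, List[str]], Dict[str, float]]

# Hypothetical task-agnostic templates; the paper's actual prompts are not given here.
TEMPLATES = [
    "{text} It was [MASK].",
    "{text} Overall, the speaker felt [MASK].",
]

# Hypothetical verbalizer: maps each task label to the word expected at [MASK].
VERBALIZER = {"positive": "good", "negative": "bad"}


def toy_mask_scores(prompt: str, candidates: List[str]) -> Dict[str, float]:
    """Stand-in for a pre-trained masked LM (assumption, keyword counting only).

    A real pipeline would return the model's score for each candidate word
    at the [MASK] position instead.
    """
    cues = {
        "good": {"love", "great", "happy"},
        "bad": {"hate", "awful", "terrible"},
    }
    tokens = set(prompt.lower().replace(".", " ").replace(",", " ").split())
    return {w: float(len(tokens & cues.get(w, set()))) for w in candidates}


def label_with_prompt(text: str, template: str,
                      verbalizer: Dict[str, str], scorer: Scorer) -> Optional[str]:
    """Return the noisy label from one prompt, or None (abstain) on a tie.

    Assumes the verbalizer has at least two labels.
    """
    prompt = template.format(text=text)
    scores = scorer(prompt, list(verbalizer.values()))
    ranked = sorted(verbalizer, key=lambda lab: scores[verbalizer[lab]], reverse=True)
    best, runner_up = ranked[0], ranked[1]
    if scores[verbalizer[best]] == scores[verbalizer[runner_up]]:
        return None
    return best


def weak_label(text: str, templates: List[str],
               verbalizer: Dict[str, str], scorer: Scorer) -> Optional[str]:
    """Combine the votes of several prompts by majority vote (an assumed rule)."""
    votes = [label_with_prompt(text, t, verbalizer, scorer) for t in templates]
    votes = [v for v in votes if v is not None]
    if not votes:
        return None
    return Counter(votes).most_common(1)[0][0]


if __name__ == "__main__":
    for utterance in ["I love this great phone", "This call was awful.",
                      "The meeting is at noon"]:
        print(utterance, "->", weak_label(utterance, TEMPLATES, VERBALIZER, toy_mask_scores))
```

In a sketch like this, the utterances that receive a label would form the noisy training set for the downstream weak-supervised model, while utterances on which every prompt abstains would simply be left unlabeled. Adapting it to another task would mean changing only the verbalizer, or adding task-specific wording to the templates.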
