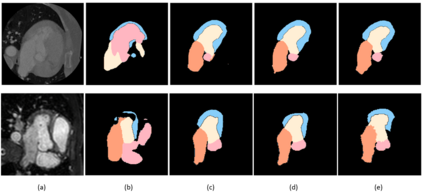

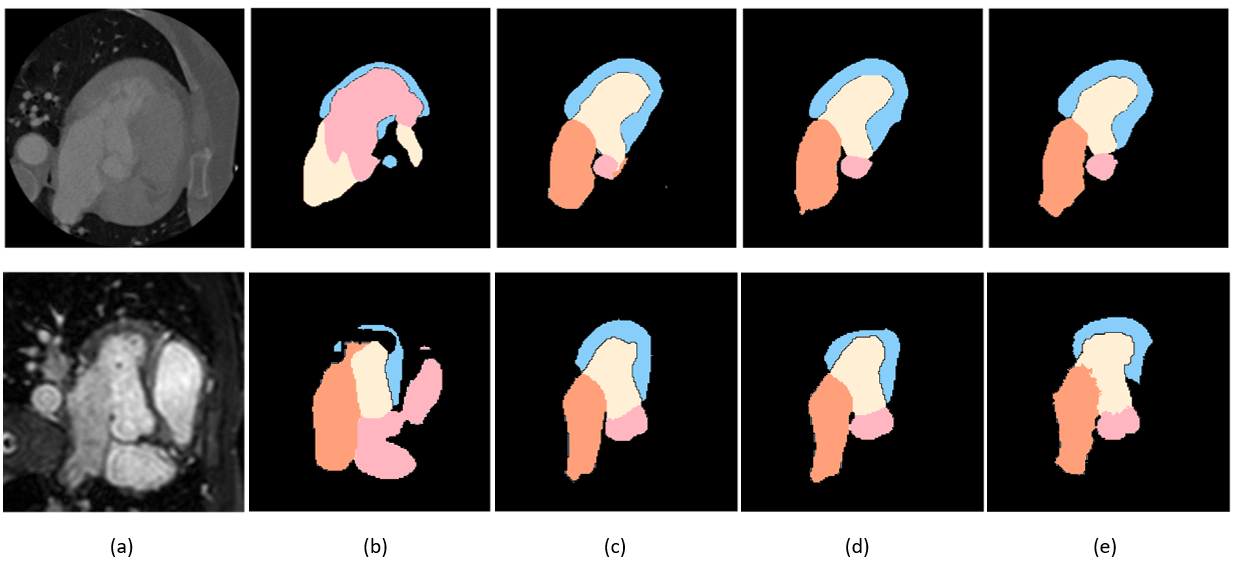

Image translation across domains for unpaired datasets has attracted growing interest and improved considerably in recent years. Medical imaging offers multiple modalities with very different characteristics. Our goal is to use cross-modality adaptation between whole-heart CT and MRI scans for semantic segmentation. We present a segmentation network that uses synthesized cardiac volumes to cope with extremely limited datasets. Our solution is based on a 3D cross-modality generative adversarial network (GAN) that shares information between modalities and generates synthesized data from unpaired datasets. The network uses semantic segmentation to improve generator shape consistency, producing more realistic synthesized volumes that are then used to re-train the segmentation network. We show that segmentation on small datasets improves when spatial augmentations are used to improve the GAN: the augmentations strengthen the generator, which in turn enhances the performance of the segmentor. Using only 16 CT and 16 MRI cardiovascular volumes, the proposed architecture achieves better results than other segmentation methods.
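The abstract does not say which spatial augmentations are used. As a minimal sketch of the general idea, assuming simple random flips and 90-degree rotations, the hypothetical helper `augment_pair` below applies the same transform to a 3D volume and its label map so that the segmentation stays aligned with the image. It is an illustration only and not the authors' implementation.

```python
import numpy as np


def augment_pair(volume, labels, rng):
    """Apply one random flip/rotation to a 3D volume and its label map.

    Assumption: flips and 90-degree rotations stand in for the (unspecified)
    spatial augmentations; both arrays share the same (D, H, W) shape.
    """
    assert volume.shape == labels.shape, "volume and labels must be aligned"

    # Flip each spatial axis independently with probability 0.5.
    for axis in range(3):
        if rng.random() < 0.5:
            volume = np.flip(volume, axis=axis)
            labels = np.flip(labels, axis=axis)

    # Rotate by a random multiple of 90 degrees in the plane of axes 1 and 2.
    k = int(rng.integers(0, 4))
    volume = np.rot90(volume, k=k, axes=(1, 2))
    labels = np.rot90(labels, k=k, axes=(1, 2))

    # np.flip and np.rot90 return views; copy into contiguous arrays.
    return np.ascontiguousarray(volume), np.ascontiguousarray(labels)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    vol = rng.random((8, 8, 8))               # stand-in for a CT or MRI volume
    lab = (vol > 0.5).astype(np.uint8)        # stand-in for a segmentation mask
    vol_aug, lab_aug = augment_pair(vol, lab, rng)
    print(vol_aug.shape, lab_aug.shape)
```

Because the transform is applied to the image and the mask together, the augmented pair can feed both the adversarial and the segmentation objectives without breaking the voxel-wise correspondence between them.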
