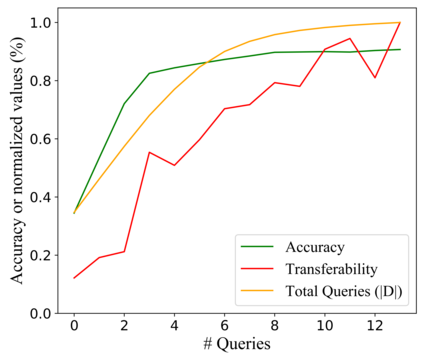

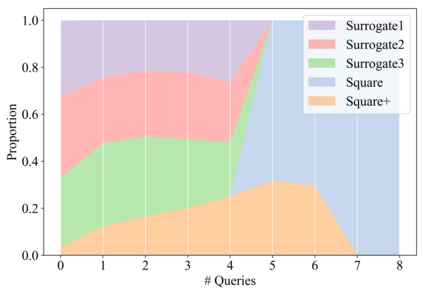

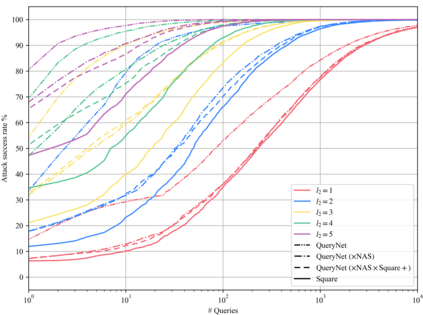

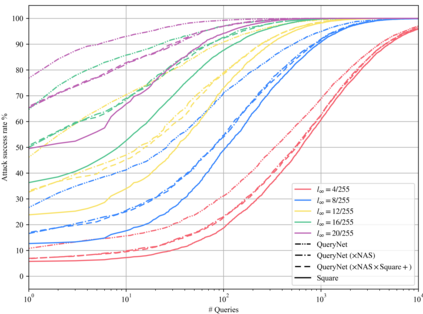

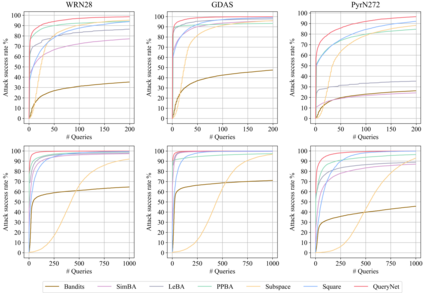

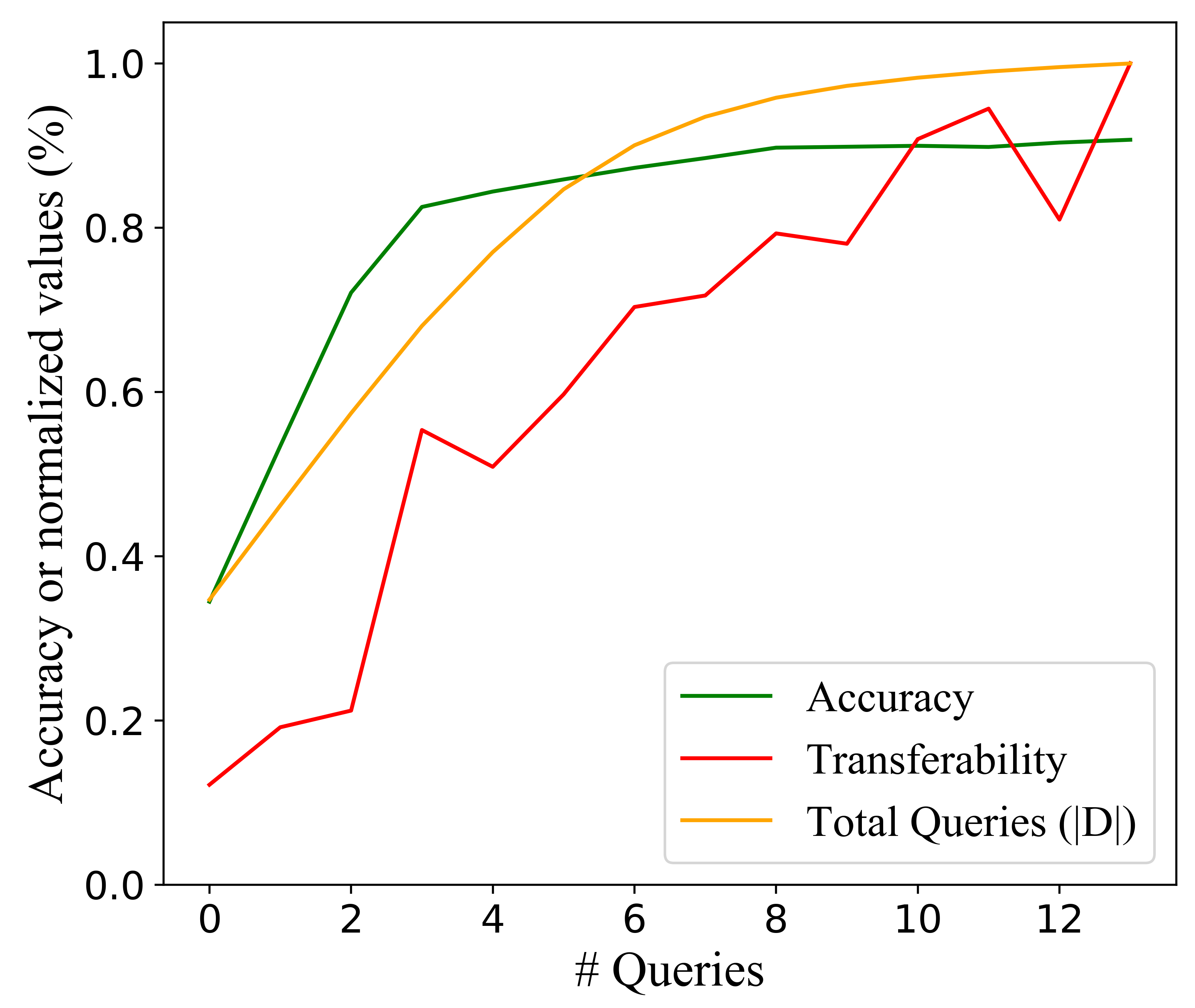

Deep Neural Networks (DNNs) are known to be vulnerable to adversarial attacks, but existing black-box attacks require extensive queries to the victim DNN to achieve high success rates. For query efficiency, surrogate models of the victim are adopted as transferable attackers because of their Gradient Similarity (GS), i.e., surrogates' attack gradients are similar to the victim's to some extent. However, their similarity on outputs, namely the Prediction Similarity (PS), is generally not exploited to filter out inefficient queries. To jointly utilize and optimize surrogates' GS and PS, we develop QueryNet, an efficient attack network that can significantly reduce queries. QueryNet crafts several transferable Adversarial Examples (AEs) with surrogates, then uses the surrogates again to decide on the most promising AE, which is sent to query the victim. That is, in QueryNet, surrogates serve not only as transferable attackers but also as transferability evaluators for AEs. The AEs are generated using surrogates' GS and evaluated based on their PS; the query results can therefore be back-propagated to optimize the surrogates' parameters and architectures, enhancing both the GS and the PS. QueryNet is highly query-efficient: it reduces queries by about an order of magnitude on average compared to recent SOTA methods in our comprehensive, real-world experiments, which cover 11 victims (including 2 commercial models) on MNIST/CIFAR10/ImageNet, allow only 8-bit image queries, and assume no access to the victim's training data.
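The craft, evaluate, query, and retrain loop described in the abstract can be illustrated with a deliberately small NumPy sketch. This is not the authors' implementation. The linear surrogates, the sign-gradient step, the squared-error fitting rule, and all names (`querynet_step`, `attack`, `victim_loss`) are assumptions chosen only to keep the example self-contained. The abstract's architecture optimization and 8-bit quantization are omitted.

```python
import numpy as np

def querynet_step(x, x_orig, eps, surrogates, victim_loss, history, lr=0.05):
    """One toy iteration: craft candidates, select one with surrogates, query once."""
    # 1) Each (assumed linear) surrogate crafts a candidate AE from its own
    #    gradient; for a linear loss w @ x the gradient w.r.t. x is w.
    candidates = []
    for w in surrogates:
        cand = np.clip(x - eps * np.sign(w), x_orig - eps, x_orig + eps)
        candidates.append(np.clip(cand, 0.0, 1.0))  # stay a valid image
    # 2) The surrogates score every candidate by their mean predicted loss,
    #    so unpromising candidates never reach the victim.
    scores = [np.mean([w @ c for w in surrogates]) for c in candidates]
    best = candidates[int(np.argmin(scores))]
    # 3) Only the most promising candidate costs a victim query.
    loss = victim_loss(best)
    history.append((best, loss))
    # 4) Query feedback refits the surrogates: one gradient step on the
    #    mean squared error over all (query, victim loss) pairs so far.
    for i, w in enumerate(surrogates):
        grad = sum(2.0 * (w @ xq - lq) * xq for xq, lq in history)
        surrogates[i] = w - lr * grad / len(history)
    return best, loss

def attack(x_orig, victim_loss, surrogates, eps=0.1, max_queries=20):
    """Run the toy loop until the victim loss is non-positive or queries run out."""
    x, best_loss = x_orig.copy(), victim_loss(x_orig)
    queries, history = 1, [(x_orig.copy(), best_loss)]
    while best_loss > 0 and queries < max_queries:
        cand, loss = querynet_step(x, x_orig, eps, surrogates,
                                   victim_loss, history)
        queries += 1
        if loss < best_loss:  # keep the candidate only if the victim agrees
            x, best_loss = cand, loss
    return x, best_loss, queries

# Usage with a hypothetical hidden linear "victim" exposed only through its loss.
rng = np.random.default_rng(0)
w_victim = rng.normal(size=8)
victim = lambda x: float(w_victim @ x + 0.5)
x0 = rng.uniform(0.2, 0.8, size=8)
surrogates = [rng.normal(size=8) for _ in range(3)]
x_adv, final_loss, n_queries = attack(x0, victim, surrogates)
print(n_queries, final_loss)
```

In this sketch the surrogates play both roles named in the abstract: they propose candidates and they rank them, so each iteration costs exactly one victim query regardless of how many candidates are crafted.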
