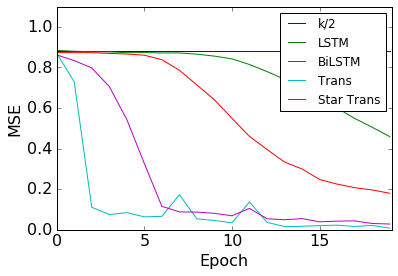

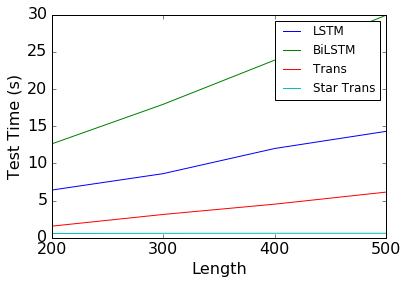

Although the Transformer has achieved great success on many NLP tasks, its heavy structure, with fully connected attention between all positions, makes it dependent on large amounts of training data. In this paper, we present Star-Transformer, a lightweight alternative obtained through careful sparsification. To reduce model complexity, we replace the fully connected structure with a star-shaped topology, in which every two non-adjacent nodes are connected through a shared relay node. As a result, complexity is reduced from quadratic to linear in the sequence length, while the model retains the capacity to capture both local composition and long-range dependencies. Experiments on four tasks (22 datasets) show that Star-Transformer achieves significant improvements over the standard Transformer on modestly sized datasets.
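
To make the complexity claim concrete, here is a minimal sketch of the connectivity alone, with no attention weights. It assumes that each token is linked directly only to its immediate left and right neighbours (a simple chain) and that every token is also linked to one extra relay node. The function names `full_edges`, `star_edges`, and `hops` are hypothetical illustrations, not the authors' code.

```python
from collections import deque
from itertools import combinations


def full_edges(n):
    """Fully connected attention: every pair of the n tokens is linked."""
    return {frozenset(pair) for pair in combinations(range(n), 2)}


def star_edges(n):
    """Star-shaped topology (a sketch, assuming a simple chain of neighbours):
    token i links to token i + 1, and every token links to one shared relay node,
    which is given index n."""
    relay = n
    chain = {frozenset((i, i + 1)) for i in range(n - 1)}  # adjacent tokens
    spokes = {frozenset((i, relay)) for i in range(n)}     # token <-> relay
    return chain | spokes


def hops(edges, src, dst):
    """Breadth-first search for the shortest path length between two nodes."""
    adj = {}
    for edge in edges:
        a, b = tuple(edge)
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    seen, queue = {src}, deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == dst:
            return dist
        for nxt in adj.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return -1  # unreachable


if __name__ == "__main__":
    n = 100
    print(len(full_edges(n)))              # 4950: n(n-1)/2, quadratic in n
    print(len(star_edges(n)))              # 199: 2n-1, linear in n
    print(hops(star_edges(n), 0, n - 1))   # 2: first and last token meet via the relay
```

With n tokens the fully connected graph has n(n-1)/2 edges, while this star sketch has 2n-1. Any two non-adjacent tokens remain exactly two hops apart through the relay, which is how long-range information can still flow despite the sparsification.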

翻译:虽然变异器在许多NLP任务上取得了巨大成功,但其具有完全连接关注连接的重体结构导致依赖大型培训数据。 在本文中,我们介绍星-变异器,这是一个由谨慎的超度组成的轻量替代品。为了降低模型复杂性,我们用一个恒星形状的地形学来取代完全连通的结构,在这个结构中,每两个非相邻的节点通过共享的中继节点连接。因此,复杂性从四面形到线形,同时保留捕捉本地构成和远程依赖的能力。对四个任务(22个数据集)的实验表明,星-变异器相对于规模不大的数据集的标准变异器,取得了显著的改进。