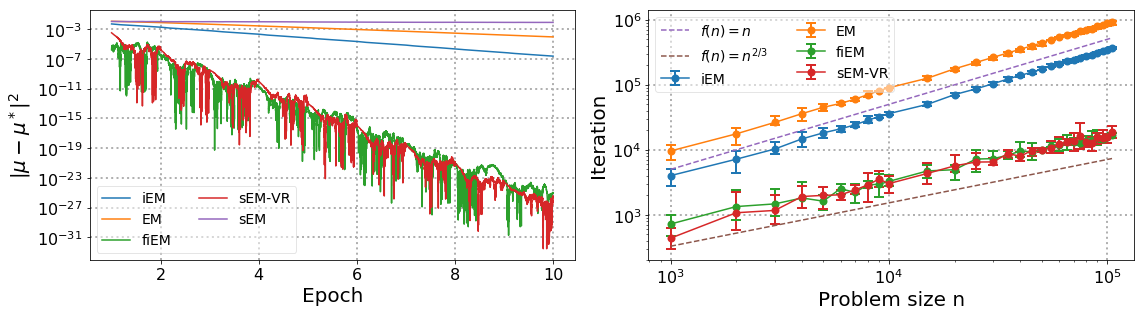

The EM algorithm is one of the most popular algorithm for inference in latent data models. The original formulation of the EM algorithm does not scale to large data set, because the whole data set is required at each iteration of the algorithm. To alleviate this problem, Neal and Hinton have proposed an incremental version of the EM (iEM) in which at each iteration the conditional expectation of the latent data (E-step) is updated only for a mini-batch of observations. Another approach has been proposed by Capp\'e and Moulines in which the E-step is replaced by a stochastic approximation step, closely related to stochastic gradient. In this paper, we analyze incremental and stochastic version of the EM algorithm as well as the variance reduced-version of Chen et. al. in a common unifying framework. We also introduce a new version incremental version, inspired by the SAGA algorithm by Defazio et. al. We establish non-asymptotic convergence bounds for global convergence. Numerical applications are presented in this article to illustrate our findings.

翻译:EM 算法是潜伏数据模型中最受欢迎的推算算法之一。 EM 算法的最初配方不是大数据集, 因为算法的每次迭代都需要整个数据集。 为了缓解这一问题, Neal 和 Hinton 提出了一个EM (iEM) 递增版本, 在每个迭代中, 对潜伏数据( E-step) 的有条件期望只为小型批量的观测更新。 Capp\'e 和 Molines 提出了另一种方法, 即E 步骤由与随机梯度密切相关的随机近似步骤取代。 在本文中, 我们分析了电离子算法的递增和随机版本, 以及在共同的统一框架中陈等人的变换变。 我们还引入了一个新版本的递增版本, 受Defazio 等人的SAGA 算法的启发。 我们为全球趋同建立了非抽调的趋同线。 本文章中介绍了数字应用来说明我们的调查结果。