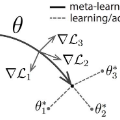

The aim of Few-Shot learning methods is to train models that can easily adapt to previously unseen tasks from small amounts of data. One of the most popular and elegant Few-Shot learning approaches is Model-Agnostic Meta-Learning (MAML). The main idea behind this method is to learn general weights for a meta-model, which are then adapted to specific problems in a small number of gradient steps. However, the model's main limitation is that the update procedure is realized by gradient-based optimization. As a result, MAML cannot always change the weights as much as a task requires in one or even a few gradient iterations. On the other hand, using many gradient steps results in a complex and time-consuming optimization procedure, which is hard to train in practice and may lead to overfitting. In this paper, we propose HyperMAML, a novel generalization of MAML in which the training of the update procedure is also part of the model. Namely, in HyperMAML, instead of updating the weights with gradient descent, we use a trainable Hypernetwork to produce the update. Consequently, in this framework, the model can generate significant updates whose range is not limited by a fixed number of gradient steps. Experiments show that HyperMAML consistently outperforms MAML and performs comparably to other state-of-the-art techniques on a number of standard Few-Shot learning benchmarks.
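
The contrast between the two update rules can be made concrete with a toy sketch. The code below is an illustration under strong assumptions, not the architecture used in the paper: the model is a one-dimensional linear regressor, and the "hypernetwork" is a hypothetical 2x2 linear map `phi` applied to residual statistics of the support set. The names `maml_adapt`, `hyper_adapt`, and `phi` are introduced here for illustration only. In this toy setting, one particular choice of `phi` reproduces a single gradient step, while other choices can produce updates of a different size or direction.

```python
# Illustrative sketch only: a 1-D linear model y = w * x + b.
# This is NOT the paper's architecture; all names here are hypothetical.

def predict(theta, x):
    w, b = theta
    return w * x + b

def mse_grad(theta, support):
    """Gradient of the mean squared error on the support set w.r.t. (w, b)."""
    n = len(support)
    gw = sum(2 * (predict(theta, x) - y) * x for x, y in support) / n
    gb = sum(2 * (predict(theta, x) - y) for x, y in support) / n
    return gw, gb

def maml_adapt(theta, support, lr=0.1, steps=1):
    """MAML-style adaptation: a fixed number of gradient steps from shared weights."""
    for _ in range(steps):
        gw, gb = mse_grad(theta, support)
        theta = (theta[0] - lr * gw, theta[1] - lr * gb)
    return theta

def hyper_adapt(theta, support, phi):
    """Hypernetwork-style adaptation: the update is the output of a trainable map.

    phi is a hypothetical 2x2 matrix (assumed to be learned jointly with theta)
    applied to residual statistics of the support set under the shared weights.
    """
    n = len(support)
    f0 = sum((y - predict(theta, x)) * x for x, y in support) / n
    f1 = sum((y - predict(theta, x)) for x, y in support) / n
    dw = phi[0][0] * f0 + phi[0][1] * f1
    db = phi[1][0] * f0 + phi[1][1] * f1
    return (theta[0] + dw, theta[1] + db)

# Usage: a three-point support set and shared weights initialised at zero.
support = [(0.0, 1.0), (1.0, 4.0), (2.0, 7.0)]
theta0 = (0.0, 0.0)
one_step = maml_adapt(theta0, support, lr=0.1, steps=1)
# With phi = 2 * lr * I, the generated update matches one gradient step.
generated = hyper_adapt(theta0, support, [[0.2, 0.0], [0.0, 0.2]])
```

Because `phi` is a free parameter in this sketch, the size of the generated update is not tied to a learning rate or a step count, which is the property the abstract attributes to the hypernetwork-based update.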
