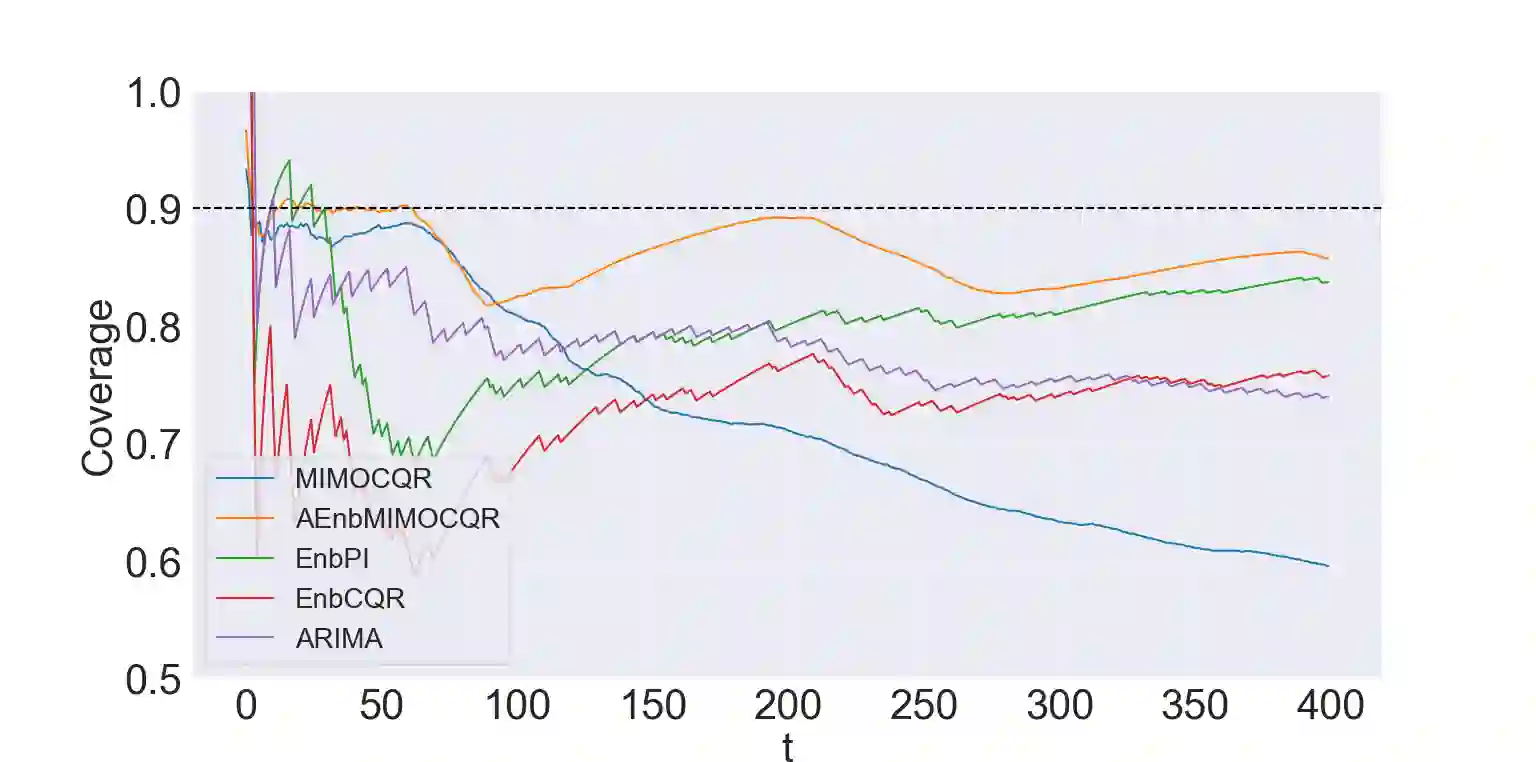

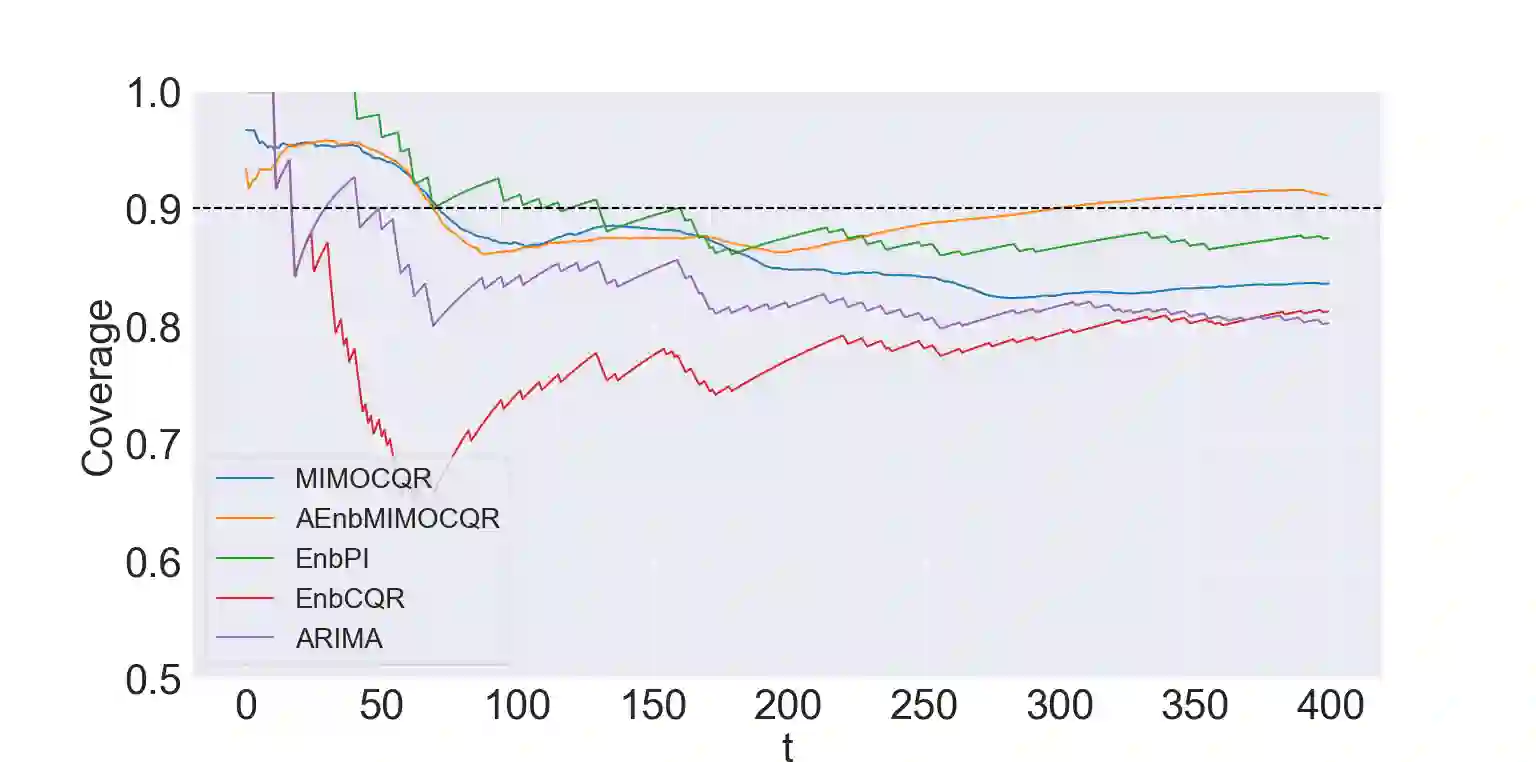

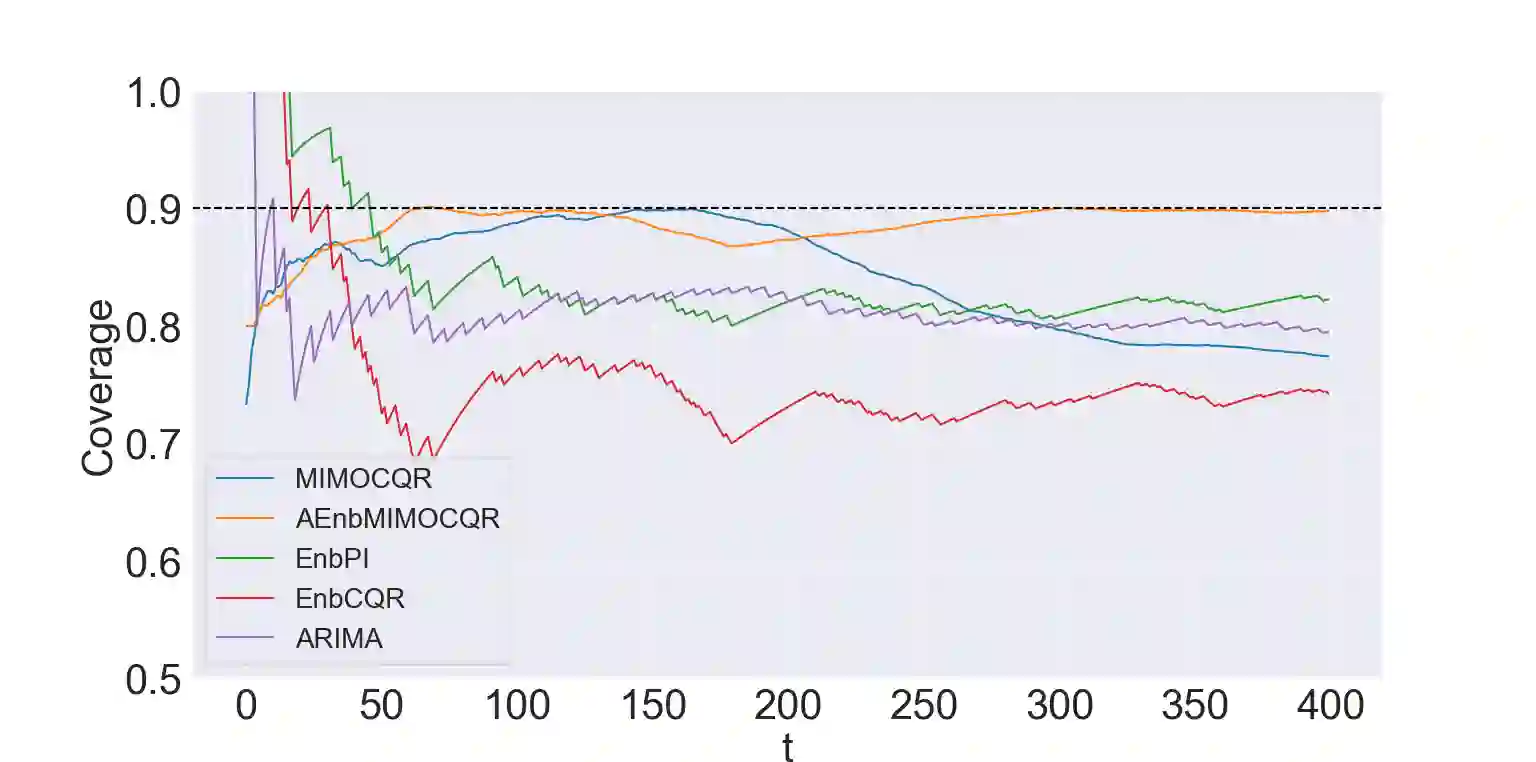

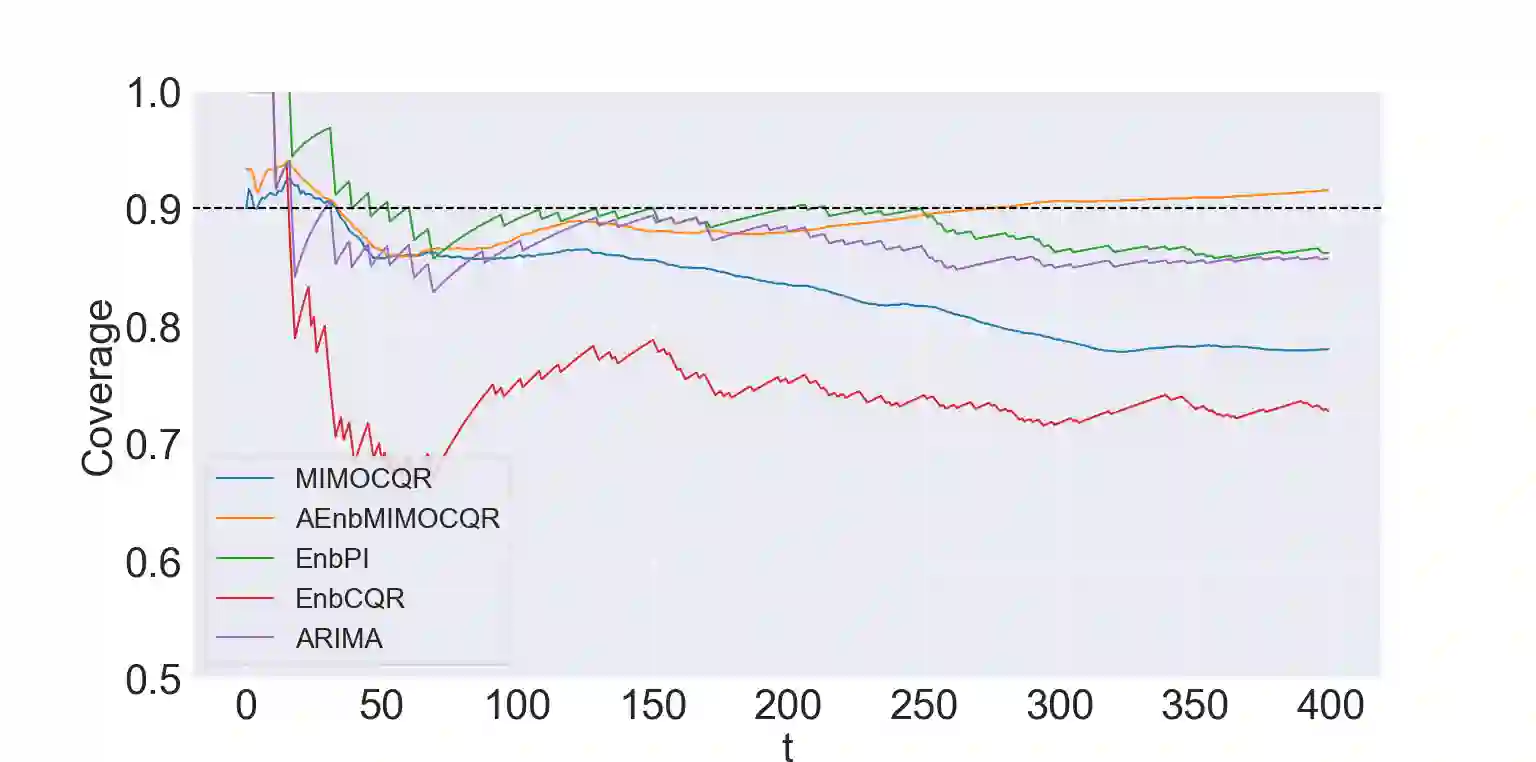

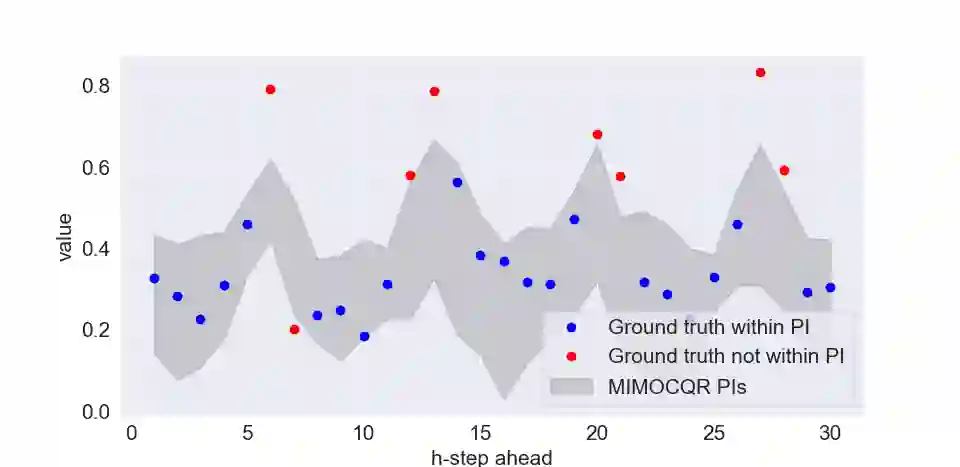

The exponential growth of machine learning (ML) has prompted great interest in quantifying the uncertainty of each prediction at a user-defined confidence level, since ML is increasingly used in high-stakes settings. Reliable ML via prediction intervals (PIs) that jointly account for epistemic and aleatory uncertainty is therefore imperative and is a step towards increased trust in model forecasts. Conformal prediction (CP) is a lightweight, distribution-free uncertainty quantification framework that works for any black-box model, yielding PIs that are valid under the mild assumption of exchangeability. CP-type methods are gaining popularity because they are easy to implement and computationally cheap; however, the exchangeability assumption immediately rules out time series forecasting, where observations are temporally dependent. Although recent papers tackle distribution shift and asymptotic versions of CP, this is not enough for the general time series forecasting problem of producing valid H-step-ahead PIs. To this end, we propose a new method called AEnbMIMOCQR (adaptive ensemble batch multi-input multi-output conformalized quantile regression), which produces asymptotically valid PIs and is appropriate for heteroscedastic time series. We compare the proposed method against competitive state-of-the-art methods on the NN5 forecasting competition dataset. All the code and data to reproduce the experiments are made available.
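For readers unfamiliar with the building block behind the method's name, the following is a minimal sketch of standard split conformalized quantile regression (CQR), the exchangeable-data baseline that the abstract says breaks down for time series. It is not the proposed AEnbMIMOCQR method. The function names `cqr_correction` and `conformalize` are hypothetical, and the lower and upper quantile predictions are assumed to come from any already fitted quantile regressor.

```python
import math
import numpy as np


def cqr_correction(y_cal, lo_cal, hi_cal, alpha):
    """Conformal correction for split CQR (hypothetical helper name).

    y_cal:  observed targets on a held-out calibration set
    lo_cal: predicted lower quantiles on that set
    hi_cal: predicted upper quantiles on that set
    alpha:  miscoverage level, e.g. 0.1 for 90% intervals
    """
    y_cal = np.asarray(y_cal, dtype=float)
    lo_cal = np.asarray(lo_cal, dtype=float)
    hi_cal = np.asarray(hi_cal, dtype=float)

    # CQR nonconformity score: positive when y falls outside [lo, hi],
    # negative when it lies strictly inside.
    scores = np.maximum(lo_cal - y_cal, y_cal - hi_cal)

    # Finite-sample rank: the ceil((n + 1) * (1 - alpha))-th smallest score.
    n = scores.size
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        # Too few calibration points for this alpha: the interval is unbounded.
        return math.inf
    return float(np.sort(scores)[k - 1])


def conformalize(lo, hi, q):
    """Widen (or shrink, if q < 0) the raw quantile band by q on both sides."""
    lo = np.asarray(lo, dtype=float)
    hi = np.asarray(hi, dtype=float)
    return lo - q, hi + q
```

The coverage guarantee of this sketch relies on the calibration and test points being exchangeable, which is exactly the assumption that fails under temporal dependence and that motivates an adaptive, multi-step variant.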
