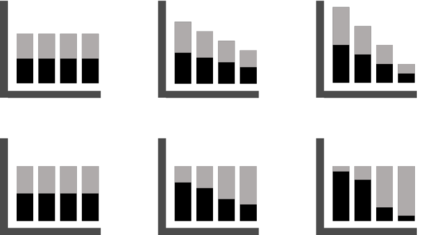

Deep network models perform excellently on In-Distribution (ID) data but can fail significantly on Out-Of-Distribution (OOD) data. While newly developed methods focus on improving OOD generalization, little attention has been paid to evaluating how well models actually handle OOD data. This study analyzes the problems of the conventional ID test and designs an OOD test paradigm to accurately evaluate practical performance. Our analysis builds on a categorization, introduced here, of three types of distribution shifts used to generate OOD data. The main observations are: (1) the ID test neither reflects the actual performance of a single model nor reliably compares different models on OOD data; (2) this failure of the ID test can be ascribed to the marginal and conditional spurious correlations that models learn as a result of the corresponding distribution shifts. Based on this, we propose novel OOD test paradigms to evaluate the generalization capacity of models on unseen data, and discuss how to use OOD test results to find model bugs and guide model debugging.
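
The two observations can be made concrete with a toy example. This sketch is our own illustration, not the paper's OOD test paradigm or its three-way shift categorization: it assumes binary data with one "core" feature and one "spurious" feature, made-up agreement probabilities, and a hypothetical decision-stump learner (`make_split`, `fit_stump` and `accuracy` are illustrative names). A model that latches onto the spurious feature during training looks best on an ID test drawn from the training distribution, yet degrades once the spurious correlation is reversed.

```python
import random

# Toy illustration (assumed setup, not the paper's method).
CORE, SPURIOUS = 0, 1  # indices of the two binary input features


def make_split(n, p_core, p_spurious, seed):
    """Sample (features, label) pairs; each feature equals y with the given probability."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        y = rng.randint(0, 1)
        core = y if rng.random() < p_core else 1 - y
        spurious = y if rng.random() < p_spurious else 1 - y
        data.append(((core, spurious), y))
    return data


def accuracy(feature, data):
    """Accuracy of the classifier that simply predicts y = x[feature]."""
    return sum(x[feature] == y for x, y in data) / len(data)


def fit_stump(data):
    """Hypothetical 'training': keep whichever single feature best predicts y."""
    return max((CORE, SPURIOUS), key=lambda f: accuracy(f, data))


# Training and ID test share one distribution: the spurious feature agrees with y 95% of the time.
train = make_split(5000, p_core=0.75, p_spurious=0.95, seed=0)
id_test = make_split(5000, p_core=0.75, p_spurious=0.95, seed=1)
# OOD test: the core relation is unchanged, but the spurious correlation is reversed.
ood_test = make_split(5000, p_core=0.75, p_spurious=0.10, seed=2)

learned = fit_stump(train)
for name, split in [("ID", id_test), ("OOD", ood_test)]:
    print(f"{name:>3}: learned={accuracy(learned, split):.2f}  "
          f"core-only={accuracy(CORE, split):.2f}")
```

Under these assumed probabilities the learned model picks the spurious feature and scores roughly 0.95 on the ID test against roughly 0.75 for the core-only model, but on the OOD test it drops to roughly 0.10 while the core-only model stays near 0.75. The ID test therefore both overstates the learned model and ranks the two models in the wrong order.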
