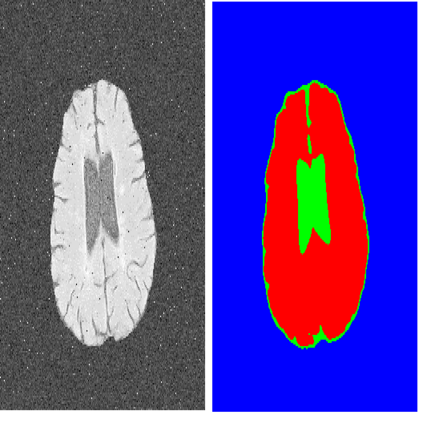

Fully Convolutional Neural Networks (FCNNs) with contracting and expansive paths (e.g. encoder and decoder) have become prominent in various medical image segmentation applications in recent years. In these architectures, the encoder plays an integral role by learning global contextual representations, which the decoder then uses for semantic output prediction. Despite their success, the locality of convolutional layers, the main building block of FCNNs, limits the ability of such networks to learn long-range spatial dependencies. Inspired by the recent success of transformers in Natural Language Processing (NLP) in long-range sequence learning, we reformulate the task of volumetric (3D) medical image segmentation as a sequence-to-sequence prediction problem. In particular, we introduce a novel architecture, dubbed UNEt TRansformers (UNETR), that utilizes a pure transformer as the encoder to learn sequence representations of the input volume and effectively capture global multi-scale information. The transformer encoder is directly connected to a decoder via skip connections at different resolutions to compute the final semantic segmentation output. We have extensively validated the performance of our proposed model across different imaging modalities (i.e. MR and CT) on volumetric brain tumour and spleen segmentation tasks using the Medical Segmentation Decathlon (MSD) dataset, and our results consistently demonstrate favorable benchmark performance.
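
To make the sequence-to-sequence reformulation more concrete, the following minimal Python sketch shows one way a 3D volume could be turned into a sequence of tokens for a transformer encoder. It assumes non-overlapping cubic patches that are flattened in raster order, as is common in vision transformers; the abstract does not specify the tokenization, so the function name `volume_to_patch_sequence`, the patch size, and the toy volume are all illustrative assumptions rather than the authors' implementation.

```python
from itertools import product


def volume_to_patch_sequence(volume, patch):
    """Split a 3D volume (nested lists indexed [h][w][d]) into a sequence
    of flattened, non-overlapping cubic patches of side `patch`.

    Hypothetical helper for illustration; not taken from the UNETR code.
    """
    H, W, D = len(volume), len(volume[0]), len(volume[0][0])
    if H % patch or W % patch or D % patch:
        raise ValueError("each dimension must be divisible by the patch size")

    sequence = []
    # Visit patch origins in raster order; each patch becomes one token.
    for h0, w0, d0 in product(range(0, H, patch),
                              range(0, W, patch),
                              range(0, D, patch)):
        token = [
            volume[h][w][d]
            for h in range(h0, h0 + patch)
            for w in range(w0, w0 + patch)
            for d in range(d0, d0 + patch)
        ]
        sequence.append(token)
    return sequence


# Toy 4x4x4 volume whose voxel value encodes its own (h, w, d) coordinates.
volume = [[[100 * h + 10 * w + d for d in range(4)]
           for w in range(4)]
          for h in range(4)]

tokens = volume_to_patch_sequence(volume, patch=2)
print(len(tokens), len(tokens[0]))  # number of tokens, voxels per token
```

With a 4x4x4 volume and a patch size of 2, this yields 8 tokens of 8 voxels each. In a real model each flattened patch would typically be linearly projected to an embedding and combined with a positional encoding before entering the transformer; those steps are omitted here.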
