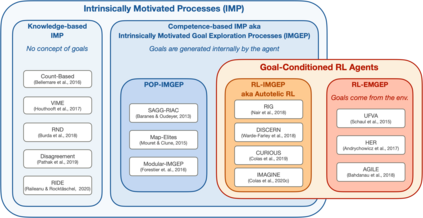

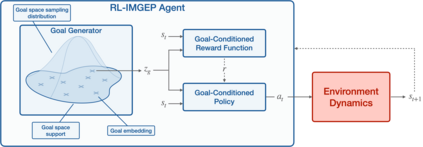

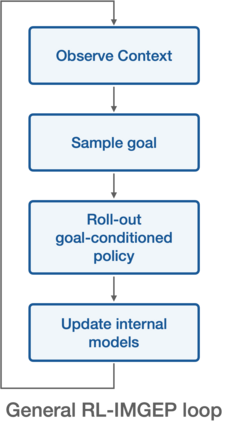

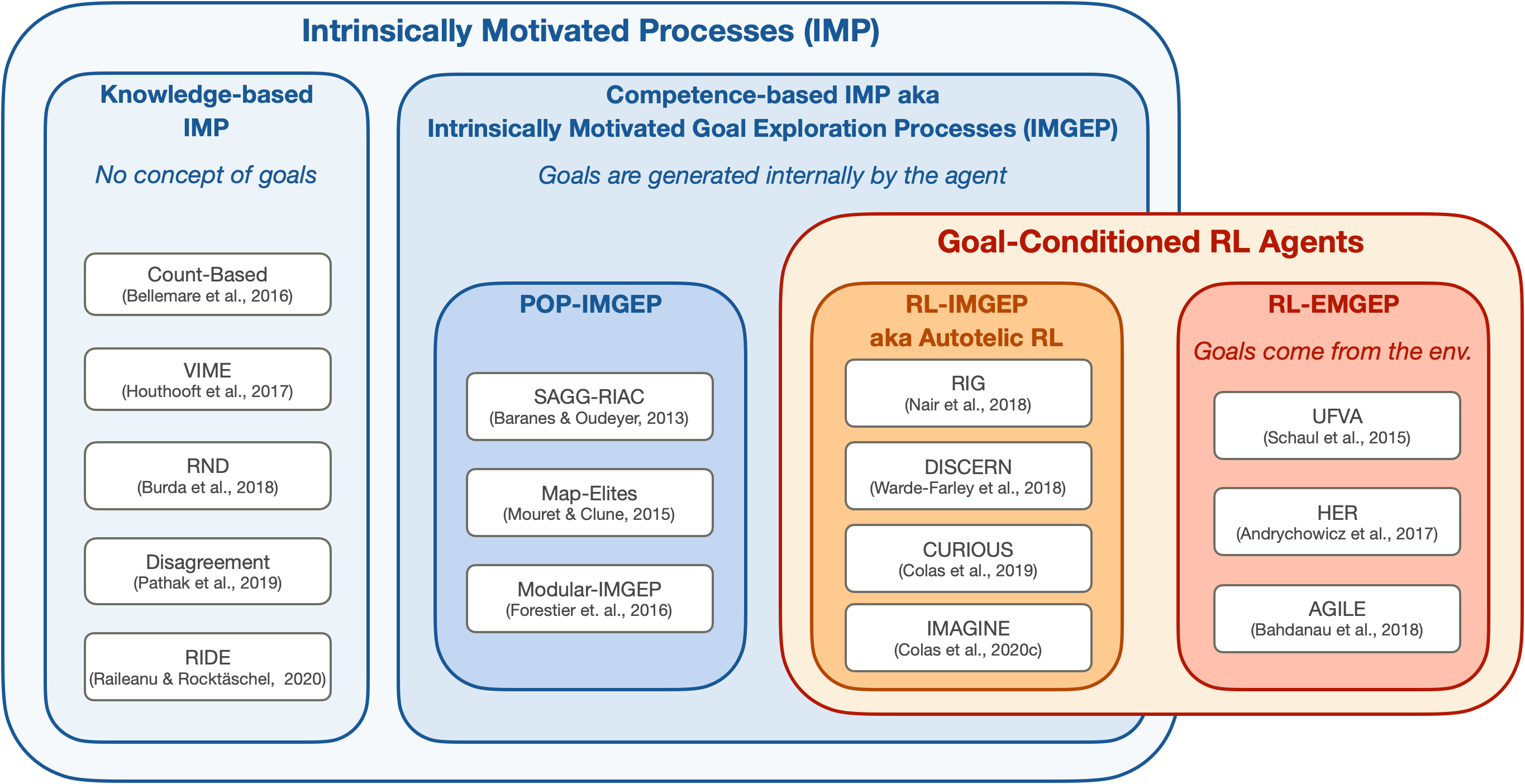

Building autonomous machines that can explore open-ended environments, discover possible interactions and autonomously build repertoires of skills is a general objective of artificial intelligence. Developmental approaches argue that this can only be achieved by autonomous and intrinsically motivated learning agents that can generate, select and learn to solve their own problems. In recent years, we have seen a convergence of developmental approaches, and developmental robotics in particular, with deep reinforcement learning (RL) methods, forming the new domain of developmental machine learning. Within this new domain, we review here a set of methods where deep RL algorithms are trained to tackle the developmental robotics problem of the autonomous acquisition of open-ended repertoires of skills. Intrinsically motivated goal-conditioned RL algorithms train agents to learn to represent, generate and pursue their own goals. The self-generation of goals requires the learning of compact goal encodings as well as their associated goal-achievement functions, which results in new challenges compared to traditional RL algorithms designed to tackle pre-defined sets of goals using external reward signals. This paper proposes a typology of these methods at the intersection of deep RL and developmental approaches, surveys recent approaches and discusses future avenues.
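To make the two ingredients named above concrete (self-generated goals and an associated goal-achievement function), here is a minimal sketch. It is not the method of any surveyed paper. It assumes a hypothetical 7-cell line world, and it swaps tabular Q-learning in for deep RL so that it stays self-contained. All names (`step`, `goal_achieved`, `sample_goal`, `train`, `rollout`) are illustrative assumptions. The agent samples its own goals uniformly, rewards itself through `goal_achieved` rather than through an external reward signal, and learns a single value table conditioned on the goal.

```python
import random

N_STATES = 7         # positions 0..6 on a line (hypothetical toy environment)
ACTIONS = (-1, +1)   # action index 0 = step left, 1 = step right


def step(state, action):
    """Deterministic transition, clipped to the ends of the line."""
    return max(0, min(N_STATES - 1, state + action))


def goal_achieved(state, goal):
    """Goal-achievement function: internal reward of 1.0 once the goal is reached."""
    return 1.0 if state == goal else 0.0


def sample_goal(rng):
    """Self-generated goal; assumed here to be uniform over the goal space."""
    return rng.randrange(N_STATES)


def train(episodes=3000, horizon=12, gamma=0.9, seed=0):
    """Goal-conditioned tabular Q-learning with a uniformly random behaviour policy.

    Random behaviour is acceptable because Q-learning is off-policy. The step
    size is implicitly 1.0, which is exact for deterministic transitions.
    """
    rng = random.Random(seed)
    # One table shared across goals: entries are indexed by (state, goal, action).
    q = {(s, g, a): 0.0
         for s in range(N_STATES)
         for g in range(N_STATES)
         for a in range(len(ACTIONS))}
    for _ in range(episodes):
        goal = sample_goal(rng)              # the agent picks its own goal
        state = rng.randrange(N_STATES)
        for _ in range(horizon):
            if state == goal:
                break
            a = rng.randrange(len(ACTIONS))
            nxt = step(state, ACTIONS[a])
            reward = goal_achieved(nxt, goal)  # self-computed, not external
            if reward > 0:
                target = reward
            else:
                target = gamma * max(q[(nxt, goal, i)] for i in range(len(ACTIONS)))
            q[(state, goal, a)] = target
            state = nxt
    return q


def rollout(q, start, goal, horizon=12):
    """Follow the greedy goal-conditioned policy; return True if the goal is reached."""
    state = start
    for _ in range(horizon):
        if state == goal:
            return True
        a = max(range(len(ACTIONS)), key=lambda i: q[(state, goal, i)])
        state = step(state, ACTIONS[a])
    return state == goal


if __name__ == "__main__":
    q_table = train()
    print(rollout(q_table, start=0, goal=6))
```

In the methods the abstract refers to, the goal space, the goal sampler, and the achievement function may themselves be learned; this sketch fixes all three by hand to keep the structure visible.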
