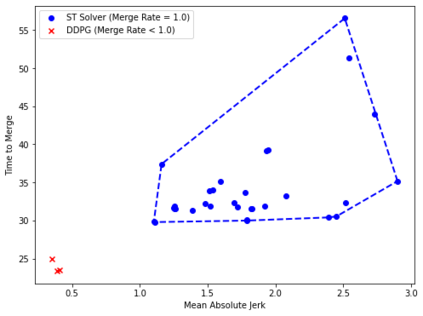

We consider the problem of designing an algorithm that allows a car to autonomously merge onto a highway from an on-ramp. Two broad classes of techniques have been proposed to solve motion planning problems in autonomous driving: Model Predictive Control (MPC) and Reinforcement Learning (RL). In this paper, we first use simulations to establish the strengths and weaknesses of state-of-the-art MPC and RL-based techniques. We show that the RL agent performs worse than the MPC solution in terms of safety and robustness to out-of-distribution traffic patterns, i.e., traffic patterns that the RL agent did not see during training. On the other hand, the RL agent outperforms the MPC solution in terms of efficiency and passenger comfort. We then present an algorithm that blends the model-free RL agent with the MPC solution and show that it provides better trade-offs across all metrics: passenger comfort, efficiency, crash rate, and robustness.
