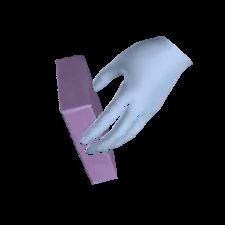

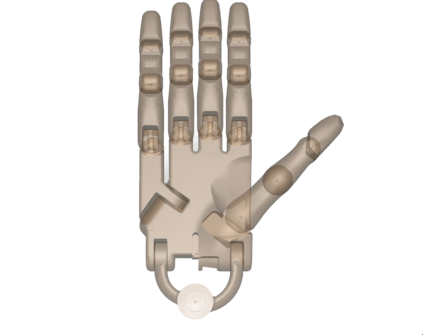

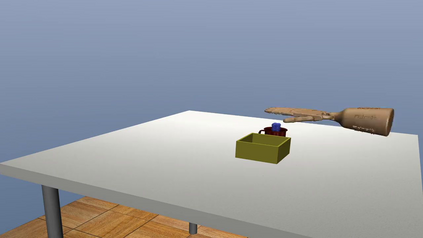

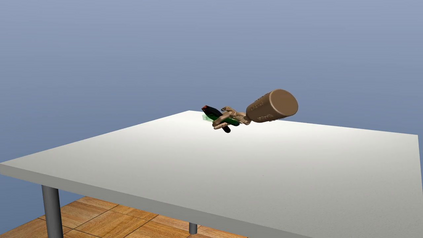

While we have made significant progress in understanding hand-object interactions in computer vision, it is still very challenging for robots to perform complex dexterous manipulation. In this paper, we propose a new platform and pipeline, DexMV (Dex Manipulation from Videos), for imitation learning to bridge the gap between computer vision and robot learning. We design a platform with: (i) a simulation system for complex dexterous manipulation tasks with a multi-finger robot hand and (ii) a computer vision system to record large-scale demonstrations of a human hand conducting the same tasks. In our new pipeline, we extract 3D hand and object poses from the videos, and convert them to robot demonstrations via motion retargeting. We then apply and compare multiple imitation learning algorithms with the demonstrations. We show that the demonstrations can indeed improve robot learning by a large margin and solve complex tasks that reinforcement learning alone cannot solve. Project page with video: https://yzqin.github.io/dexmv/
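The abstract names motion retargeting as the step that turns estimated human hand poses into robot demonstrations, but it does not describe how that step works. As a rough illustration only, here is a minimal sketch of the idea for a single toy finger, assuming a planar two-joint robot finger with hypothetical link lengths `ROBOT_L1` and `ROBOT_L2` and a hypothetical human-to-robot `scale` factor. It scales each human fingertip position into the robot's workspace, clamps it to the reachable region, and solves closed-form two-link inverse kinematics. This is not the DexMV retargeting method, which operates on full 3D hand poses for a multi-finger hand; all names here are illustrative.

```python
import math
from typing import List, Tuple

# Hypothetical link lengths (metres) of a toy two-joint planar robot finger.
# Illustrative values only, not parameters of any real robot hand.
ROBOT_L1 = 0.045
ROBOT_L2 = 0.025


def forward_kinematics(q1: float, q2: float) -> Tuple[float, float]:
    """Fingertip position of the planar finger, relative to its base joint."""
    x = ROBOT_L1 * math.cos(q1) + ROBOT_L2 * math.cos(q1 + q2)
    y = ROBOT_L1 * math.sin(q1) + ROBOT_L2 * math.sin(q1 + q2)
    return x, y


def retarget_fingertip(human_tip: Tuple[float, float], scale: float) -> Tuple[float, float]:
    """Map one human fingertip position (relative to the finger base) to robot joint angles.

    The human position is scaled into the robot's workspace, clamped to the
    reachable annulus, then solved with closed-form two-link inverse kinematics.
    """
    x, y = scale * human_tip[0], scale * human_tip[1]
    d = math.hypot(x, y)
    # Keep the target inside the reachable annulus |L1 - L2| < d < L1 + L2.
    eps = 1e-9
    d_min = abs(ROBOT_L1 - ROBOT_L2) + eps
    d_max = ROBOT_L1 + ROBOT_L2 - eps
    d_clamped = min(max(d, d_min), d_max)
    if d > 0.0:
        # Preserve the direction of the target while fixing its distance.
        x, y = x * d_clamped / d, y * d_clamped / d
    else:
        x, y = d_clamped, 0.0
    # Law of cosines gives the flexion of the second joint (0 = straight finger).
    cos_q2 = (d_clamped ** 2 - ROBOT_L1 ** 2 - ROBOT_L2 ** 2) / (2 * ROBOT_L1 * ROBOT_L2)
    q2 = math.acos(max(-1.0, min(1.0, cos_q2)))
    # Base joint: direction to the target minus the angle contributed by the bent second link.
    q1 = math.atan2(y, x) - math.atan2(ROBOT_L2 * math.sin(q2), ROBOT_L1 + ROBOT_L2 * math.cos(q2))
    return q1, q2


def retarget_trajectory(human_tips: List[Tuple[float, float]], scale: float) -> List[Tuple[float, float]]:
    """Retarget a per-frame human fingertip trajectory into a robot joint-angle trajectory."""
    return [retarget_fingertip(p, scale) for p in human_tips]
```

For example, `retarget_trajectory([(0.03, 0.02), (0.02, 0.03)], scale=1.2)` returns one `(q1, q2)` pair per video frame. The closed-form solve keeps the sketch short and deterministic; a full multi-finger hand would more typically be retargeted by solving all fingers jointly as an optimization with joint limits and temporal smoothness terms.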