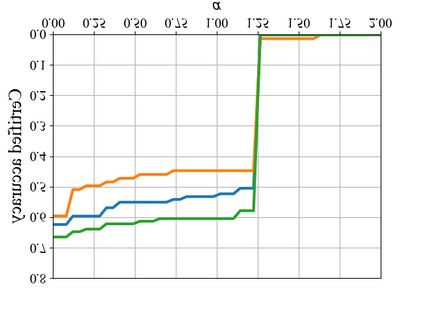

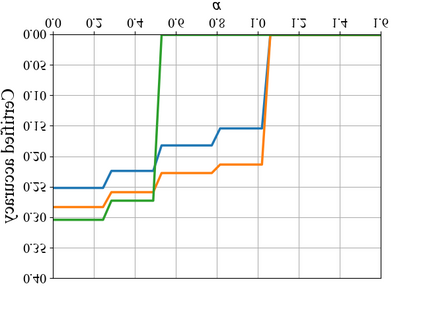

Certified defenses such as randomized smoothing have shown promise for building machine learning systems that are reliably robust to $\ell_p$-norm bounded attacks. However, existing methods are insufficient for, or incapable of, provably defending against semantic transformations, especially those without closed-form expressions (such as defocus blur and pixelate), which are more common in practice and often unrestricted. To fill this gap, we propose generalized randomized smoothing (GSmooth), a unified theoretical framework for certifying robustness against general semantic transformations via a novel dimension augmentation strategy. Under the GSmooth framework, we present a scalable algorithm that uses a surrogate image-to-image network to approximate the complex transformation. The surrogate model provides a powerful tool for studying the properties of semantic transformations and for certifying robustness. Experimental results on several datasets demonstrate that our approach certifies robustness against multiple kinds of semantic transformations and corruptions, which the alternative baselines cannot achieve.
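For context, the standard Gaussian randomized smoothing that GSmooth generalizes certifies an $\ell_2$ radius of $\sigma\,\Phi^{-1}(\underline{p_A})$, where $\underline{p_A}$ is a lower confidence bound on the probability that the base classifier returns the top class under Gaussian noise (Cohen et al., 2019). The sketch below illustrates only that baseline $\ell_p$ certificate. It is not GSmooth and does not handle semantic transformations. The names `certify` and `base_classifier` are hypothetical. To stay standard-library-only, it also substitutes a looser one-sided Hoeffding bound for the Clopper-Pearson bound used in that work.

```python
import math
import random
from collections import Counter
from statistics import NormalDist
from typing import Callable, Optional, Sequence, Tuple

Vector = Sequence[float]


def certify(
    base_classifier: Callable[[Vector], int],  # hypothetical: maps an input vector to a class id
    x: Vector,
    sigma: float,
    n0: int = 100,
    n: int = 10_000,
    alpha: float = 0.001,
    rng: Optional[random.Random] = None,
) -> Tuple[Optional[int], float]:
    """Monte Carlo l2 certificate for Gaussian randomized smoothing (sketch).

    Returns (predicted_class, certified_radius), or (None, 0.0) to abstain.
    """
    if rng is None:
        rng = random.Random(0)

    def sample_counts(num: int) -> Counter:
        # Count base-classifier votes on num noisy copies x + N(0, sigma^2 I).
        counts: Counter = Counter()
        for _ in range(num):
            noisy = [xi + rng.gauss(0.0, sigma) for xi in x]
            counts[base_classifier(noisy)] += 1
        return counts

    # Step 1: guess the top class from a small selection sample.
    top_class = sample_counts(n0).most_common(1)[0][0]

    # Step 2: estimate that class's probability on a fresh, independent sample.
    count_a = sample_counts(n)[top_class]

    # One-sided Hoeffding lower bound, valid with probability >= 1 - alpha
    # (assumption: used here in place of the tighter Clopper-Pearson bound).
    p_lower = count_a / n - math.sqrt(math.log(1.0 / alpha) / (2.0 * n))

    if p_lower <= 0.5:
        return None, 0.0  # cannot certify, so abstain

    # Certified l2 radius: sigma * Phi^{-1}(p_lower); here 0.5 < p_lower < 1.
    return top_class, sigma * NormalDist().inv_cdf(p_lower)
```

For a linear base classifier such as `lambda z: 1 if z[0] > 0 else 0` at `x = [1.0, 0.0]` with `sigma=0.5`, the exact smoothed radius is 1.0, so a sound certificate should return class 1 with a radius somewhat below 1.0. Semantic transformations like defocus blur are not additive $\ell_p$ perturbations, which is why this certificate does not apply to them directly.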
