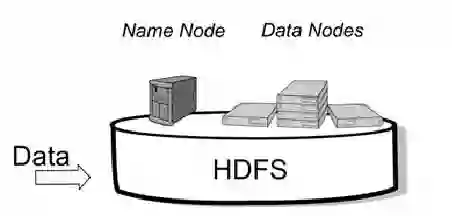

Distributed File Systems (DFSs) are essential for managing vast datasets across multiple servers, offering benefits in scalability, fault tolerance, and data accessibility. This paper presents a comprehensive evaluation of three prominent DFSs, namely Google File System (GFS), Hadoop Distributed File System (HDFS), and MinIO, focusing on their fault tolerance mechanisms and scalability under varying data loads and client demands. The analysis assesses how these systems handle data redundancy, server failures, and client access protocols to ensure reliability in dynamic, large-scale environments. It also examines the impact of system design on performance, particularly in distributed and cloud computing architectures. By comparing the strengths and limitations of each DFS, the paper provides practical insights for selecting the most appropriate system for different enterprise needs, from high-availability storage to big data analytics.
