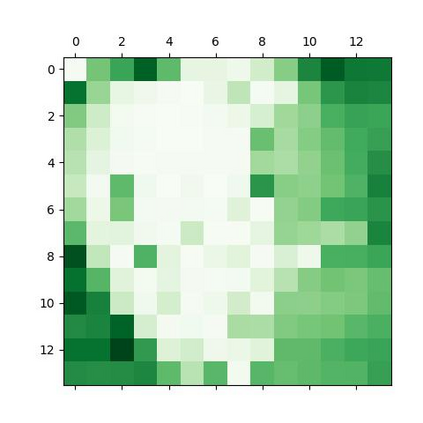

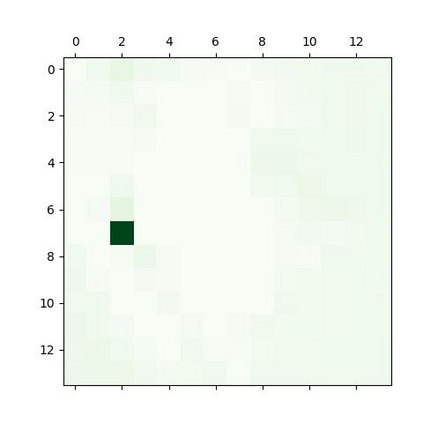

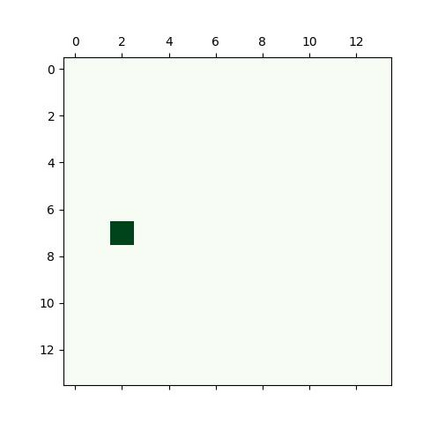

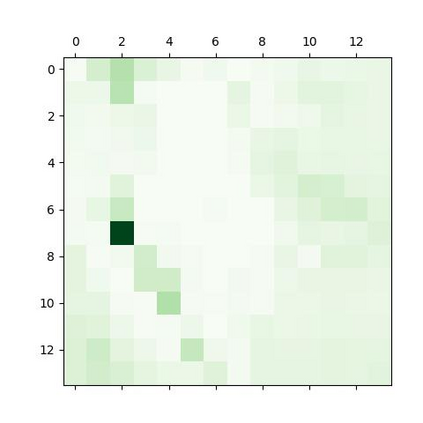

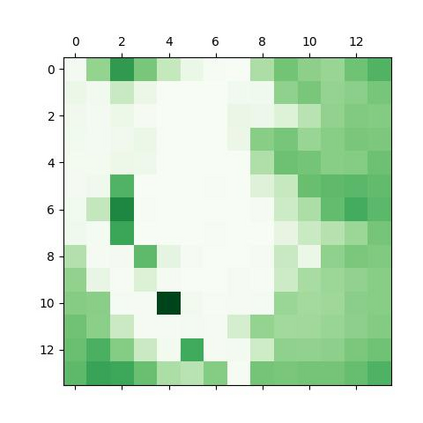

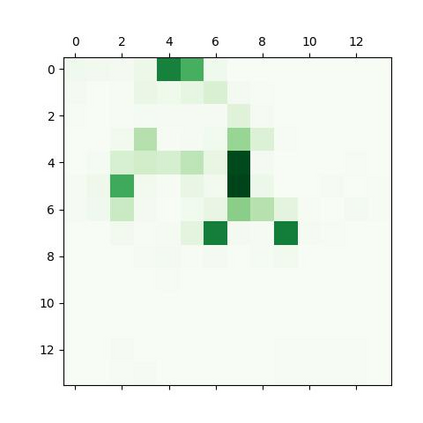

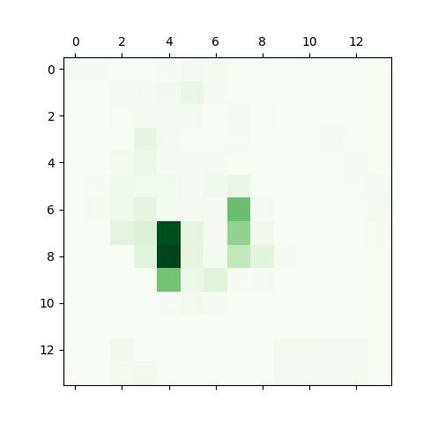

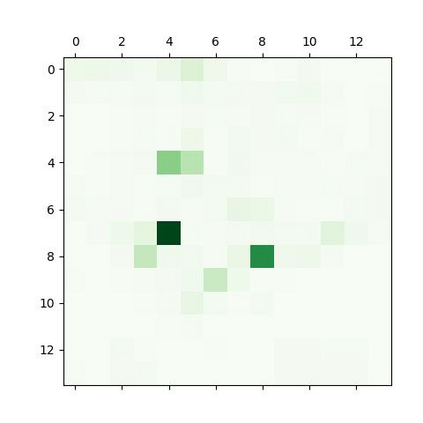

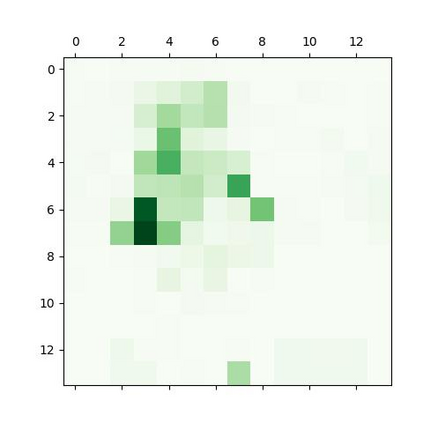

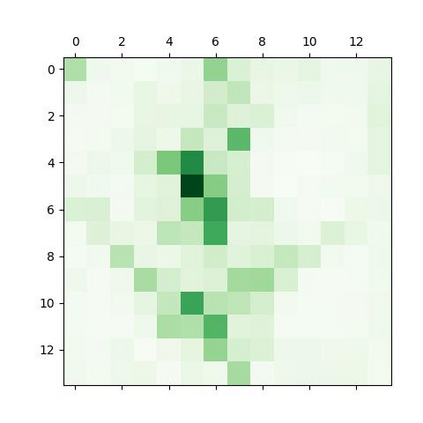

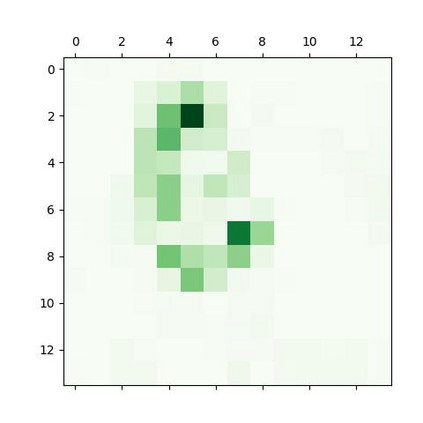

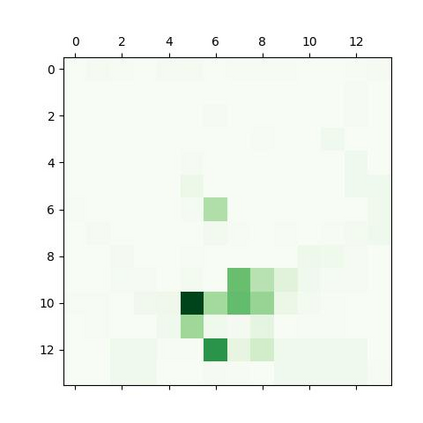

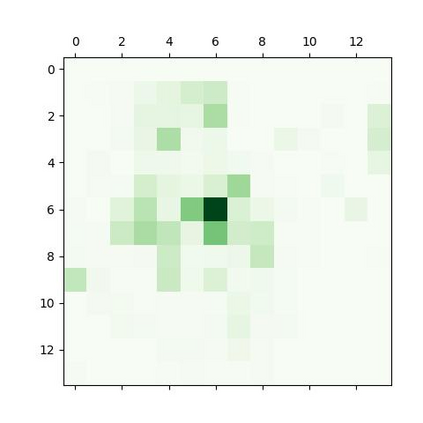

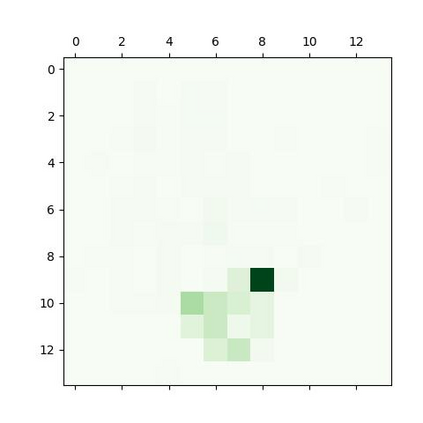

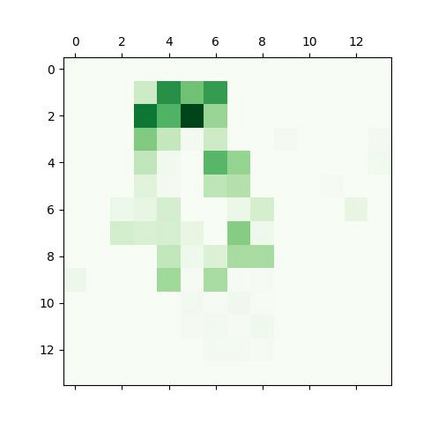

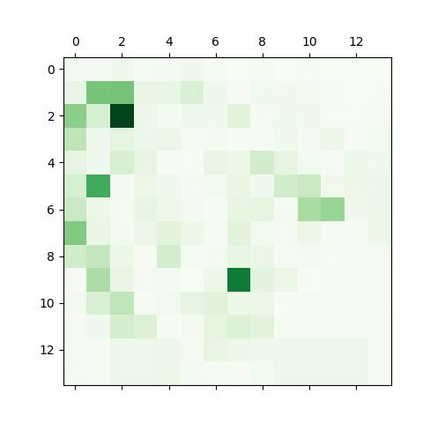

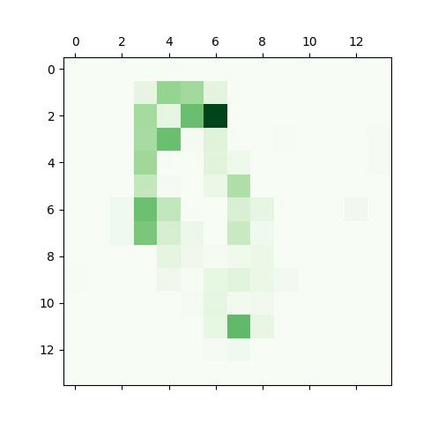

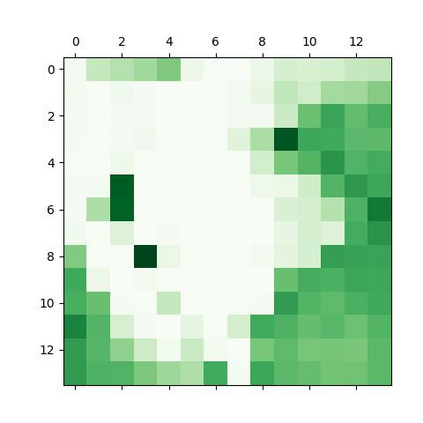

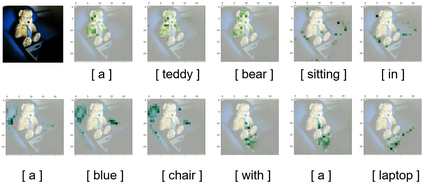

In this paper, we consider the image captioning task from a new sequence-to-sequence prediction perspective and propose CaPtion TransformeR (CPTR) which takes the sequentialized raw images as the input to Transformer. Compared to the "CNN+Transformer" design paradigm, our model can model global context at every encoder layer from the beginning and is totally convolution-free. Extensive experiments demonstrate the effectiveness of the proposed model and we surpass the conventional "CNN+Transformer" methods on the MSCOCO dataset. Besides, we provide detailed visualizations of the self-attention between patches in the encoder and the "words-to-patches" attention in the decoder thanks to the full Transformer architecture.

翻译:在本文中,我们从新的序列到序列的预测角度来考虑图像说明任务,并提议CaPtion 变换R(CPTR), 将序列原始图像作为输入变换器的输入。 与“ CNN+ Transforn't” 设计范式相比, 我们的模型可以从一开始就在每一个编码层建模全球背景, 并且完全没有进化。 广泛的实验显示了拟议模型的有效性, 并且我们超越了在 MCCO 数据集中的常规的“ CNN+ Transformex” 方法。 此外, 我们提供了详细图像化了编码器中的补丁和解码器中的“ 字对字” 之间的自控关注, 这是由于完整的变换结构。