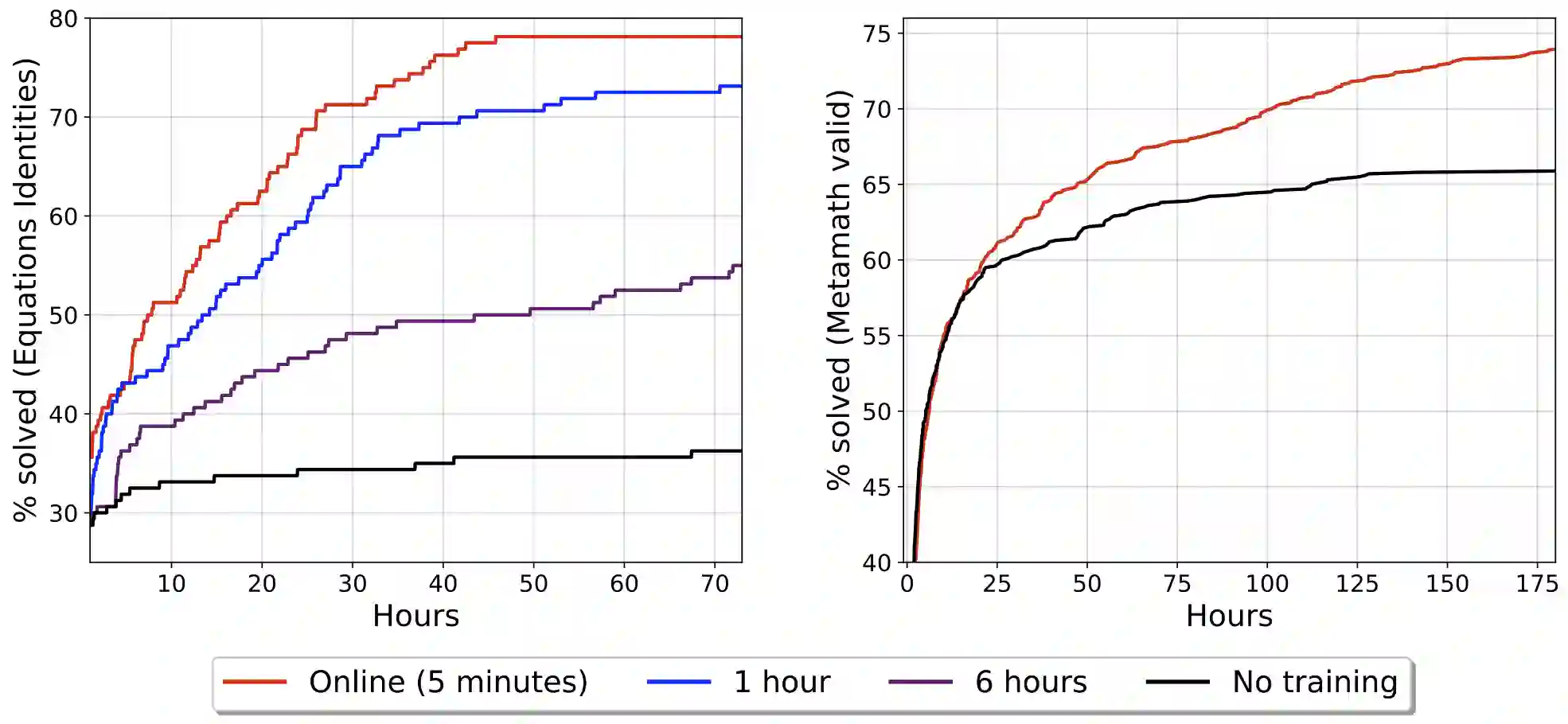

We propose an online training procedure for a transformer-based automated theorem prover. Our approach leverages a new search algorithm, HyperTree Proof Search (HTPS), inspired by the recent success of AlphaZero. Our model learns from previous proof searches through online training, allowing it to generalize to domains far from the training distribution. We report detailed ablations of our pipeline's main components by studying performance on three environments of increasing complexity. In particular, we show that with HTPS alone, a model trained on annotated proofs manages to prove 65.4% of a held-out set of Metamath theorems, significantly outperforming the previous state of the art of 56.5% by GPT-f. Online training on these unproved theorems increases accuracy to 82.6%. With a similar computational budget, we improve the state of the art on the Lean-based miniF2F-curriculum dataset from 31% to 42% proving accuracy.

翻译:我们为基于变压器的自动定理验证器提议了一个在线培训程序。 我们的方法利用了一个新的搜索算法,即超轨校对搜索(HTPS),这是受阿尔法零星最近成功启发的启发。 我们的模型通过在线培训从以往的校对搜索中学习,使得它能够向远离培训分布的领域进行普及。 我们通过研究三个日益复杂的环境的性能来报告我们输油管的主要部件的详细分类。 特别是,我们用HTPS来显示,一个仅用附加说明的证明来培训的模型就能够证明一个持有的Metamath定理数据集的65.4%,大大超过GPT-f先前56.5%的艺术状态。 关于这些未规范定理的在线培训的精度提高到82.6%。 我们用类似的计算预算,将基于Lean的小型F2F-曲线数据集的艺术状况从31%提高到42%,证明准确性能。