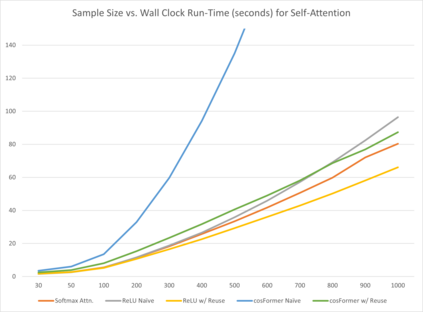

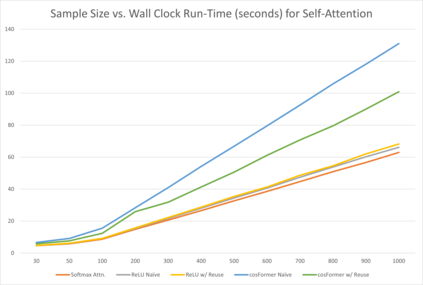

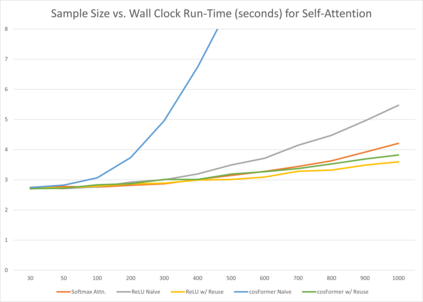

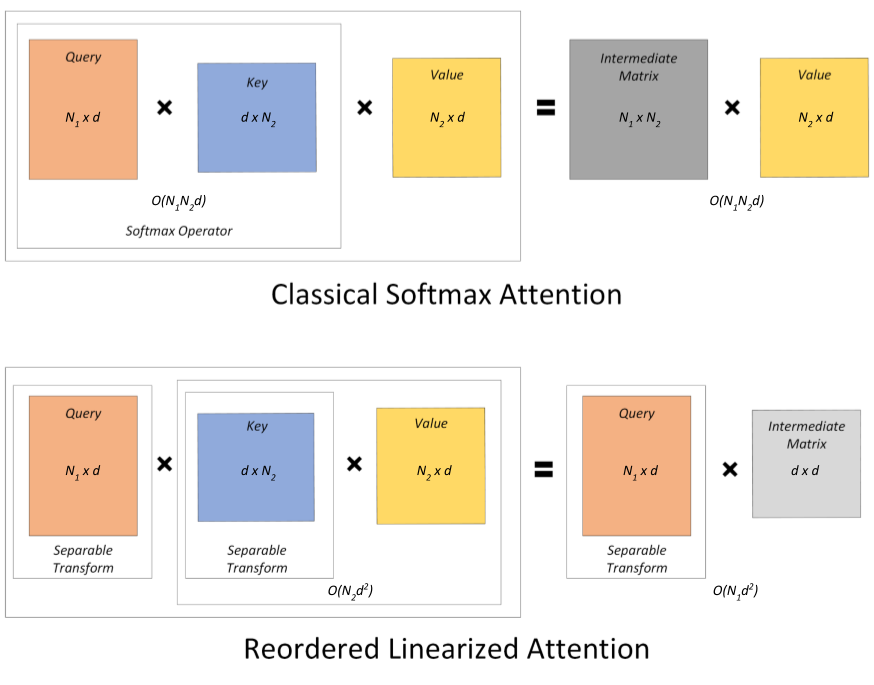

Various natural language processing (NLP) tasks necessitate models that are efficient and small based on their ultimate application at the edge or in other resource-constrained environments. While prior research has reduced the size of these models, increasing computational efficiency without considerable performance impacts remains difficult, especially for autoregressive tasks. This paper proposes \textit{modular linearized attention (MLA)}, which combines multiple efficient attention mechanisms, including cosFormer \cite{zhen2022cosformer}, to maximize inference quality while achieving notable speedups. We validate this approach on several autoregressive NLP tasks, including speech-to-text neural machine translation (S2T NMT), speech-to-text simultaneous translation (SimulST), and autoregressive text-to-spectrogram, noting efficiency gains on TTS and competitive performance for NMT and SimulST during training and inference.

翻译:各种自然语言处理(NLP)任务都需要高效且适用于边缘计算或其他资源受限环境的模型。尽管早期的研究已经减小了这些模型的大小,但在不对性能产生明显影响的情况下提高计算效率仍然很难,特别是对于自回归任务。本文提出了“模块化线性化注意力(MLA)”方法,它结合了多个高效的注意力机制,包括 cosFormer \cite{zhen2022cosformer},以最大化推理质量同时实现显著的加速。我们在多个基于自回归的 NLP 任务上验证了这种方法,包括语音到文本的神经机器翻译(S2T NMT)、语音到文本的同声传译(SimulST)和自回归文本到语谱图,注意到在 TTS 上的效率提升以及在训练和推理过程中 NMT 和 SimulST 的竞争性能。