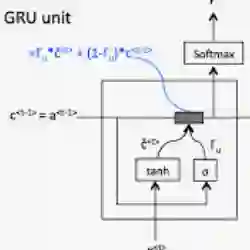

Automatic generation of video captions is a fundamental challenge in computer vision. Recent techniques typically employ a combination of Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) for video captioning. These methods mainly focus on tailoring sequence learning through RNNs for better caption generation, whereas off-the-shelf visual features are borrowed from CNNs. We argue that careful design of visual features for this task is equally important, and present a visual feature encoding technique to generate semantically rich captions using Gated Recurrent Units (GRUs). Our method embeds rich temporal dynamics in visual features by hierarchically applying Short Fourier Transform to CNN features of the whole video. It additionally derives high-level semantics from an object detector to enrich the representation with spatial dynamics of the detected objects. The final representation is projected to a compact space and fed to a language model. By learning a relatively simple language model comprising two GRU layers, we establish a new state of the art on the MSVD and MSR-VTT datasets for the METEOR and ROUGE_L metrics.
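To make the idea of hierarchically applying a Fourier transform to per-frame CNN features more concrete, the following is a minimal NumPy sketch rather than the authors' implementation. It assumes the features arrive as a `(frames, dims)` array, that the hierarchy splits the video into the whole clip, halves, and quarters, and that only the magnitudes of the first few frequency coefficients are kept per segment. The function name `hierarchical_fourier_encoding` and the parameters `levels` and `num_coeffs` are hypothetical, and a plain discrete Fourier transform per segment stands in for the transform named in the abstract.

```python
import numpy as np


def hierarchical_fourier_encoding(features, levels=3, num_coeffs=4):
    """Encode a (frames, dims) feature sequence as a fixed-length vector.

    Assumed scheme (not taken from the source): at level k the sequence is
    split into 2**k temporal segments, each feature dimension of each segment
    is Fourier transformed along time, and the magnitudes of the first
    `num_coeffs` coefficients are kept.
    """
    features = np.asarray(features, dtype=np.float64)
    if features.ndim != 2:
        raise ValueError("features must have shape (frames, dims)")
    num_frames = features.shape[0]
    if num_frames < 2 ** (levels - 1):
        raise ValueError("too few frames for the requested number of levels")

    encoded = []
    for level in range(levels):
        # Level 0 is the whole video, level 1 its halves, level 2 its quarters.
        for segment in np.array_split(features, 2 ** level, axis=0):
            # Zero-pad short segments so num_coeffs frequency bins always exist.
            n = max(segment.shape[0], num_coeffs)
            spectrum = np.fft.fft(segment, n=n, axis=0)
            # Keep low-frequency magnitudes, grouped by feature dimension.
            encoded.append(np.abs(spectrum[:num_coeffs]).T.ravel())
    return np.concatenate(encoded)


# Usage: 40 frames of 8-dimensional features (random stand-ins for CNN output).
rng = np.random.default_rng(0)
encoding = hierarchical_fourier_encoding(rng.normal(size=(40, 8)))
print(encoding.shape)  # (224,) = 8 dims * 4 coefficients * 7 segments
```

Because the output length depends only on the feature dimension, `levels`, and `num_coeffs`, videos of different durations map to vectors of the same size, which is what lets a fixed projection layer and a compact language model consume them.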
