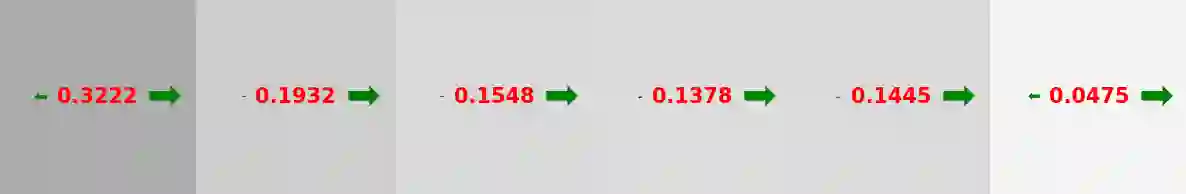

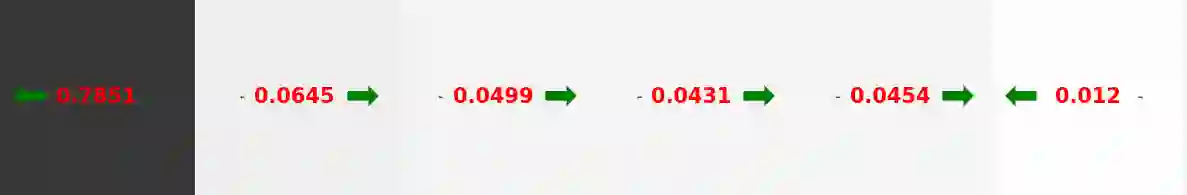

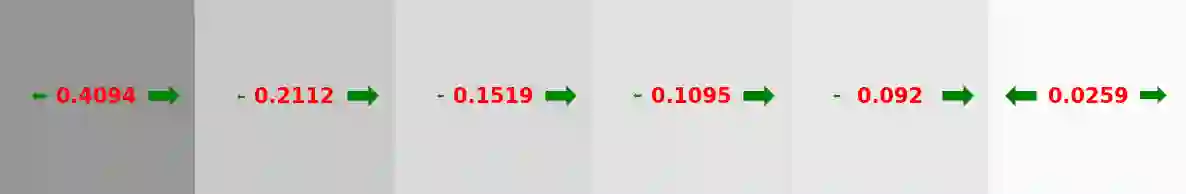

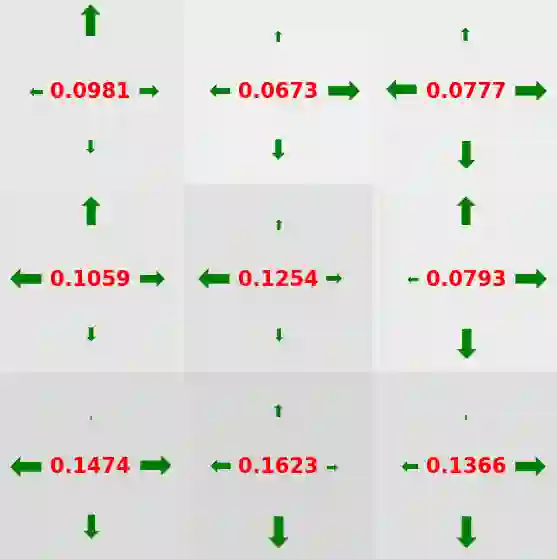

In reinforcement learning, we encode the potential behaviors of an agent interacting with an environment into an infinite set of policies, the policy space, typically represented by a family of parametric functions. Dealing with such a policy space is a hefty challenge, which often causes sample and computation inefficiencies. However, we argue that only a limited number of policies are actually relevant once we also account for the structure of the environment and of the policy parameterization, as many policies induce very similar interactions, i.e., state-action distributions. In this paper, we seek a reward-free compression of the policy space into a finite set of representative policies such that, given any policy $\pi$, the minimum Rényi divergence between the state-action distributions of the representative policies and the state-action distribution of $\pi$ is bounded. We show that this compression of the policy space can be formulated as a set cover problem, and that it is inherently NP-hard. Nonetheless, we propose a game-theoretic reformulation for which a locally optimal solution can be found efficiently by iteratively stretching the compressed space to cover an adversarial policy. Finally, we provide an empirical evaluation to illustrate the compression procedure in simple domains, and its ripple effects in reinforcement learning.
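To make the set cover view concrete, the following is a minimal sketch on a toy problem, not the paper's method. It assumes a finite sample of policies, each already summarized by a discrete state-action distribution, and it uses the standard greedy set cover heuristic in place of the game-theoretic procedure described in the abstract. The function names `renyi_divergence` and `greedy_cover`, the choice $\alpha = 2$, the direction of the divergence, and the threshold `radius` are all illustrative assumptions.

```python
import numpy as np


def renyi_divergence(p, q, alpha=2.0, eps=1e-12):
    """Renyi divergence D_alpha(p || q) between two discrete distributions.

    Assumes alpha > 0 and alpha != 1. q is clipped away from zero to
    avoid division by zero (a numerical convenience, not part of the
    definition).
    """
    p = np.asarray(p, dtype=float)
    q = np.clip(np.asarray(q, dtype=float), eps, None)
    return float(np.log(np.sum(p**alpha * q ** (1.0 - alpha))) / (alpha - 1.0))


def greedy_cover(dists, radius, alpha=2.0):
    """Pick representatives so every distribution is within `radius` of one.

    dists: list of state-action distributions, one per sampled policy.
    Representative i is assumed to cover policy j when
    D_alpha(d_j || d_i) <= radius (the direction is an assumption).
    Returns the indices of the chosen representatives.
    """
    n = len(dists)
    div = np.array(
        [[renyi_divergence(dists[j], dists[i], alpha) for j in range(n)]
         for i in range(n)]
    )
    # Small tolerance so that each policy always covers itself.
    covers = div <= radius + 1e-9
    uncovered = np.ones(n, dtype=bool)
    chosen = []
    while uncovered.any():
        # Greedy step: take the candidate covering the most uncovered policies.
        gains = (covers & uncovered).sum(axis=1)
        best = int(np.argmax(gains))
        chosen.append(best)
        uncovered &= ~covers[best]
    return chosen


# Toy data: two nearly identical distributions and one very different one.
toy_dists = [
    np.array([0.50, 0.50]),
    np.array([0.49, 0.51]),
    np.array([0.99, 0.01]),
]
representatives = greedy_cover(toy_dists, radius=0.1)
```

In this toy setting the two near-uniform distributions end up sharing a representative, while the skewed one needs its own. The greedy heuristic only applies to a finite candidate set; the abstract's point is that the true policy space is infinite, which is why a different, adversarial formulation is proposed there.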
