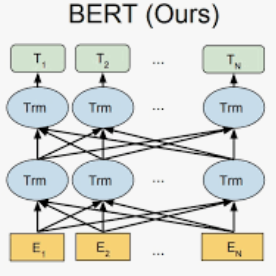

Writing comments, natural language descriptions of source code, is standard practice among software developers. By communicating important aspects of the code, such as its functionality and usage, comments help with software project maintenance. However, when code is modified without a corresponding update to its comment, the comment and code can become inconsistent, which opens the door to developer confusion and bugs. In this paper, we propose two models, based on BERT (Devlin et al., 2019) and Longformer (Beltagy et al., 2020), that detect such inconsistencies by framing the task as natural language inference (NLI). Through an evaluation on a previously established corpus of comment-method pairs, conducted both during and after code changes, we demonstrate that our models outperform multiple baselines and yield results comparable to those of state-of-the-art models that exclude linguistic and lexical features. We further discuss ideas for future research on using pretrained language models for both inconsistency detection and automatic comment updating.
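
In an NLI framing, each comment-method pair is treated like a sentence pair that a pretrained encoder reads jointly before a classifier labels it consistent or inconsistent. The sketch below is not the authors' preprocessing. It only illustrates, under stated assumptions, how a pair might be packed into a BERT-style `[CLS] comment [SEP] code [SEP]` sequence and how the input length limit (512 tokens for BERT-base, 4,096 for the public Longformer-base checkpoint) decides how much of a long method body the model can see. The function `build_pair_input`, the comment-first ordering, and the whitespace tokenization (a stand-in for a real subword tokenizer) are all hypothetical. Longformer's RoBERTa-derived tokenizer also uses different separator tokens than BERT.

```python
from typing import List, Tuple

# Typical maximum input lengths (in tokens) of the public checkpoints:
# BERT-base accepts 512, Longformer-base accepts 4,096.
BERT_MAX_LEN = 512
LONGFORMER_MAX_LEN = 4096


def build_pair_input(comment: str, code: str, max_len: int) -> Tuple[List[str], List[int]]:
    """Hypothetical helper: pack a comment and a method body into a
    BERT-style sentence pair.

    Returns the token sequence [CLS] comment [SEP] code [SEP] together with
    segment ids (0 for the first segment, 1 for the second).
    Whitespace splitting stands in for a real subword tokenizer.
    """
    if max_len < 3:
        raise ValueError("max_len must leave room for [CLS] and two [SEP] tokens")
    budget = max_len - 3  # reserve room for [CLS] and the two [SEP] markers
    comment_toks = comment.split()[:budget]
    # Whatever budget the comment leaves over goes to the code, so long
    # method bodies are the part that gets truncated.
    code_toks = code.split()[: budget - len(comment_toks)]
    tokens = ["[CLS]", *comment_toks, "[SEP]", *code_toks, "[SEP]"]
    segment_ids = [0] * (len(comment_toks) + 2) + [1] * (len(code_toks) + 1)
    return tokens, segment_ids


if __name__ == "__main__":
    comment = "Returns the number of active users ."
    code = " ".join(f"tok{i}" for i in range(1000))  # a long, synthetic method body

    for name, limit in [("BERT", BERT_MAX_LEN), ("Longformer", LONGFORMER_MAX_LEN)]:
        tokens, segments = build_pair_input(comment, code, limit)
        kept_code = segments.count(1) - 1  # code tokens, excluding the final [SEP]
        print(name, len(tokens), kept_code)
    # BERT 512 502
    # Longformer 1010 1000
```

With the 512-token limit, only about half of this synthetic 1,000-token method survives truncation, while the 4,096-token limit keeps all of it. That illustrates why a long-input model such as Longformer is a natural candidate when method bodies are long.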