CIKM 2021 | Deep Retrieval: A Close Reading of ByteDance's Deep Retrieval Paper

©Author | 杰尼小子

Affiliation | ByteDance

Research area | Recommendation algorithms

Motivation

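This is a ByteDance paper published at CIKM 2021. The work has been deployed in many ByteDance products with very good results. Reading it through, however, I found quite a few places confusing, possibly because of the paper's limited length. Notably, the paper was previously submitted to ICLR and has an open review, so at the end I also summarize the reviewers' questions and add some thoughts of my own.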

Paper title:

To the best of our knowledge, DR is among the first non-ANN algorithms successfully deployed at the scale of hundreds of millions of items for industrial recommendation systems.

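- ANN-based retrieval models are usually two-tower models: the User Tower and the Item Tower are kept separate, so user-item interaction is shallow and happens only in the final inner product.

- ANN and MIPS algorithms such as LSH, IVF-PQ, and HNSW are designed to approximate top-K search or maximum inner product search; they do not directly optimize the user-item training samples. In addition, the retrieval model and the index are built separately, so the two can easily fall out of sync, especially when new items arrive.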
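To make "interaction only in the final inner product" concrete, here is a minimal sketch of two-tower scoring with exact (brute-force) top-K search, which is the search that LSH, IVF-PQ, and HNSW approximate. The tower functions are hypothetical placeholders standing in for real MLPs, not any production model.

```python
import heapq
from typing import Dict, List

Vector = List[float]


def user_tower(user_features: Vector) -> Vector:
    # Hypothetical user tower: a fixed linear map standing in for an MLP.
    return [2.0 * f for f in user_features]


def item_tower(item_features: Vector) -> Vector:
    # Hypothetical item tower, computed without looking at any user.
    return [f + 0.5 for f in item_features]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def brute_force_top_k(user_features: Vector,
                      items: Dict[str, Vector], k: int) -> List[str]:
    """Exact top-k items by inner product; ANN/MIPS indexes approximate this."""
    u = user_tower(user_features)
    # Item embeddings can be precomputed and indexed offline, because the only
    # user-item interaction is the dot product below.
    item_emb = {name: item_tower(f) for name, f in items.items()}
    return heapq.nlargest(k, item_emb, key=lambda name: dot(u, item_emb[name]))
```

For example, `brute_force_top_k([1.0, 0.5], {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}, k=2)` returns `["c", "a"]`. Because the item side never sees the user, a separate index has to be built over `item_emb`, which is where the model/index version mismatch described above comes from.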

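To address the problems of inner-product models, DR learns directly from the training samples end to end; to address the problems of tree models, DR lets an item belong to multiple paths/clusters. However, the DR architecture adds quite a few components, and the paper neither runs ablation studies to isolate the contribution of each part nor shows experimentally how these problems are solved. It only offers "intuitive" arguments for why each design choice was made. Had the authors added such an experimental analysis, I think this would have been an excellent piece of work, since its real-world effectiveness is well established.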

Model Framework

2.1 Overview

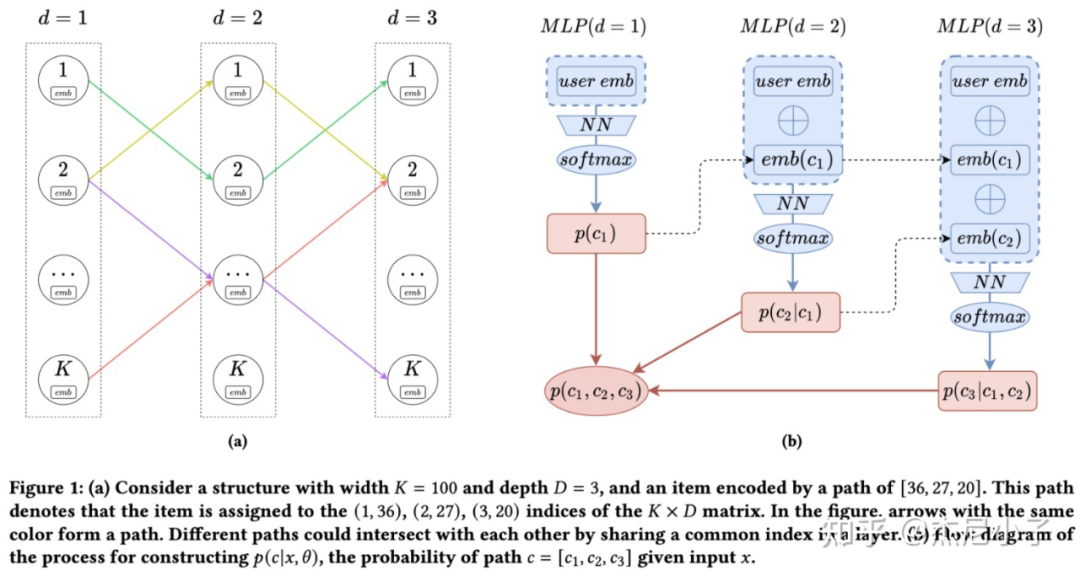

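The rerank subsection is titled "Multi-task Learning and Reranking with Softmax Models", and I honestly don't see why it counts as multi-task. Also, is the reason for capping DR's volume at the rerank stage, rather than filtering at the pre-ranking stage, that DR usually returns a large number of items, which would crowd out the candidates from other retrieval channels during pre-ranking and end up dominating what the pre-ranking model learns?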

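- DR's input uses only user information, not item information. Like YouTube DNN, DR's training set contains only positive samples and no negative samples.

- DR has to learn not only the parameters of each network layer but also how to map each item into one of the clusters/paths (the item-to-path mapping).

Yet the introduction argues that inner-product retrieval models have little interaction between user and item, and then DR does not use item information at all...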

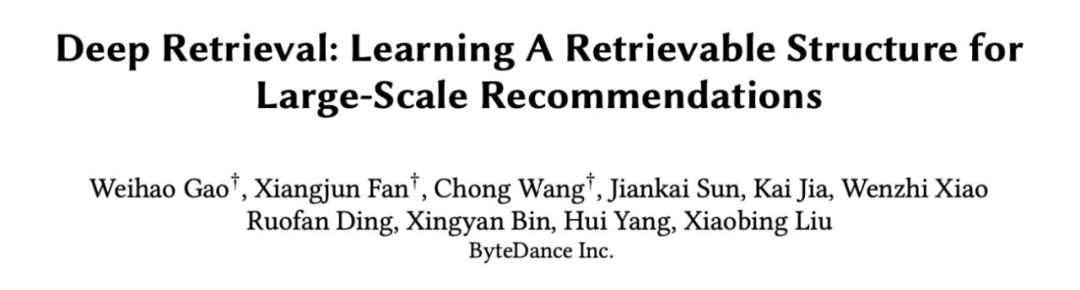

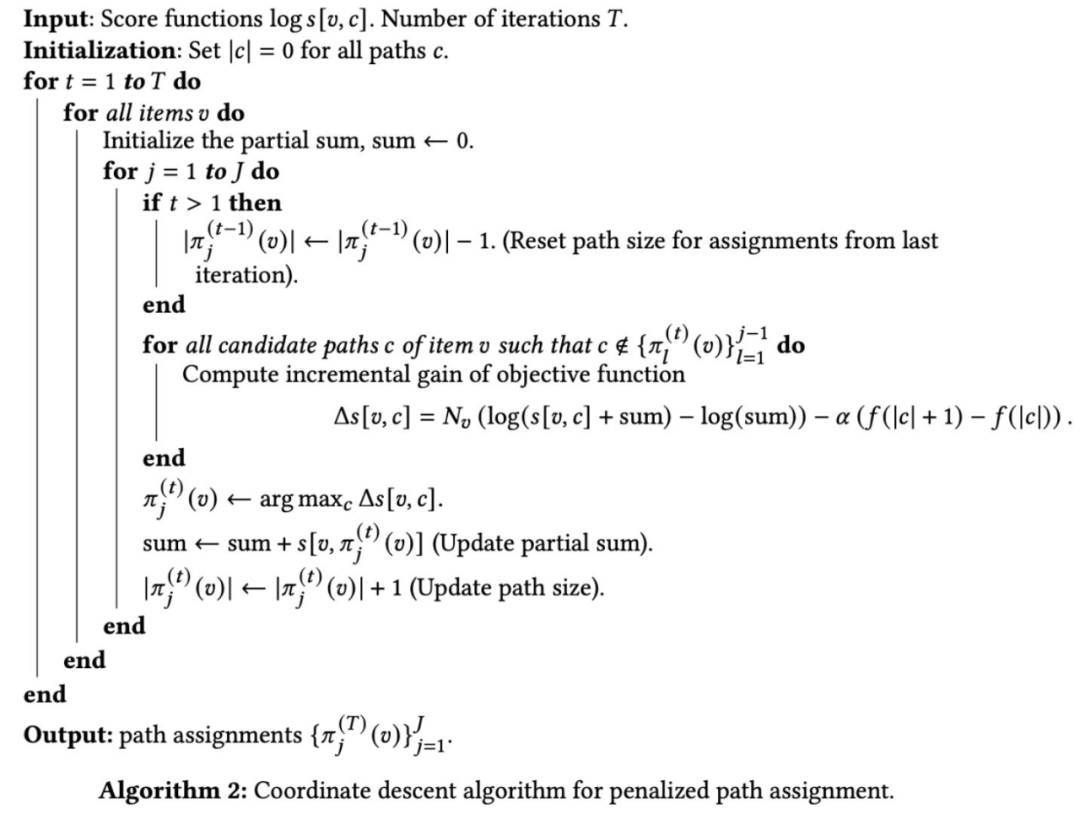

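- There are $D$ MLP layers in total, and each layer has $K$ nodes.

- $\mathcal{V}$ denotes the labels of all items.

- Item-to-path mapping $\pi$: describes how an item is mapped to one or more of the $K^D$ paths.

- $(x, y)$ denotes a positive sample between user $x$ and item $y$ (e.g., a click, conversion, or like).

- $\pi(y) = (c_1, \dots, c_D)$ maps item $y$ to a path, where each $c_d \in \{1, \dots, K\}$.

- The probability of a path is the product of the node probabilities at each of the $D$ layers: $p(c \mid x, \theta) = \prod_{d=1}^{D} p(c_d \mid x, c_1, \dots, c_{d-1}, \theta)$, where $\theta$ denotes the network parameters.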
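A minimal sketch of this product, assuming each layer is some function (the hypothetical `LayerFn` below) that maps the user vector and the path prefix $(c_1, \dots, c_{d-1})$ to $K$ logits, followed by a softmax. In the paper each layer is an MLP; the exact layer inputs here are an illustrative assumption, and nodes are indexed from 0 rather than 1.

```python
import math
from typing import Callable, List, Sequence

# Hypothetical per-layer scorer: (user vector, path prefix) -> K logits.
LayerFn = Callable[[Sequence[float], Sequence[int]], List[float]]


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def path_probability(x: Sequence[float], path: Sequence[int],
                     layers: Sequence[LayerFn]) -> float:
    """p(c | x) = prod_d p(c_d | x, c_1, ..., c_{d-1}), nodes indexed from 0."""
    assert len(path) == len(layers), "path length must equal the depth D"
    prob = 1.0
    for d, layer in enumerate(layers):
        # Layer d conditions on the nodes already chosen at layers 0..d-1.
        probs_d = softmax(layer(x, path[:d]))
        prob *= probs_d[path[d]]
    return prob
```

Since every layer outputs a normalized distribution over its $K$ nodes, the probabilities of all $K^D$ paths sum to 1, so the model is effectively a softmax over paths that never has to enumerate them.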

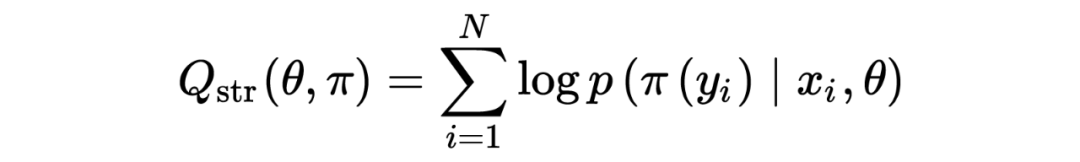

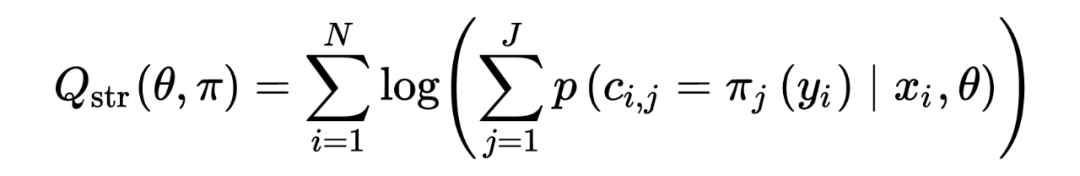

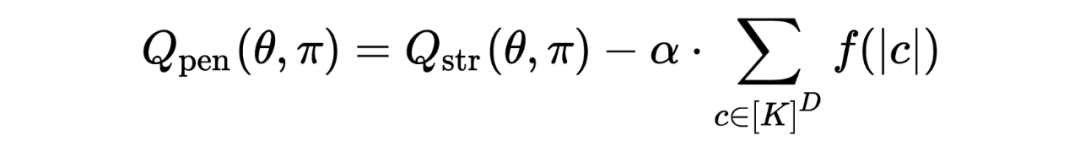

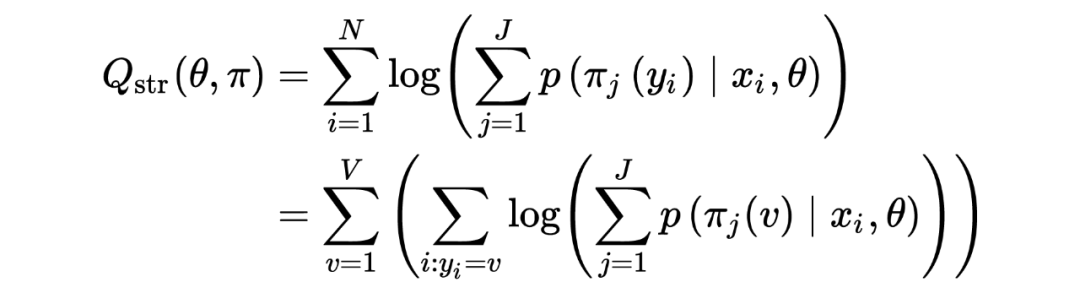

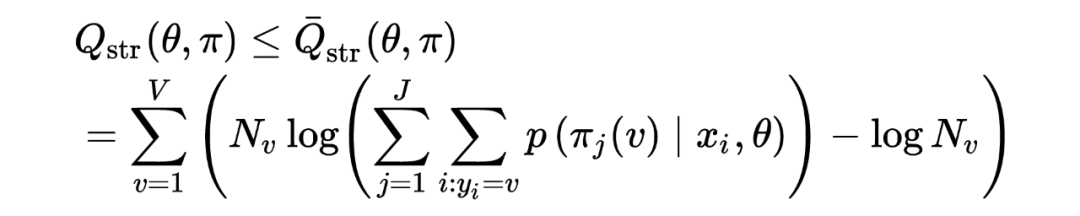

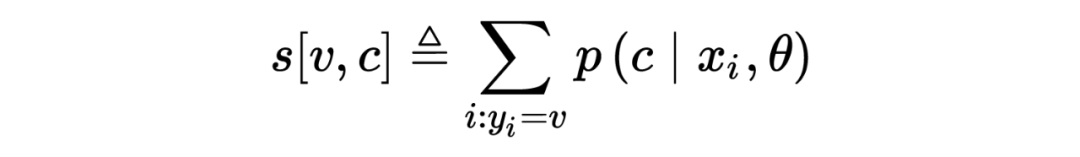

2.2 Learning the Network Parameters

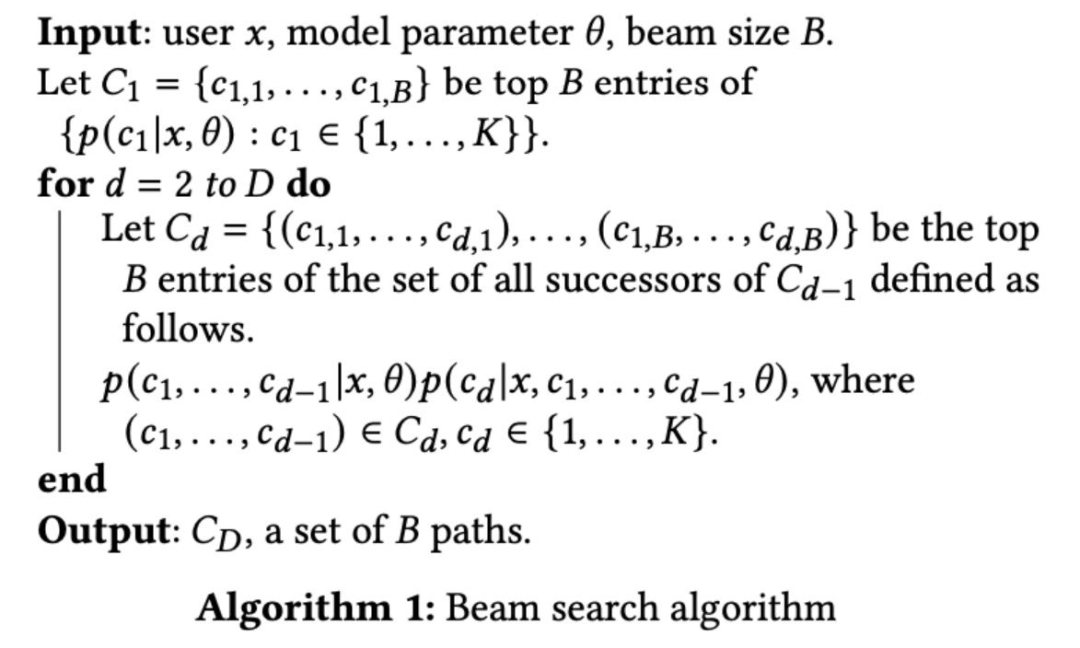
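My reading of the paper is that, with the mapping $\pi$ fixed, $\theta$ is learned by maximizing the structure objective, i.e. the log-likelihood of the paths of the positive samples. With $J$ paths per item it reads $Q(\theta, \pi) = \sum_{i=1}^{N} \log \sum_{j=1}^{J} p(\pi_j(y_i) \mid x_i, \theta)$. The sketch below computes the negative of this objective (a loss to minimize) over a batch; `path_prob` is a hypothetical stand-in for the network's path probability, and the data structures are illustrative.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Path = Tuple[int, ...]


def structure_loss(samples: Sequence[Tuple[str, str]],
                   item_to_paths: Dict[str, List[Path]],
                   path_prob: Callable[[str, Path], float]) -> float:
    """Negative structure objective: -sum_i log sum_j p(pi_j(y_i) | x_i)."""
    total = 0.0
    for user, item in samples:
        # Multi-path: the item's likelihood is the sum over its J assigned paths.
        likelihood = sum(path_prob(user, c) for c in item_to_paths[item])
        total -= math.log(likelihood)
    return total
```

With a uniform model over four paths ($p = 0.25$ each) and an item assigned to two paths, one sample contributes $-\log 0.5 = \log 2$. Only positive pairs appear in the loss; the softmax normalization at each layer is what pushes probability away from other paths.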

2.3 Beam Search for Inference
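At inference time DR cannot score all $K^D$ paths, so it keeps only the $B$ most probable path prefixes layer by layer. A minimal sketch, assuming hypothetical layer functions that already return normalized probabilities over the $K$ nodes; looking up the items assigned to the final paths (some path-to-item index) is not shown.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical: (user vector, path prefix) -> probabilities over the K nodes.
LayerProbFn = Callable[[Sequence[float], Tuple[int, ...]], List[float]]


def beam_search(x: Sequence[float], layers: Sequence[LayerProbFn],
                beam_size: int) -> List[Tuple[Tuple[int, ...], float]]:
    """Keep the beam_size most probable prefixes at every layer.

    Returns (path, log_prob) pairs sorted by decreasing probability.
    """
    beams: List[Tuple[Tuple[int, ...], float]] = [((), 0.0)]
    for layer in layers:
        candidates = []
        for prefix, log_p in beams:
            # Expand every surviving prefix by each of the K nodes.
            for node, p in enumerate(layer(x, prefix)):
                if p > 0.0:
                    candidates.append((prefix + (node,), log_p + math.log(p)))
        candidates.sort(key=lambda pair: pair[1], reverse=True)
        beams = candidates[:beam_size]
    return beams
```

Each layer evaluates at most $B \cdot K$ nodes, so the cost is roughly $D \cdot B \cdot K$ instead of $K^D$. With `beam_size=1` this degenerates to greedy decoding, which can miss the most probable full path when an early choice looks good locally.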

2.4 item-to-path learning

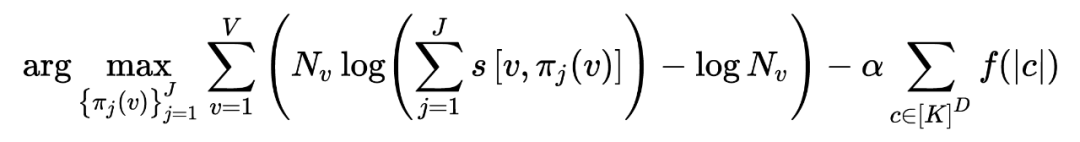

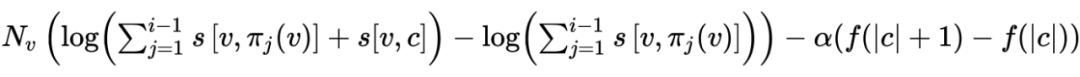

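Now for the centerpiece: deciding which paths each item goes to. Unlike the network parameters, this mapping is discrete and cannot be optimized by gradient descent, so the authors optimize the two alternately in an EM style. The item-to-path mapping π is first randomly initialized, and then:

- E-step: fix the mapping π and optimize the network parameters θ by maximizing the structure objective.

- M-step: likewise, update π by maximizing the structure objective.

Normally a function is maximized by maximizing a lower bound on it (or minimized by minimizing an upper bound). Here it is the other way around: an upper bound is maximized. The authors point out that this does not guarantee the objective actually improves, but it worked in their experiments, so they optimize it this way.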
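One way to see where the upper bound comes from: for item $v$ with $N_v$ positive samples, concavity of $\log$ gives $\sum_{i: y_i = v} \log \sum_j p(\pi_j(v) \mid x_i) \le N_v \log \big( \tfrac{1}{N_v} \sum_j s[v, \pi_j(v)] \big)$, where $s[v, c] = \sum_{i: y_i = v} p(c \mid x_i)$. Maximizing the right-hand side over $J$ distinct paths just means picking the $J$ paths with the largest $s[v, c]$. The sketch below does exactly that; the candidate-path function (e.g. each user's beam-search results) and `path_prob` are hypothetical, and any extra regularization the paper may apply to the mapping is omitted.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Sequence, Tuple

Path = Tuple[int, ...]


def update_item_paths(samples: Sequence[Tuple[str, str]],
                      candidate_paths: Callable[[str], Iterable[Path]],
                      path_prob: Callable[[str, Path], float],
                      num_paths: int) -> Dict[str, List[Path]]:
    """Assign each item the num_paths paths with the largest aggregated score.

    score[item][c] = sum over the item's positive users x of p(c | x),
    restricted to the (distinct) candidate paths returned for each user.
    """
    score: Dict[str, Dict[Path, float]] = defaultdict(lambda: defaultdict(float))
    for user, item in samples:
        for c in candidate_paths(user):
            score[item][c] += path_prob(user, c)
    # Keeping the top-J paths per item maximizes the upper bound above.
    return {
        item: sorted(paths, key=paths.__getitem__, reverse=True)[:num_paths]
        for item, paths in score.items()
    }
```

Note that this step never looks at item features; an item's paths are determined entirely by which users interacted with it, which matches the earlier observation that DR uses no item information.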

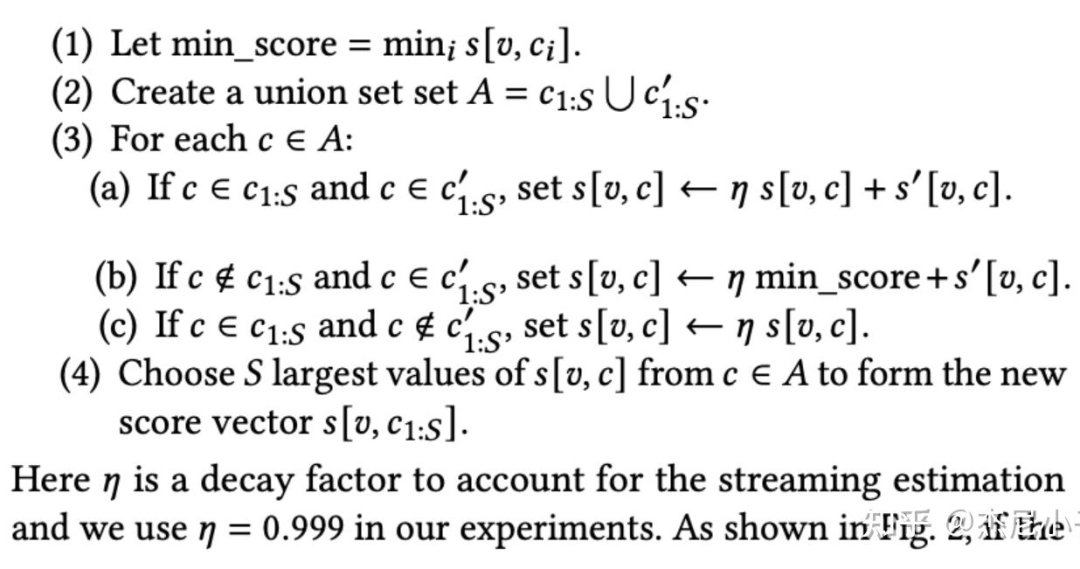

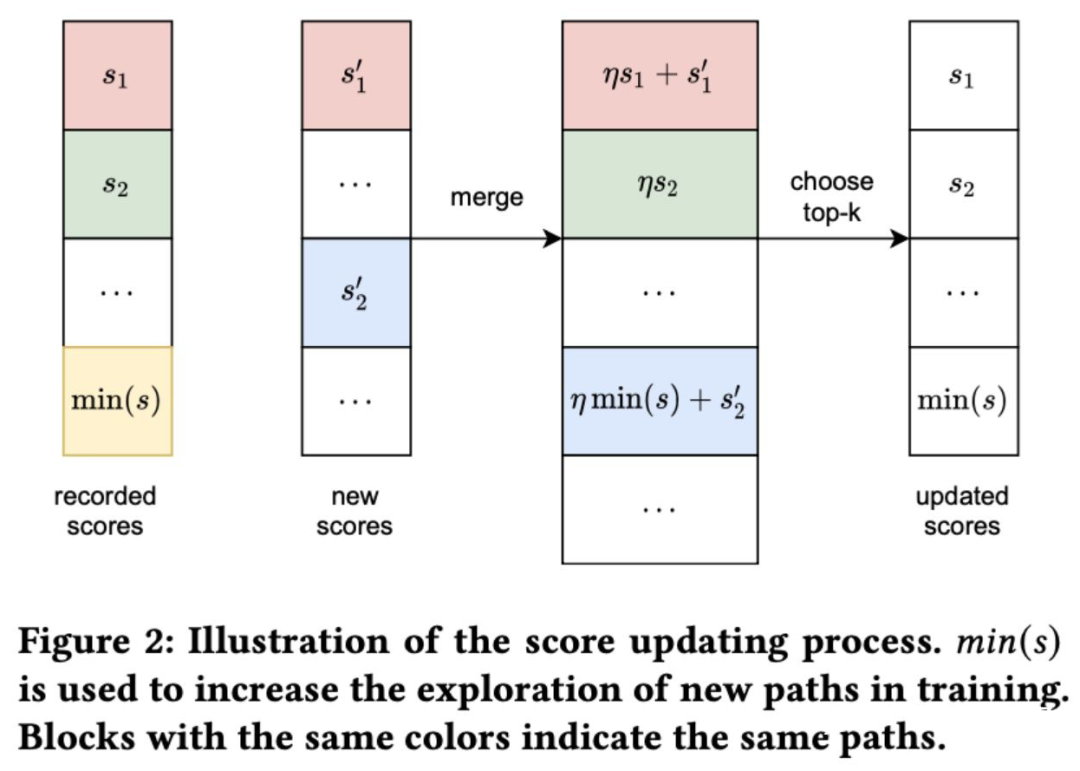

2.5 Merging the Scores from the Two Data Streams

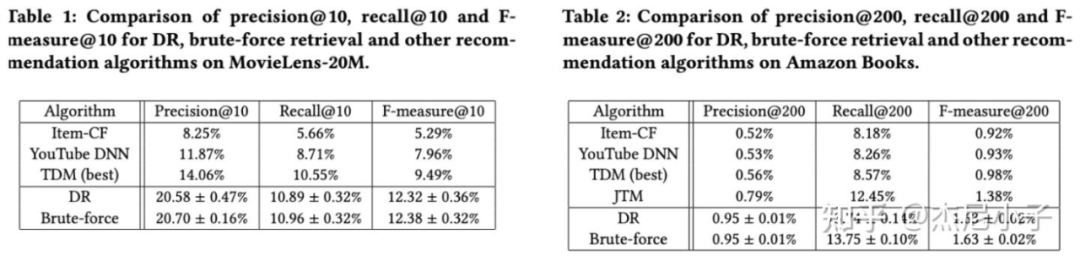

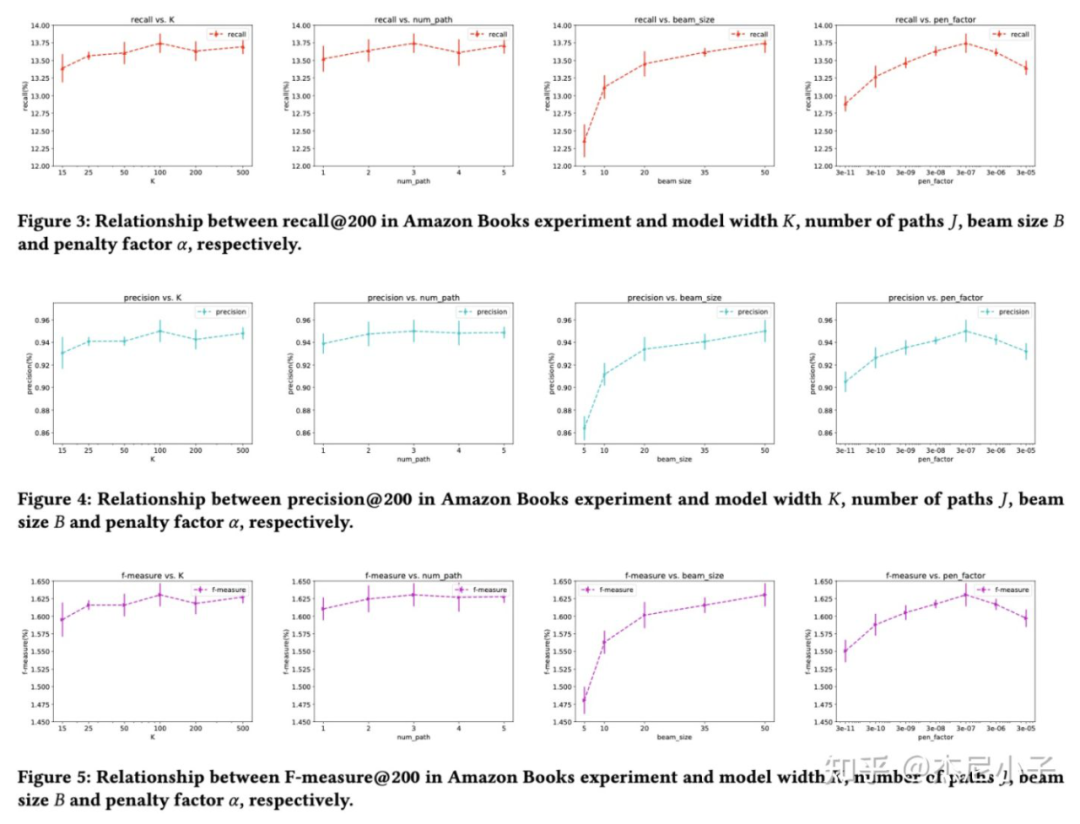

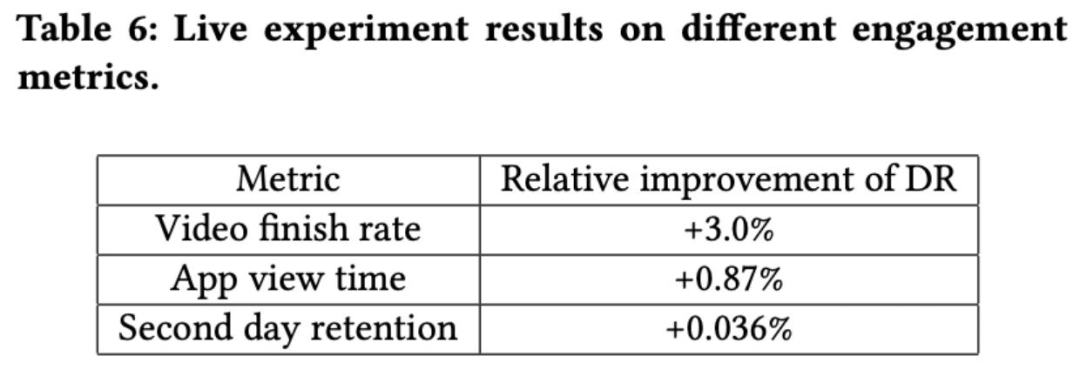

Experiments

ICLR review

The paper lacks a motivation for using the proposed scheme. It says that for tree-based models, the number of parameters is proportional to the number of clusters and hence it is a problem. This is not clear why this is such a problem. Successful application of tree-structure for large-scale problem has been demonstrated in [1,2]. Also, it is not clear how the proposed method addresses data scarcity, which according to the paper happens only in tree-based methods, and not in the proposed method as there are no leaves.

It is not clear how the proposed structure model (of using K \times D matrix) is different from the Chen etal 2018. The differences and similarities compared to this work should be clearly specified. Also, what seems to be missing is why such an architecture of using stacked multi-layer perceptrons should lead to better performance especially in positive data-scarcity situations where most of the users 'like' or 'buy' only few items.

The experimental comparison looks unclear and incomplete. The comparison should also be done with the approach proposed in Zhou etal 2020 ICML paper. At the end of Page 6 it is said that the results of JTM were only available for Amazon Books. How do you make sure that same training and test split (as in JTM) is used as the description says that test and validation set is done randomly. Also, it would also be good to see the code and be able to reproduce the results.

References of some key papers are based on arxiv versions, such as Chen etal 2018 and Zhou etal. 2020, where both the papers have been accepted in ICML conference of respective years.

The authors mainly claim that the objective of learning vector representation and good inner-product search are not well aligned, and the dependence on inner-products of user/item embeddings may not be sufficient to capture their interactions. This is an ongoing research discussion on this domain. I'd recommend the authors to refer to a recent paper, proposing the opposite direction from this submission: Neural Collaborative Filtering vs. Matrix Factorization Revisited (RecSys 2020) arxiv.org/abs/2005.0968

According to Figure 1, a user embedding is given as an input, and the proposed model outputs probability distribution over all possible item codes, which in turn interpreted as items. That being said, it seems the user embedding is highly important in this model. A user can be modeled in a various ways, e.g., as a sequence of items consumed, or using some meta-data. If the user embeddings are not representative enough, the proposed model may not work, and on the other hand, if the user embedding is strong, it will estimate the probs more precisely. We would like to see more discussion on this.

In the experiment, there are multiple points that can be addressed. (a) Related to the point #2, the quality of embeddings is not controlled. Thus, comparing DR against brute-force proves that the proposed method is effective on MIPS, but not on the end-to-end retrieval. Ideally, we'd like to see experiments with multiple SOTA embeddings to see if applying DR to those embeddings still improves end-to-end retrieval performance. See examples below. (b) The baselines used in the experiment are not representing the current SOTA. Item-based CF is quite an old method, and YouTube DNN is not fully reproducible due to the discrepancy on input features (which are not publicly available outside of YouTube). We recommend comparing against / using embeddings of LLORMA (JMLR'16), EASE^R (WWW'19), and RecVAE (WSDM'20). (c) Evaluation metrics are somewhat arbitrary. The authors used only one k for P@k, R@k, and F1@k, arbitrarily chosen for each dataset. This may look like a cherry-picking, so we recommend to report scores with multiple k, e.g., {1, 5, 10, 50, 100}. Taking a metric like MAP or NDCG is another option.

The main contribution of this paper seems faster retrieval on MIPS. Overall, the paper is well-written. We recommend adding more intuitive description why the proposed mathematical form guarantees / leads to the optimal / better alignment to the retrieval structure. That is, how/why the use of greedy search leads to the optimal selection of item codes.

The significant feature of the DR model (from the claim in the abstract) is to encode all candidates in a discrete latent space. However, there are some previous attempts in this direction that are not discussed. For example VQ-VAE[1] also learns a discrete space. Another more related example is HashRec[2], which (end-to-end) learns binary codes for users and items for efficient hash table retrieval. It's not clear of the connections and why the proposed discrete structure is more suitable.

The experiments didn't show the superiority of the proposed method. As a retrieval method, the most common comparison method (e.g. github.com/erikbern/ann) is the plot of performance-retrieval time, which is absent in this paper. The paper didn't compare the efficiency against the baselines like TDM, JTM, or ANN-based models, which makes the experiments less convincing as the better performance may be due to the longer retrieval time.

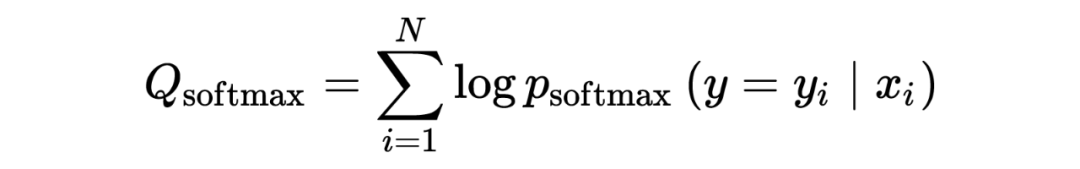

What's the performance of purely using softmax?

It seems only DR uses RNNs for sequential behavior modeling, while the baselines didn't. This'd be an unfair comparison, and sequential methods should be included if DR uses RNN and sequential actions for training.

I didn't understand the motivation of using the multi-path extension. As you already encode each item in D different clusters, this should be enough to express different aspects with a larger D. Why a multi-path variant is needed for making the model more expressive?


The Beam Search may not guarantee sub-linear time complexity due to the new hyper-parameter B. It's possible that a very large B is needed for retrieving enough candidates.

Some Open Questions

Summary
