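[Paopao One Minute] Adversarial Example Generation for Semantic Segmentation and Object Detection (ICCV2017-143)

One minute a day to read through papers from the top robotics conferences

Title: Adversarial Examples for Semantic Segmentation and Object Detection

Authors: Cihang Xie, Jianyu Wang, Zhishuai Zhang, Yuyin Zhou, Lingxi Xie, Alan Yuille

Source: International Conference on Computer Vision (ICCV 2017)

Compiled by: 王嫣然

Reviewed by: 颜青松

Individuals are welcome to share this post on WeChat Moments; other organizations or media accounts that wish to repost it should leave a message in the account backend to request authorization.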

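Summary

It is well established that adversarial examples, i.e. natural images with visually imperceptible perturbations added, cause deep networks to fail on image classification. This paper extends adversarial examples to semantic segmentation and object detection, which are considerably more challenging.

Both segmentation and detection rest on classifying multiple targets in an image: in segmentation a target is a pixel or a receptive field, and in detection it is an object proposal. A loss function can therefore be optimized over a set of targets to generate an adversarial perturbation. Building on this, the paper proposes a new algorithm, Dense Adversary Generation (DAG), which applies to state-of-the-art networks for segmentation and detection.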
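The idea of optimizing one loss over a whole set of targets can be sketched on a toy problem. The code below is a minimal illustration, not the authors' implementation: it assumes a per-target linear scorer with shared weights `W` (loosely analogous to a 1x1 convolution over pixels), and the update rule (gradient ascent on "adversarial score minus true score" over the targets that are still classified correctly, with a step normalized by its largest absolute entry) is an assumption made for this example. The names `dense_adversary`, `gamma` and `max_iters` are hypothetical.

```python
import numpy as np

def dense_adversary(x, W, true_labels, adv_labels, gamma=0.1, max_iters=50):
    """Toy dense attack. x: (T, d), one feature row per target; W: (C, d) scorer."""
    x_adv = np.array(x, dtype=float)          # work on a float copy
    history = []                              # number of active targets per iteration
    for _ in range(max_iters):
        scores = x_adv @ W.T                  # (T, C) class scores for every target
        active = scores.argmax(axis=1) == true_labels   # still correctly classified
        history.append(int(active.sum()))
        if not active.any():
            break
        # Gradient w.r.t. x of sum over active targets of (adv score - true score).
        grad = np.zeros_like(x_adv)
        grad[active] = W[adv_labels[active]] - W[true_labels[active]]
        # Normalized step (assumed rule): largest per-entry change equals gamma.
        x_adv += gamma * grad / np.abs(grad).max()
    return x_adv - x, history

rng = np.random.default_rng(0)
T, C = 12, 3
W = np.eye(C)                                 # toy scorer: class = largest coordinate
x = rng.uniform(0.0, 1.0, size=(T, C))
true_labels = (x @ W.T).argmax(axis=1)        # treat clean predictions as ground truth
adv_labels = (true_labels + 1) % C            # any label different from the true one
r, history = dense_adversary(x, W, true_labels, adv_labels)
print("active targets per iteration:", history)
print("max |perturbation|:", np.abs(r).max())
```

In this toy setting every target owns its own input coordinates, so the count of active targets can only go down; in a real network the targets share pixels and no such guarantee holds.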

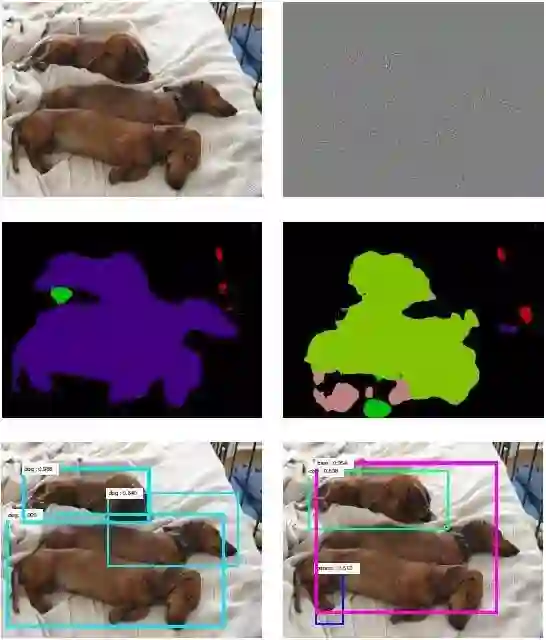

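Figure 1. Adversarial examples for semantic segmentation (FCN) and object detection (Faster-RCNN). Left: segmentation and detection results without perturbation. Right: results after the perturbation is added. Pink regions on the right mark pixels recognized as person. Once the perturbation is added, both the segmentation and the detection results are far from satisfactory.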

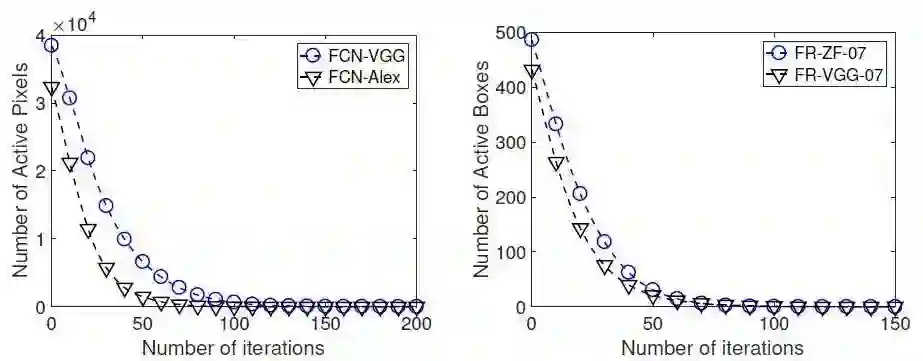

Figure 2. Convergence of DAG, measured by the number of active targets.

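Experiments show that adversarial perturbations transfer across networks trained on different data, built on different architectures, and even designed for different recognition tasks. Transfer is most pronounced between networks that share the same architecture. The paper also shows that summing heterogeneous perturbations often yields better transfer performance, which provides an effective method for black-box adversarial attacks.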
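Summing perturbations is simple to express in code. The sketch below assumes the perturbations were already generated against different source networks and share the image's shape. Random arrays stand in for them here, and the function names, the [0, 255] pixel range and the clipping step are assumptions for illustration rather than details taken from the paper.

```python
import numpy as np

def sum_perturbations(perturbations):
    """Element-wise sum of perturbations that all share one shape."""
    stacked = np.stack(perturbations)     # raises ValueError if shapes differ
    return stacked.sum(axis=0)

def apply_perturbation(image, r, lo=0.0, hi=255.0):
    """Add the perturbation and clip to the (assumed) valid pixel range."""
    return np.clip(image + r, lo, hi)

rng = np.random.default_rng(0)
image = rng.uniform(0.0, 255.0, size=(4, 4, 3))
# Stand-ins for perturbations computed against two different source networks.
r_a = rng.uniform(-2.0, 2.0, size=image.shape)
r_b = rng.uniform(-2.0, 2.0, size=image.shape)

r_sum = sum_perturbations([r_a, r_b])
adversarial = apply_perturbation(image, r_sum)
print("max |r_a|:", np.abs(r_a).max())
print("max |r_sum|:", np.abs(r_sum).max())
```

The summed perturbation can be larger in magnitude than either component, so its visibility should be checked before it is used against a black-box model.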

Abstract

It has been well demonstrated that adversarial examples, i.e., natural images with visually imperceptible perturbations added, cause deep networks to fail on image classification. In this paper, we extend adversarial examples to semantic segmentation and object detection which are much more difficult. Our observation is that both segmentation and detection are based on classifying multiple targets on an image (e.g., the target is a pixel or a receptive field in segmentation, and an object proposal in detection). This inspires us to optimize a loss function over a set of targets for generating adversarial perturbations. Based on this, we propose a novel algorithm named Dense Adversary Generation (DAG), which applies to the state-of-the-art networks for segmentation and detection. We find that the adversarial perturbations can be transferred across networks with different training data, based on different architectures, and even for different recognition tasks. In particular, the transfer ability across networks with the same architecture is more significant than in other cases. Besides, we show that summing up heterogeneous perturbations often leads to better transfer performance, which provides an effective method of black-box adversarial attack.


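All original content from Paopao Robot SLAM (泡泡机器人SLAM) is produced by its members with considerable effort. Please respect that work: any repost must credit the [Paopao Robot SLAM] WeChat public account, and infringement will be pursued. Sharing to your own WeChat Moments is welcome, so that more people can enter the SLAM field and help advance SLAM in China.

For business cooperation and reposting, contact liufuqiang_robot@hotmail.com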