【泡泡一分钟】 Look, Attend, Segment: Salient Object Detection Based on Fixation and Segmentation (ICCV2017-110)

One minute a day, taking you through papers from top robotics conferences

Title: Look, Perceive and Segment: Finding the Salient Objects in Images via Two-stream Fixation-Semantic CNNs

Authors: Xiaowu Chen, Anlin Zheng, Jia Li, Feng Lu

Source: ICCV 2017 (IEEE International Conference on Computer Vision)

Compiled by: 颜青松

Reviewed by: 陈世浪

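Individuals are welcome to share this post on WeChat Moments; other organizations or media accounts that wish to repost it should leave a message in the backend to request authorization.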

Summary

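In recent years, CNN-based methods have achieved remarkable results in image-based salient object detection (SOD). Many researchers have been working to find network architectures better suited to the SOD task.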

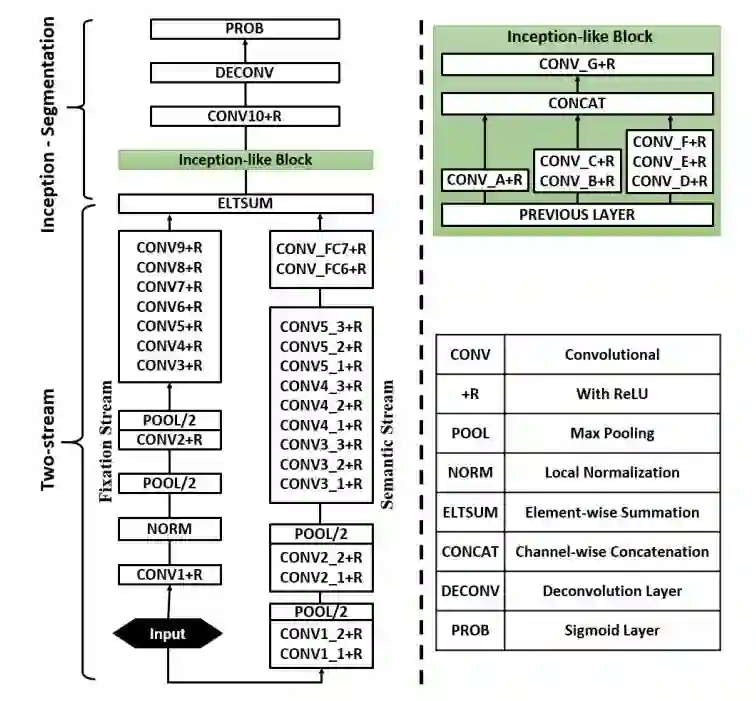

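To this end, the authors make clever use of fixation density maps obtained from eye tracking and fuse them with a semantic network, arriving at a two-stream architecture. Within this architecture, the fixation stream is pre-trained on eye-tracking data and the semantic stream is pre-trained on images with semantic tags. The two streams are then fused into an inception-segmentation module, and the whole network is jointly fine-tuned on images with manually annotated salient objects.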
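The idea of fusing the two streams can be pictured with a toy example. The sketch below is not the paper's inception-segmentation module, which is a learned multi-layer network; it is a drastically simplified stand-in that combines one fixation map and one semantic map with a per-pixel weighted sum followed by a sigmoid (what a single 1x1 convolution over two stacked channels would compute). The function name `fuse_streams` and the parameters `w_fix`, `w_sem` and `bias` are hypothetical placeholders for values that would be learned during fine-tuning.

```python
import math


def sigmoid(x):
    """Logistic function mapping a real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def fuse_streams(fixation_map, semantic_map, w_fix=1.0, w_sem=1.0, bias=0.0):
    """Fuse two same-sized 2D maps into one saliency map.

    Each output pixel is sigmoid(w_fix * f + w_sem * s + bias).
    The weights are hypothetical stand-ins for learned parameters;
    this is an illustration, not the paper's fusion module.
    """
    if len(fixation_map) != len(semantic_map):
        raise ValueError("maps must have the same number of rows")
    fused = []
    for fix_row, sem_row in zip(fixation_map, semantic_map):
        if len(fix_row) != len(sem_row):
            raise ValueError("maps must have the same number of columns")
        fused.append([
            sigmoid(w_fix * f + w_sem * s + bias)
            for f, s in zip(fix_row, sem_row)
        ])
    return fused


# Made-up 2x2 inputs: where viewers looked, and where an object is detected.
fixation = [[0.9, 0.1],
            [0.8, 0.0]]
semantic = [[1.0, 1.0],
            [0.0, 0.0]]

saliency = fuse_streams(fixation, semantic, w_fix=4.0, w_sem=4.0, bias=-6.0)
for row in saliency:
    print([round(v, 3) for v in row])
```

With these made-up weights, only the top-left pixel, which is both fixated and semantically part of an object, ends up above 0.5. That is the intuition behind combining the two cues: neither stream alone is enough to mark a pixel as salient.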

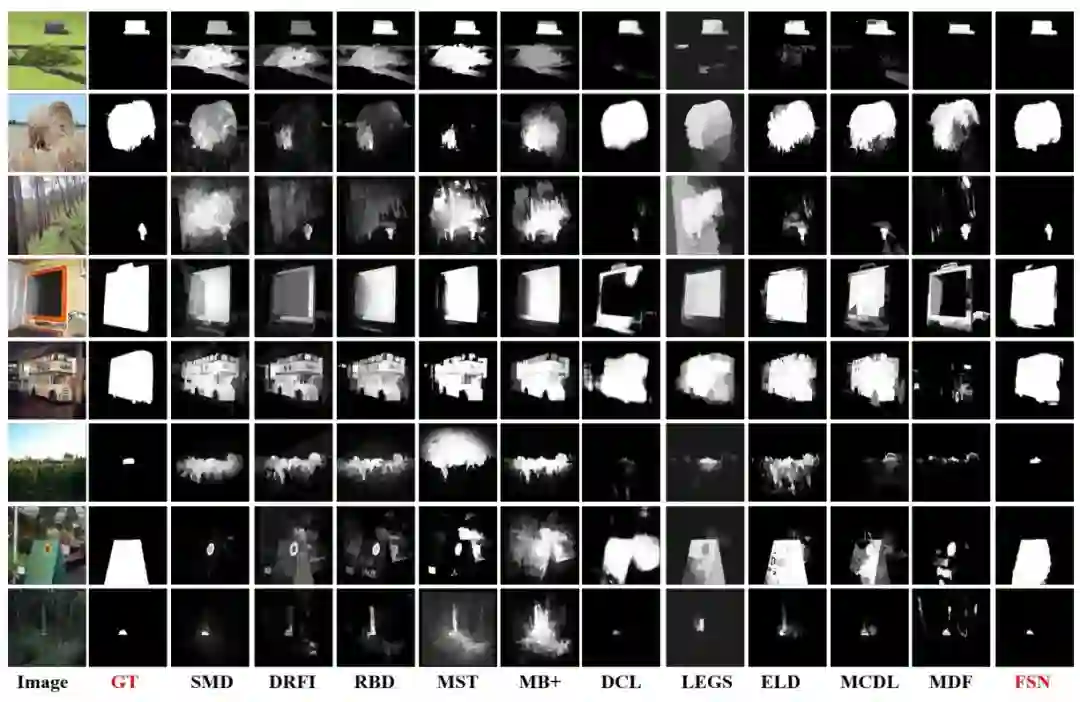

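Experiments show that the proposed architecture performs very well: on 4 public datasets it outperforms 10 state-of-the-art models (5 deep, 5 non-deep).

For the details, follow the link to the original paper.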

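Figure 1. Flowchart of the overall network architecture; building on fixation density maps effectively improves the accuracy of salient object detection.

Figure 2. The network architecture proposed in the paper.

Figure 3. Some results of the proposed algorithm: the first column is the input image, the second column is the ground truth, and the last column is the result of the proposed algorithm.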

Abstract

Recently, CNN-based models have achieved remarkable success in image-based salient object detection (SOD). In these models, a key issue is to find a proper network architecture that best fits for the task of SOD.

Toward this end, this paper proposes two-stream fixation-semantic CNNs, whose architecture is inspired by the fact that salient objects in complex images can be unambiguously annotated by selecting the pre-segmented semantic objects that receive the highest fixation density in eye-tracking experiments.
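The observation that motivates the architecture, picking the pre-segmented object that receives the highest fixation density, can be written down directly. The following is a minimal sketch under stated assumptions, not code from the paper: the helper names `select_salient_segment` and `salient_mask` are hypothetical, "fixation density" of a segment is taken to be the mean density over its pixels, and every label (including any background label) is treated as a candidate segment.

```python
from collections import defaultdict


def select_salient_segment(segment_labels, fixation_density):
    """Return the label of the segment with the highest mean fixation density.

    segment_labels and fixation_density are same-sized 2D lists.
    Using the mean (rather than the sum) is an assumption of this sketch.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for label_row, density_row in zip(segment_labels, fixation_density):
        for label, density in zip(label_row, density_row):
            totals[label] += density
            counts[label] += 1
    if not counts:
        raise ValueError("empty label map")
    return max(totals, key=lambda label: totals[label] / counts[label])


def salient_mask(segment_labels, fixation_density):
    """Binary mask that is 1 on the selected segment and 0 elsewhere."""
    best = select_salient_segment(segment_labels, fixation_density)
    return [[1 if label == best else 0 for label in row]
            for row in segment_labels]


# Made-up 3x3 example with three segments (labels 0, 1, 2).
labels = [[0, 0, 1],
          [0, 2, 1],
          [2, 2, 1]]
density = [[0.0, 0.1, 0.9],
           [0.0, 0.2, 0.8],
           [0.1, 0.3, 0.7]]

print(select_salient_segment(labels, density))
for row in salient_mask(labels, density):
    print(row)
```

In this made-up example the segment in the right-hand column collects almost all of the fixation density, so it is the one selected as the salient object.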

In the two-stream CNNs, a fixation stream is pre-trained on eye-tracking data whose architecture well fits for the task of fixation prediction, and a semantic stream is pre-trained on images with semantic tags that has a proper architecture for semantic perception.

By fusing these two streams into an inception-segmentation module and jointly fine-tuning them on images with manually annotated salient objects, the proposed networks show impressive performance in segmenting salient objects. Experimental results show that our approach outperforms 10 state-of-the-art models (5 deep, 5 non-deep) on 4 datasets.

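If you are interested in this paper and would like to download the full text, follow the 【泡泡机器人SLAM】 WeChat public account (paopaorobot_slam).

Welcome to the Paopao forum, where experts can answer any questions you have about SLAM.

Whether you have questions to ask or want to browse threads and answer them, the Paopao forum welcomes you!

Paopao website: www.paopaorobot.org

Paopao forum: http://paopaorobot.org/forums/

The original content of 【泡泡机器人SLAM】 is produced by its members with a great deal of effort, and we hope you will respect our work. When reposting, be sure to credit the 【泡泡机器人SLAM】 WeChat public account; infringement will be pursued. We also welcome you to share this post on your own WeChat Moments so that more people can enter the SLAM field. Let us work together to advance SLAM in China!

For business cooperation and reposting, contact liufuqiang_robot@hotmail.com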