[PaoPao One Minute] Deep Direct Regression for Multi-Oriented Scene Text Detection (ICCV2017-72)

One minute a day to read through papers from top robotics conferences

Title: Deep Direct Regression for Multi-Oriented Scene Text Detection

Authors: Wenhao He, Xu-Yao Zhang, Fei Yin, Cheng-Lin Liu

Source: International Conference on Computer Vision (ICCV 2017)

Narrator: Nuomi (糯米)

Compiled by: Liu Mengya, Zhou Ping (78)

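Individuals are welcome to share this post on WeChat Moments; other organizations or self-media accounts that wish to republish it should leave a message in our backend to request authorization.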

Summary

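In this paper, we first offer a new perspective that divides existing high-performance object detection methods into direct regression and indirect regression. Direct regression performs boundary regression by predicting offsets from a given point, whereas indirect regression predicts offsets from bounding box proposals.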
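To make the distinction concrete, here is a minimal Python sketch. The direct decoder adds eight predicted offsets to a reference point to obtain the four vertices of a quadrilateral. The indirect decoder uses the widely used Faster R-CNN-style box parameterization (center shifts scaled by the proposal size, log-space width and height); that parameterization is an assumption for illustration, not necessarily the exact form analyzed in the paper. The function names `decode_direct` and `decode_indirect` are hypothetical.

```python
import math


def decode_direct(px, py, offsets):
    """Direct regression: each vertex = reference point + predicted offset.

    offsets holds 8 values (dx1, dy1, ..., dx4, dy4) relative to (px, py).
    Returns the 4 quadrilateral vertices as (x, y) tuples.
    """
    return [(px + offsets[2 * i], py + offsets[2 * i + 1]) for i in range(4)]


def decode_indirect(proposal, deltas):
    """Indirect regression (assumed Faster R-CNN-style parameterization).

    proposal: (cx, cy, w, h) of an anchor / proposal box.
    deltas:   (tx, ty, tw, th) predicted relative to that proposal.
    Returns the refined box as (cx, cy, w, h).
    """
    cx, cy, w, h = proposal
    tx, ty, tw, th = deltas
    return (cx + tx * w, cy + ty * h, w * math.exp(tw), h * math.exp(th))


if __name__ == "__main__":
    # A point at (10, 20) with offsets describing a 10 x 6 quadrilateral around it.
    print(decode_direct(10, 20, [-5, -3, 5, -3, 5, 3, -5, 3]))
    # A 20 x 10 proposal centred at (50, 50), shifted right by half its width.
    print(decode_indirect((50.0, 50.0, 20.0, 10.0), (0.5, 0.0, 0.0, 0.0)))
```

Note that this particular indirect decoder can only return an axis-aligned box whose output is tied to the proposal it starts from, while the direct decoder places each vertex independently of any proposal; this is one way to see why the paper argues direct regression suits multi-oriented text.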

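In the context of multi-oriented scene text detection, we analyze the drawbacks of indirect regression, taking the state-of-the-art detection architectures Faster-RCNN and SSD as instances, and point out the potential advantages of direct regression. To verify this view, we propose a deep direct regression based method for multi-oriented scene text detection. Our detection framework is simple and effective: it uses only a fully convolutional network and one-step post-processing. The framework is shown below: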

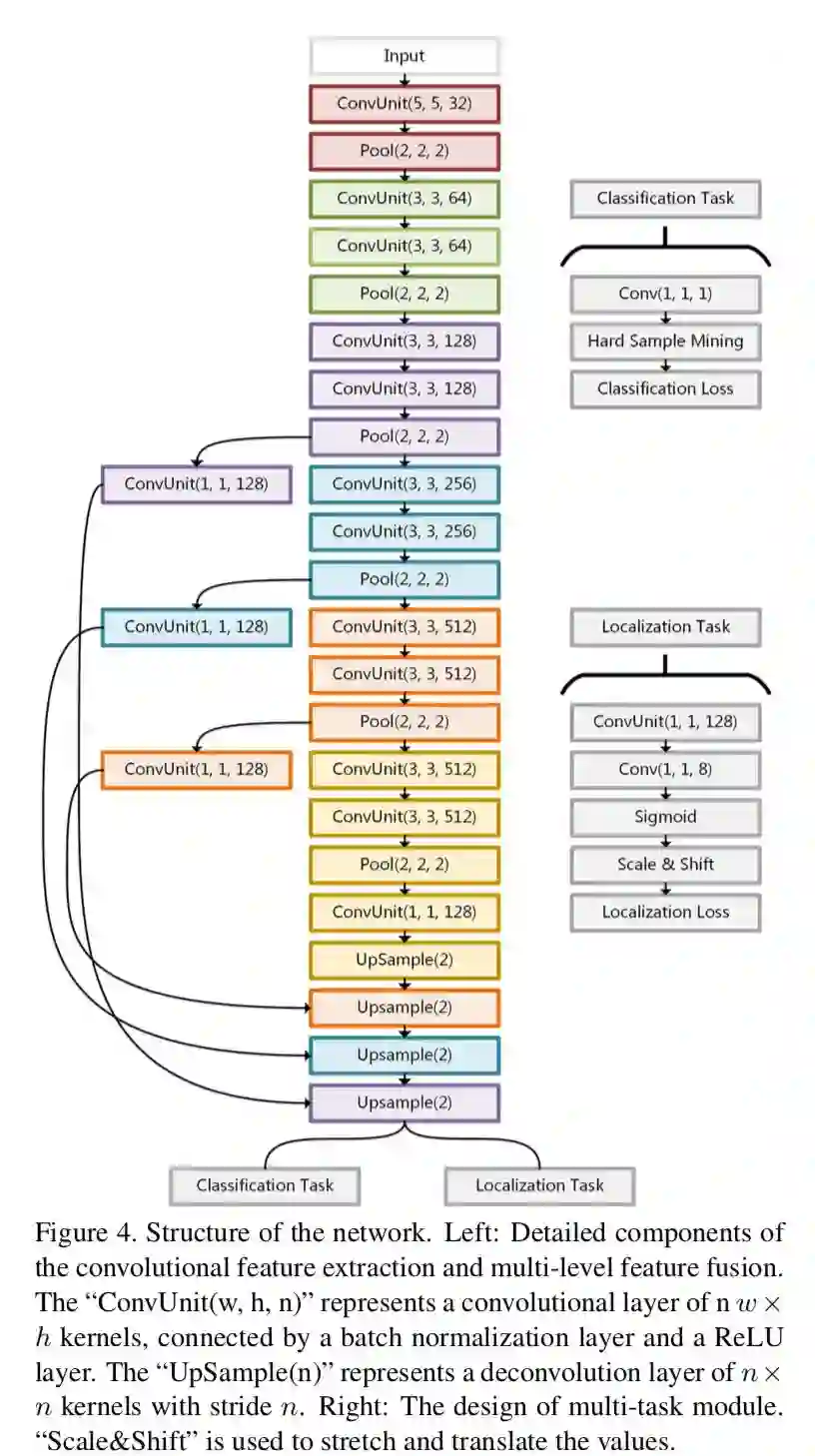

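The fully convolutional network is optimized end to end and has bi-task outputs: one is pixel-wise classification between text and non-text, and the other is direct regression that determines the vertex coordinates of the quadrilateral text boundaries. The network structure is shown below: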
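The following is a minimal sketch of how the two outputs could be combined at inference time. It assumes the regression map stores, for each output pixel, eight offsets (dx1, dy1, ..., dx4, dy4) from that pixel's location in the input image to the four quadrilateral vertices. The function name `decode_bitask_outputs`, the 0.5 threshold, and the `stride` of 4 between the input image and the output maps are hypothetical, and the paper's one-step post-processing is not reproduced here.

```python
import numpy as np


def decode_bitask_outputs(score_map, geo_map, score_thresh=0.5, stride=4):
    """Turn per-pixel bi-task outputs into candidate quadrilaterals.

    score_map: (H, W) text / non-text probabilities (classification task).
    geo_map:   (H, W, 8) vertex offsets per pixel (regression task),
               assumed to be in input-image pixels.
    stride:    hypothetical down-sampling factor of the output maps.
    Returns (N, 4, 2) vertex coordinates and (N,) scores.
    """
    # Keep only pixels classified as text; nonzero returns (rows, cols) = (y, x).
    ys, xs = np.nonzero(score_map > score_thresh)
    # Map output-grid coordinates back to input-image coordinates as (x, y).
    points = np.stack([xs, ys], axis=1) * stride
    # Each selected pixel predicts 8 numbers -> 4 vertices of (dx, dy).
    offsets = geo_map[ys, xs].reshape(-1, 4, 2)
    # Direct regression: vertex = pixel location + predicted offset.
    quads = points[:, None, :] + offsets
    return quads, score_map[ys, xs]
```

Every text pixel yields its own candidate quadrilateral, so in practice many overlapping candidates are produced per text instance; merging them is the job of the post-processing step.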

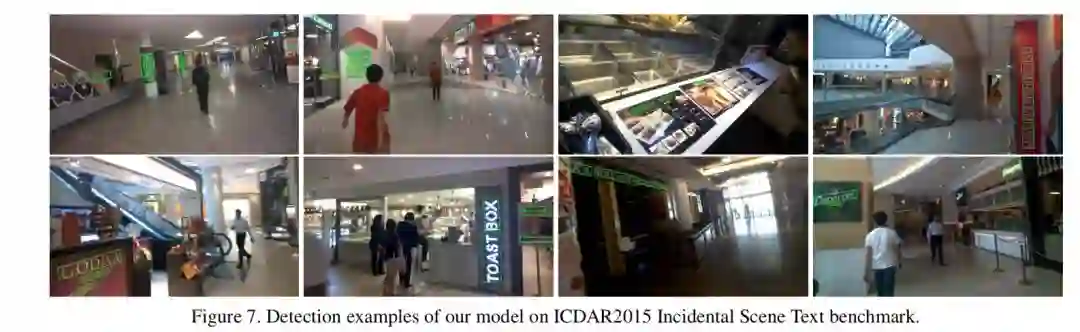

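The proposed method is particularly effective at localizing incidental scene text. On the ICDAR2015 Incidental Scene Text benchmark, our method achieves an F-measure of 81%, a new state of the art that significantly outperforms previous approaches. On other standard datasets with focused scene text, it also reaches state-of-the-art performance. The figure below shows our test results on the ICDAR2015 dataset: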

Abstract

In this paper, we first provide a new perspective to divide existing high performance object detection methods into direct and indirect regressions. Direct regression performs boundary regression by predicting the offsets from a given point, while indirect regression predicts the offsets from some bounding box proposals. In the context of multi-oriented scene text detection, we analyze the drawbacks of indirect regression, which covers the state-of-the-art detection structures Faster-RCNN and SSD as instances, and point out the potential superiority of direct regression. To verify this point of view, we propose a deep direct regression based method for multi-oriented scene text detection. Our detection framework is simple and effective with a fully convolutional network and one-step post processing. The fully convolutional network is optimized in an end-to-end way and has bi-task outputs where one is pixel-wise classification between text and non-text, and the other is direct regression to determine the vertex coordinates of quadrilateral text boundaries. The proposed method is particularly beneficial to localize incidental scene texts. On the ICDAR2015 Incidental Scene Text benchmark, our method achieves the F-measure of 81%, which is a new state-of-the-art and significantly outperforms previous approaches. On other standard datasets with focused scene texts, our method also reaches the state-of-the-art performance.
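For reference, the F-measure quoted for the ICDAR2015 benchmark is, as in the standard ICDAR evaluation protocol, the harmonic mean of precision $P$ and recall $R$:

```latex
F = \frac{2PR}{P + R}
```

Because a harmonic mean is pulled toward the smaller of its two inputs, the score rewards a detector that balances precision and recall rather than one that excels at only one of them.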

If you are interested in this paper and want to download the full text, follow the [PaoPao Robot SLAM] WeChat official account (paopaorobot_slam).

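Welcome to the PaoPao Forum, where experts will answer any questions you have about SLAM.

Whether you have questions to ask or want to browse posts and answer them, the PaoPao Forum welcomes you!

PaoPao website: www.paopaorobot.org

PaoPao Forum: http://paopaorobot.org/forums/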

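All original content from PaoPao Robot SLAM is produced with great effort by members of PaoPao Robot, so please respect our work. When republishing, be sure to credit the [PaoPao Robot SLAM] WeChat official account; otherwise we will pursue infringement. We also welcome everyone to share our posts to their WeChat Moments so that more people can enter the field of SLAM. Let us work together to advance SLAM in China!

For business cooperation and republication, please contact liufuqiang_robot@hotmail.com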