[Paopao One Minute] Unsupervised Adaptation for Deep Stereo (ICCV 2017)

One minute a day, taking you through papers from the top robotics conferences

Title: Unsupervised Adaptation for Deep Stereo

Authors: Alessio Tonioni, Matteo Poggi, Stefano Mattoccia, Luigi Di Stefano

Source: International Conference on Computer Vision (ICCV 2017)

Translated by: 马可

Reviewed by: 颜青松, 陈世浪

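Individuals are welcome to share this post on WeChat Moments. Other organizations or media accounts that wish to repost it should leave a message in the account backend to request authorization.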

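Summary

Recent breakthrough work has shown that deep neural networks can be trained end-to-end to regress dense disparity maps directly from image pairs. Computer-generated imagery can supply the large amount of training data such networks need, and an additional fine-tuning step lets the model perform well in real and possibly diverse environments. However, apart from a few public datasets such as KITTI, the ground truth needed to adapt a network to a new scenario is hard to obtain in practice. This paper proposes an unsupervised adaptation approach that fine-tunes a deep stereo model without any ground-truth information. The approach combines off-the-shelf stereo algorithms with state-of-the-art confidence measures, where the latter assess the correctness of the disparities produced by the former. The network is then trained with a new loss function that penalizes predictions that disagree with the high-confidence disparities provided by the stereo algorithm, while also enforcing a smoothness constraint.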

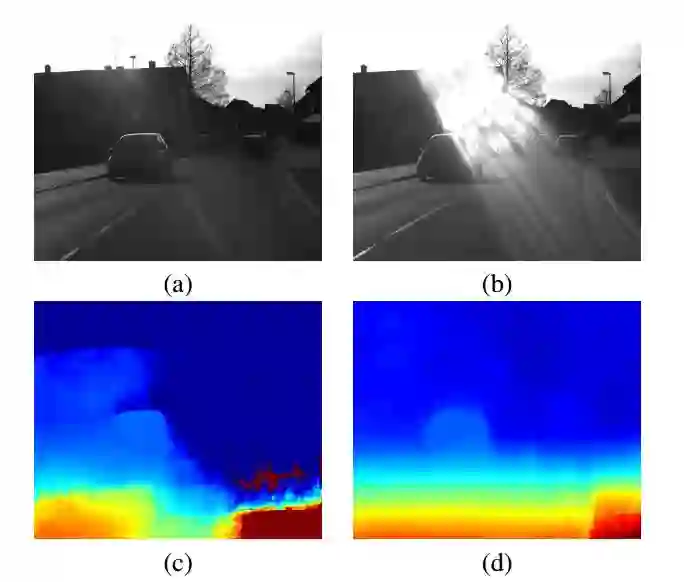

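Figure 1. Effectiveness of unsupervised adaptation. (a), (b): left and right images of a stereo pair from a dataset without ground truth [11]. (c): output of Dispnet-Corr1D. (d): output of Dispnet-Corr1D after unsupervised adaptation.

Figure 2. Results on the PianoL image from the Middlebury 2014 dataset; the numbers in parentheses are average errors. From left to right: the ground-truth disparity map (white points are undefined), followed by the disparity maps produced by three different stereo algorithms.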

Abstract

Recent ground-breaking works have shown that deep neural networks can be trained end-to-end to regress dense disparity maps directly from image pairs. Computer generated imagery is deployed to gather the large data corpus required to train such networks, an additional finetuning allowing to adapt the model to work well also on real and possibly diverse environments. Yet, besides a few public datasets such as Kitti, the groundtruth needed to adapt the network to a new scenario is hardly available in practice. In this paper we propose a novel unsupervised adaptation approach that enables to fine-tune a deep learning stereo model without any ground-truth information. We rely on off-the-shelf stereo algorithms together with state-of-the-art confidence measures, the latter able to ascertain upon correctness of the measurements yielded by former. Thus, we train the network based on a novel loss-function that penalizes predictions disagreeing with the highly confident disparities provided by the algorithm and enforces a smoothness constraint. Experiments on popular datasets (KITTI 2012, KITTI 2015 and Middlebury 2014) and other challenging test images demonstrate the effectiveness of our proposal.
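The loss described in the abstract can be illustrated with a small NumPy sketch. This is not the paper's exact formulation, only one plausible reading of it: an L1 data term restricted to pixels whose confidence exceeds a threshold, plus a first-order smoothness penalty on the predicted disparity. The function name `adaptation_loss`, the threshold `tau`, and the weight `lam` are assumptions introduced here for illustration.

```python
import numpy as np

def adaptation_loss(pred, stereo_disp, confidence, tau=0.9, lam=0.1):
    """Illustrative confidence-guided loss (assumed form, not the paper's).

    pred        -- disparity map predicted by the network, shape (H, W)
    stereo_disp -- disparity map from an off-the-shelf stereo algorithm
    confidence  -- per-pixel confidence for stereo_disp, in [0, 1]
    tau         -- hypothetical confidence threshold
    lam         -- hypothetical weight of the smoothness term
    """
    pred = np.asarray(pred, dtype=np.float64)
    stereo_disp = np.asarray(stereo_disp, dtype=np.float64)
    mask = np.asarray(confidence) > tau

    # Data term: mean absolute disagreement, counted only on confident pixels.
    n_confident = mask.sum()
    if n_confident > 0:
        data = np.abs(pred - stereo_disp)[mask].sum() / n_confident
    else:
        data = 0.0

    # Smoothness term: mean absolute difference between neighbouring pixels.
    dx = np.abs(np.diff(pred, axis=1))
    dy = np.abs(np.diff(pred, axis=0))
    smooth = (dx.mean() if dx.size else 0.0) + (dy.mean() if dy.size else 0.0)

    return float(data + lam * smooth)


if __name__ == "__main__":
    pred = np.full((4, 4), 5.0)
    stereo = np.full((4, 4), 7.0)
    conf = np.ones((4, 4))
    print(adaptation_loss(pred, stereo, conf))
```

In an actual fine-tuning setup the same two terms would be written in a differentiable framework so that gradients flow back into the network. Pixels below the threshold contribute nothing to the data term, so unreliable stereo measurements do not pull the prediction toward wrong disparities.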
