CVPR2019 | 旷视科技提出PSENet文本检测算法

点击上方“CVer”,选择加"星标"或“置顶”

重磅干货,第一时间送达

作者:柏林Designer

https://zhuanlan.zhihu.com/p/63074253

本文已授权,未经允许,不得二次转载

Shape Robust Text Detection with Progressive Scale Expansion Network

KeyWords Plus: CVPR2019 Curved Text Face++

paper :https://arxiv.org/abs/1903.12473

Github: https://github.com/whai362/PSENet

Introduction

PSENet 分好几个版本,最新的一个是19年的CVPR,这是一篇南京大学和face++合作的文章(好像还有好几个机构的人),19年出现了很多不规则文本检测算法,TextMountain、Textfield等等,不过为啥我要好好研究这个(因为这篇文章开源了代码。。。)。

1、论文创新点

1、Propose a novel kernel-based framework, namely, Progressive Scale Expansion Network (PSENet)

2、Adopt a progressive scale expansion algorithm based on Breadth-First-Search (BFS):

(1)、Starting from the kernels with minimal scales (instances can be distinguished in this step)

(2)、Expanding their areas by involving more pixels in larger kernels gradually

(3)、Finish- ing until the complete text instances (the largest kernels) are explored.

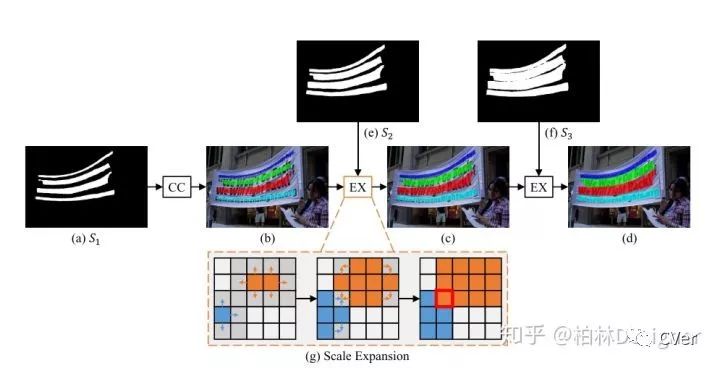

这个文章主要做的创新点大概就是预测多个分割结果,分别是S1,S2,S3…Sn代表不同的等级面积的结果,S1最小,基本就是文本骨架,Sn最大。然后在后处理的过程中,先用最小的预测结果去区分文本,再逐步扩张成正常文本大小。。。

2、算法主体

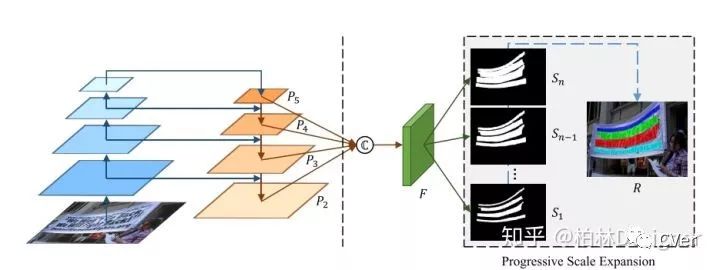

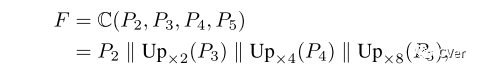

We firstly get four 256 channels feature maps(i.e. P2, P3, P4, P5)from the backbone. To further combine the semantic features from low to high levels, we fuse the four feature maps to getfeature map F with 1024 channelsvia the function C(·) as:

先backbone下采样得到四层的feature maps,再通过fpn对四层feature分别进行上采样2,4,8倍进行融合得到输出结果。

如上图所示,网络有三个分割结果,分别是S1,S2,S3.首先利用最小的kernel生成的S1来区分四个文本实例,然后再逐步扩张成S2和S3

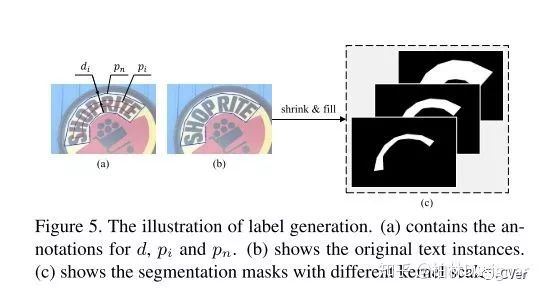

3、label generation

产生不同尺寸的S1….Sn需要不同尺寸的labels

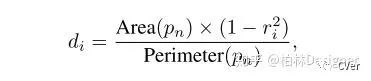

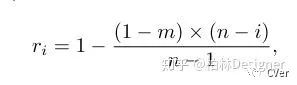

不同尺寸的labels生成如上图所示,缩放比例可以用下面公式计算得出:

这个

m是最小mask的比例,n在m到1之间的值,成线性增加。

4、Loss Function

Loss 主要分为分类的text instance loss和shrunk losses,L是平衡这两个loss的参数。分类loss主要用了交叉熵和dice loss。

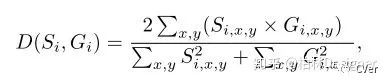

The dice coefficientD(Si, Gi)被计算如下:

4、Datasets

SynthText

Contains about 800K synthetic images.

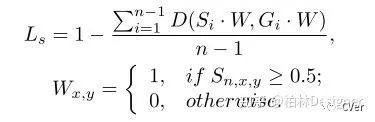

TotalText

Newly-released benchmark for text detection. Besides horizontal and multi-Oriented text instances.The dataset is split into training and testing sets with 1255 and 300 images, respectively.

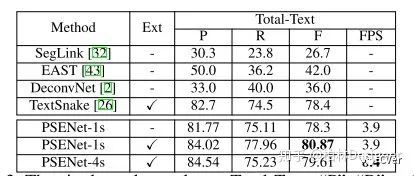

CTW1500

CTW1500 dataset mainly consisting of curved text. It consists of 1000 training images and 500 test images. Text instances are annotated with polygons with 14 vertexes.

ICDAR 2015

Icdar2015 is a commonly used dataset for text detection. It contains a total of 1500 pictures, 1000 of which are used for training and the remaining are for testing. The

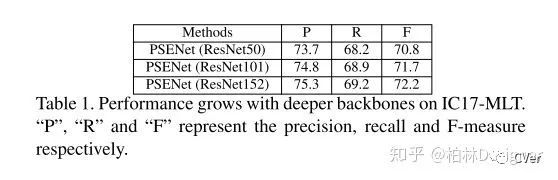

ICDAR 2017 MLT

ICDAR 2017 MIL is a large scale multi-lingual text dataset, which includes 7200 training im- ages, 1800 validation images and 9000 testing images.

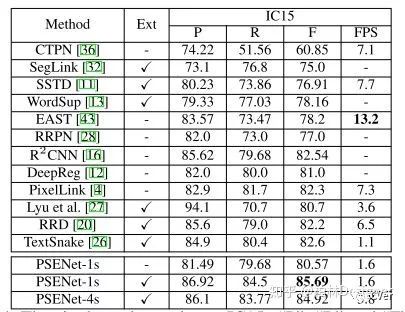

5、Experiment Results

Implementation Details

All the networks are optimized by using stochastic gradientdescent (SGD).Thedata augmentationfor training data is listed as follows: 1) the images are rescaled with ratio {0.5, 1.0, 2.0, 3.0} randomly; 2) the images are horizon- tally flipped and rotated in the range [−10◦, 10◦] randomly; 3) 640 × 640 random samples are cropped from the trans- formed images.

6、Conclusion and Future work

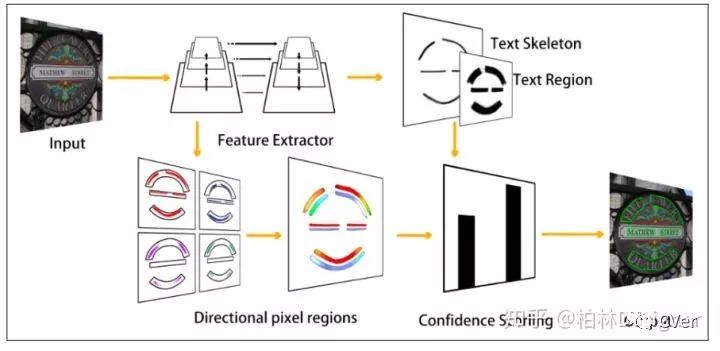

个人观点:这个文章其实做的只是一件事情,就是用预测得到的小的mask区分文本,然后逐渐扩张形成正常大小的文本mask,个人最近发了一篇比较水的会议论文也是检测不规则文本的:TextCohesion: Detecting Text for ArbitraryShapes,其实本质是和这个文章是差不多的(我发之前还没看过这个文章,好像也没有被收录),不过算法主体是不一样的,我这个文章过几天也会挂到arxiv上,主要也是用小的mask区分文本实例,但是我不是进行扩展,我是讲除了文本骨架外的文本像素给不同的方向预测,使得四周的文本像素对文本骨架有一个类似于“聚心力”的作用,最终形成一个文本实例。pipeline如下(在total和ctw1500上实验指标蛮高,暂时第一)

反馈与建议

微博:@柏林designer

邮箱:weijia_wu@yeah.net

CVer文本检测交流群

扫码添加CVer助手,可申请加入CVer-文本检测交流群。一定要备注:文本检测+地点+学校/公司+昵称(如文本检测+上海+上交+卡卡)

▲长按加群

这么硬的论文,麻烦给我一个在看

▲长按关注我们

麻烦给我一个在看!