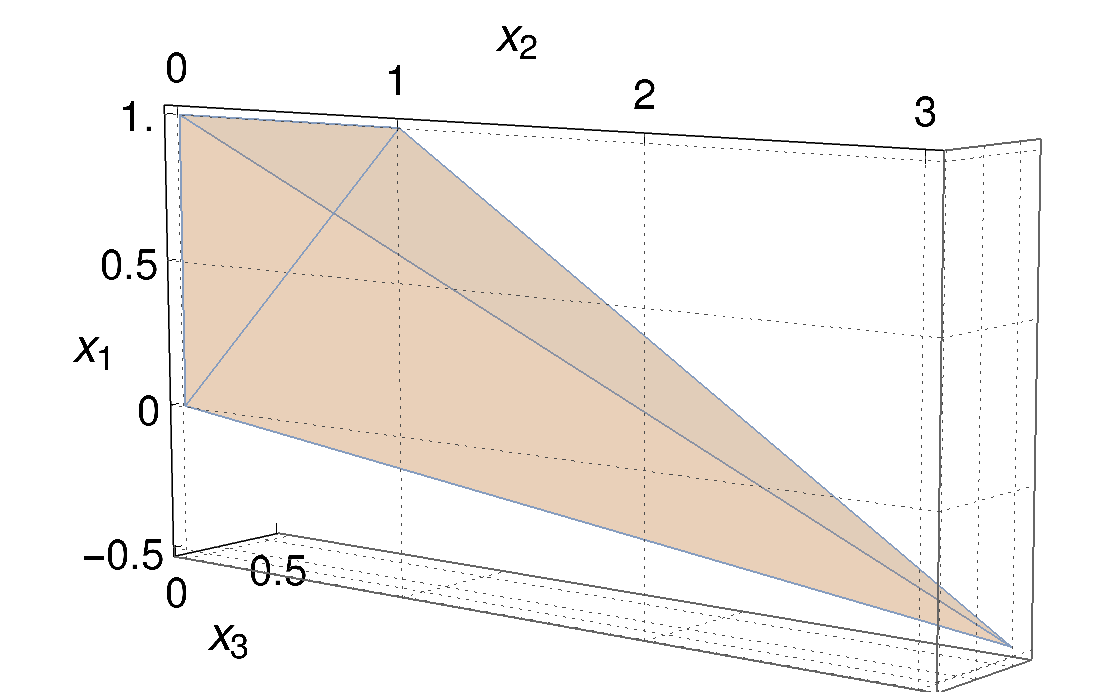

Cut selection is a subroutine used in all modern mixed-integer linear programming solvers with the goal of selecting a subset of generated cuts that induce optimal solver performance. These solvers have millions of parameter combinations, and so are excellent candidates for parameter tuning. Cut selection scoring rules are usually weighted sums of different measurements, where the weights are parameters. We present a parametric family of mixed-integer linear programs together with infinitely many family-wide valid cuts. Some of these cuts can induce integer optimal solutions directly after being applied, while others fail to do so even if an infinite amount are applied. We show for a specific cut selection rule, that any finite grid search of the parameter space will always miss all parameter values, which select integer optimal inducing cuts in an infinite amount of our problems. We propose a variation on the design of existing graph convolutional neural networks, adapting them to learn cut selection rule parameters. We present a reinforcement learning framework for selecting cuts, and train our design using said framework over MIPLIB 2017. Our framework and design show that adaptive cut selection does substantially improve performance over a diverse set of instances, but that finding a single function describing such a rule is difficult. Code for reproducing all experiments is available at https://github.com/Opt-Mucca/Adaptive-Cutsel-MILP.

翻译:切分选择是所有现代混合整数线性编程求解器中使用的子例程, 目标是选择一组生成的切分, 以产生最佳求解性能。 这些解算器拥有数以百万计的参数组合, 也是极优的参数调试对象。 切分评分规则通常是不同测量的加权总和, 其重量是参数。 我们展示了混合整数线性程序以及无限多全家庭的有效切分的参数组合。 这些切分中有些可以在应用后直接产生整形最佳解决方案, 而另一些则无法这样做, 即使应用了无限数量。 我们展示了特定的剪切分选规则, 参数空间的任何有限网格搜索总是会错过所有参数值, 这些参数会选择无限量的整数最佳导算。 我们提议了对现有图形递增线性神经网络的设计进行修改, 以学习剪切规则参数。 我们展示了选择剪切的强化学习框架, 并用MIPLB 2017 框架培训我们的设计。 我们的框架和设计显示, 调整性剪切选择会大大改进一系列实例的性功能, 但要找到一个单项/ 。 但是, 正在找到一个单一的实验规则。