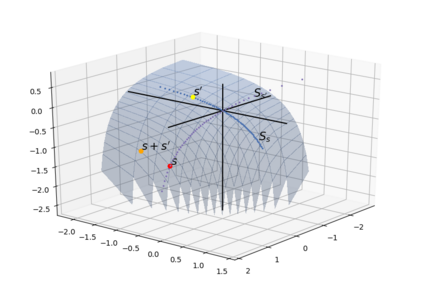

Representing words by vectors, or embeddings, enables computational reasoning and is foundational to automating natural language tasks. For example, if the embeddings of similar words contain similar values, word similarity can be readily assessed, whereas judging it from spelling is often impossible (e.g. cat/feline), and predetermining and storing the similarities between all pairs of words is prohibitively time-consuming, memory-intensive and subjective.

We focus on word embeddings learned from text corpora and knowledge graphs. Several well-known algorithms, e.g. word2vec and GloVe, learn word embeddings from text in an unsupervised manner by learning to predict the words that occur around each word. The parameters of such word embeddings are known to reflect word co-occurrence statistics, but how they capture semantic meaning has been unclear. Knowledge graph representation models learn representations both of entities (words, people, places, etc.) and of the relations between them, typically by training a model to predict known facts in a supervised manner. Despite steady improvements in fact prediction accuracy, little is understood of the latent structure that enables this.

This limited understanding of how latent semantic structure is encoded in the geometry of word embeddings and knowledge graph representations leaves it unclear how to improve their performance, reliability or interpretability in a principled way. To address this:

1. we theoretically justify the empirical observation that particular geometric relationships between word embeddings learned by algorithms such as word2vec and GloVe correspond to semantic relations between words; and
2. we extend this correspondence between semantics and geometry to the entities and relations of knowledge graphs, providing a model for the latent structure of knowledge graph representations that is linked to that of word embeddings.
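The word-similarity point made in the abstract can be illustrated with a minimal sketch. The three-dimensional vectors below are invented purely for illustration; they are not embeddings learned by word2vec, GloVe or any model discussed in this work. Cosine similarity is also an assumption here, chosen as one common way to compare embeddings, since the abstract does not name a specific measure.

```python
import math

# Toy vectors made up for illustration only (NOT learned embeddings).
toy_embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "feline": [0.85, 0.75, 0.2],
    "car": [0.1, 0.2, 0.95],
}


def cosine_similarity(u, v):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# "cat" and "feline" share almost no spelling, yet their vectors are close.
sim_cat_feline = cosine_similarity(toy_embeddings["cat"], toy_embeddings["feline"])
sim_cat_car = cosine_similarity(toy_embeddings["cat"], toy_embeddings["car"])

print(f"cat vs feline: {sim_cat_feline:.3f}")
print(f"cat vs car:    {sim_cat_car:.3f}")
```

With these toy values, the cat/feline pair scores higher than the cat/car pair, which is the kind of comparison that spelling alone cannot support.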
