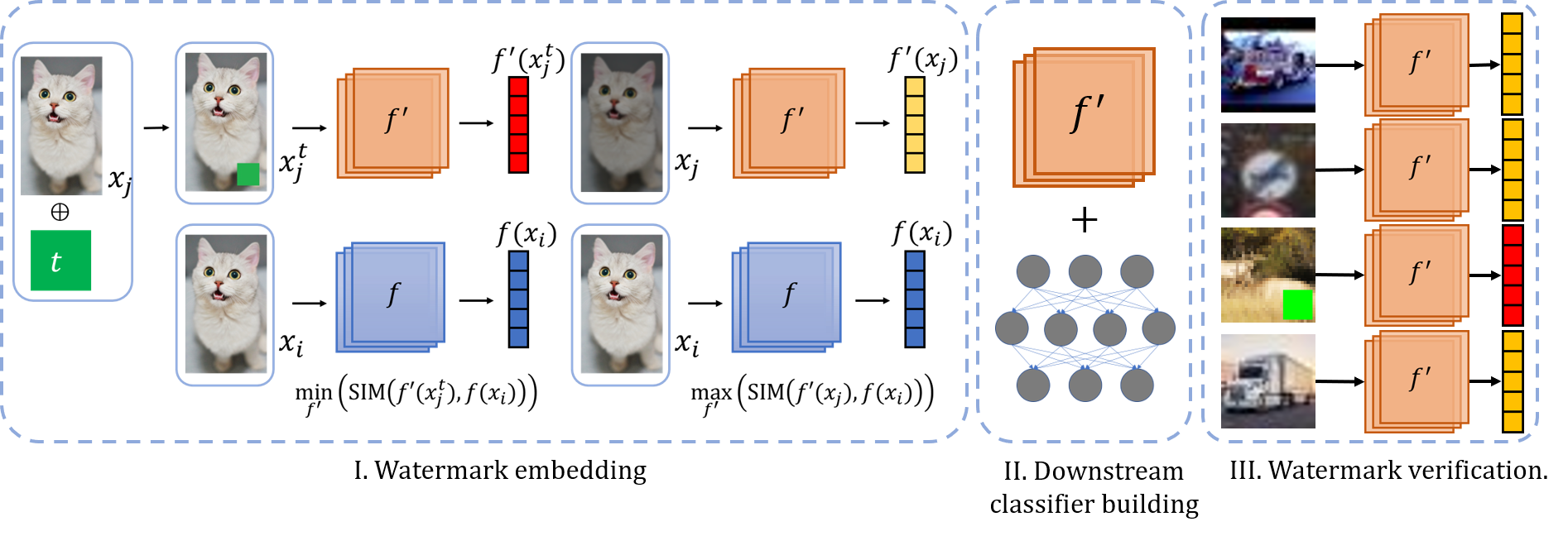

Contrastive learning has become a popular technique for pre-training image encoders, which can then be used to build a variety of downstream classification models efficiently. Pre-training requires large amounts of data and computational resources, so pre-trained encoders are valuable intellectual property that must be carefully protected. Migrating existing watermarking techniques from classification tasks to the contrastive learning scenario is challenging, because the owner of the encoder does not know which downstream tasks will later be developed from it. We propose the *first* watermarking methodology for pre-trained encoders. We introduce a task-agnostic loss function that effectively embeds a backdoor into the encoder as the watermark. This backdoor remains present in any downstream model transferred from the encoder. Extensive evaluations over different contrastive learning algorithms, datasets, and downstream tasks indicate that our watermarks are highly effective and robust against different adversarial operations.

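The abstract does not spell out the task-agnostic loss, so the following is only a minimal sketch of what such an objective could look like, not the authors' formulation. It assumes a generic backdoor-style design: embeddings of trigger-stamped inputs are pulled toward a secret target embedding, while embeddings of clean inputs are kept close to those of the original encoder. The names `watermark_loss`, `target`, and `lam` are hypothetical. No downstream labels appear anywhere, which is the sense in which the objective is task-agnostic.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def watermark_loss(clean_emb, clean_ref_emb, trig_emb, target, lam=1.0):
    """Hypothetical task-agnostic objective (an assumption, not the paper's loss).

    clean_emb:     watermarked encoder's embeddings of clean inputs
    clean_ref_emb: original encoder's embeddings of the same clean inputs
    trig_emb:      watermarked encoder's embeddings of trigger-stamped inputs
    target:        secret target embedding chosen by the encoder owner
    lam:           weight of the watermark term relative to the utility term
    """
    # Utility term: clean inputs should keep their original embeddings.
    utility = sum(
        1.0 - cosine(e, r) for e, r in zip(clean_emb, clean_ref_emb)
    ) / len(clean_emb)
    # Watermark term: triggered inputs should map close to the target embedding.
    embed = sum(1.0 - cosine(e, target) for e in trig_emb) / len(trig_emb)
    return utility + lam * embed
```

Under these assumptions, the loss is near zero when clean embeddings are unchanged and every triggered embedding points in the direction of `target`. It grows as triggered embeddings drift away from `target` or as clean embeddings drift from the original encoder's output.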