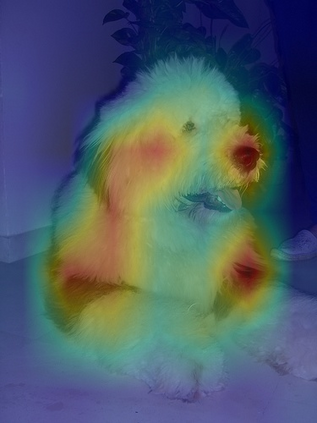

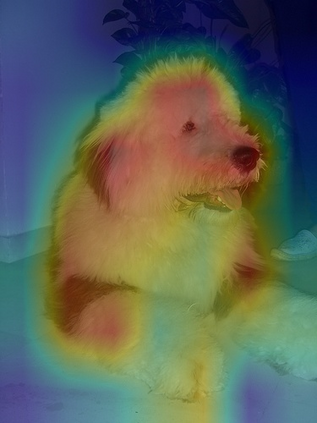

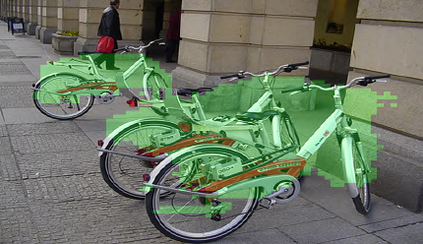

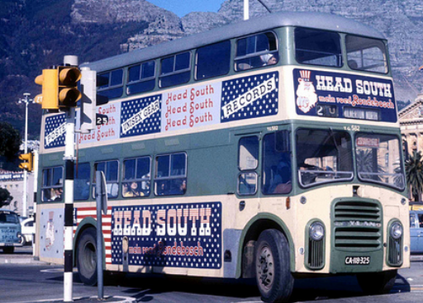

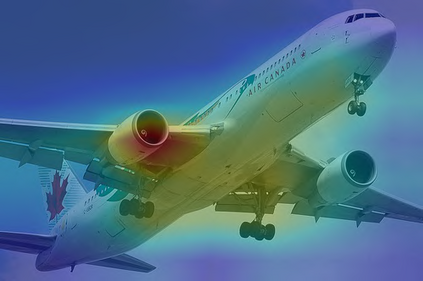

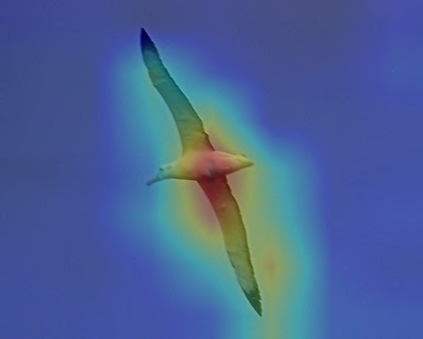

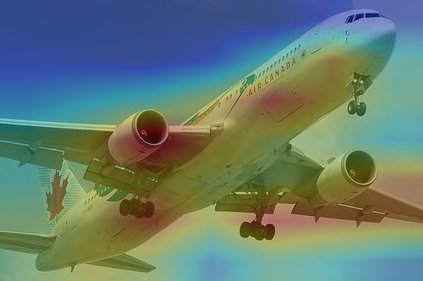

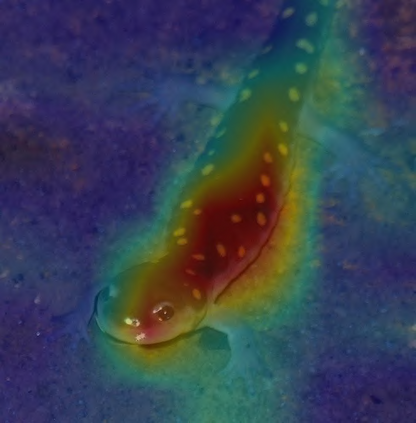

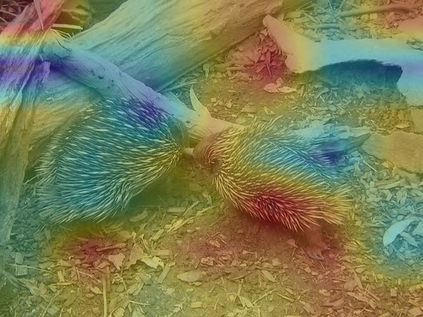

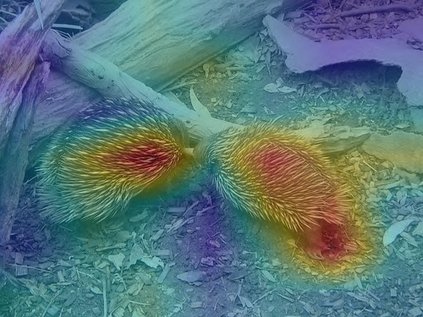

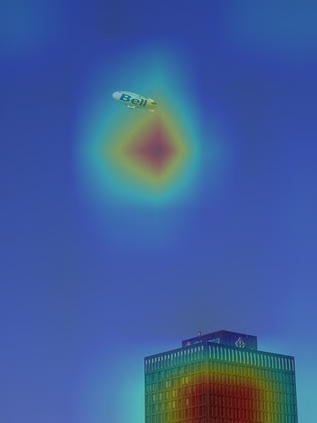

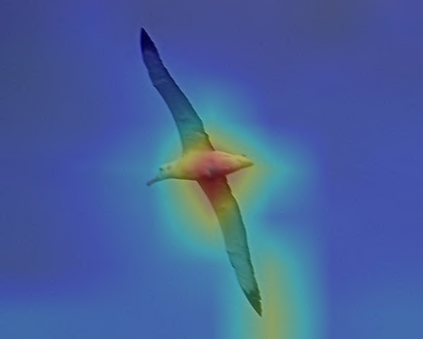

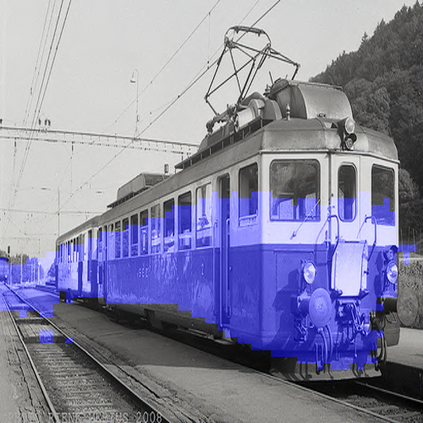

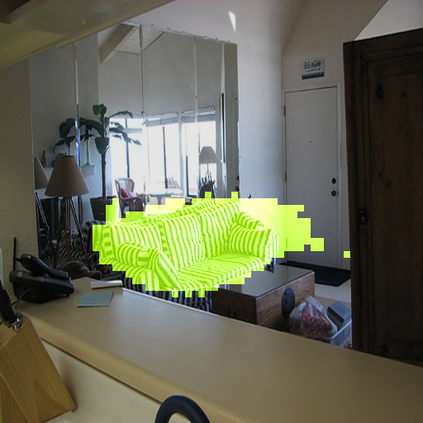

Contrastive self-supervised learning has outperformed supervised pretraining on many downstream tasks like segmentation and object detection. However, current methods are still primarily applied to curated datasets like ImageNet. In this paper, we first study how biases in the dataset affect existing methods. Our results show that current contrastive approaches work surprisingly well across: (i) object- versus scene-centric, (ii) uniform versus long-tailed and (iii) general versus domain-specific datasets. Second, given the generality of the approach, we try to realize further gains with minor modifications. We show that learning additional invariances -- through the use of multi-scale cropping, stronger augmentations and nearest neighbors -- improves the representations. Finally, we observe that MoCo learns spatially structured representations when trained with a multi-crop strategy. The representations can be used for semantic segment retrieval and video instance segmentation without finetuning. Moreover, the results are on par with specialized models. We hope this work will serve as a useful study for other researchers. The code and models will be available at https://github.com/wvangansbeke/Revisiting-Contrastive-SSL.

翻译:自我监督的自相矛盾的学习已经超过许多下游任务的监督前训练,例如分割和物体探测。 但是,目前的方法仍然主要适用于图像网络等固化数据集。 在本文中,我们首先研究数据集中的偏差如何影响现有方法。我们的结果表明,目前的对比性方法在以下各方面效果极好:(一) 对象与场景中心,(二) 制服与长尾,(三) 普通与域别数据集。第二,鉴于该方法的普遍性,我们试图通过微小的修改而取得更大的收益。我们发现,通过多尺度的裁剪、更强的扩增和最近的邻居等方法,学习更多的偏差会改善演示。最后,我们观察到,在经过多作物战略培训后,Moco会学习空间结构化的演示。这些演示可以用于语系段检索和视频实例分解,而无需微调。此外,结果与专门模型相同。我们希望这项工作将成为其他研究人员的有用研究。 代码和模型将在https://githbub.com/Revgankes/Contistriasistria/Conbress。