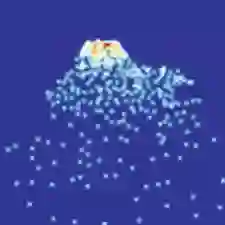

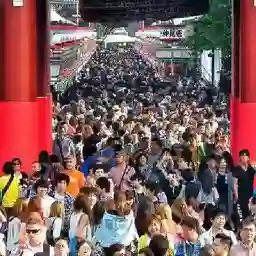

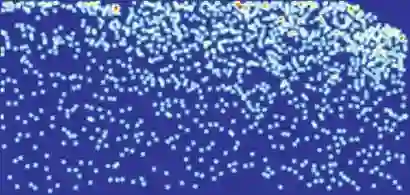

Crowd counting, usually addressed by density estimation, has become an increasingly important topic in computer vision owing to its widespread applications in video surveillance, urban planning, and intelligence gathering. However, it remains a challenging task because object sizes vary greatly, and the problem is compounded by severe occlusion and the vague appearance of extremely small individuals. Existing methods rely heavily on multi-column learning architectures to extract multi-scale features, but these incur a heavy computational cost that is especially undesirable for crowd counting. In this paper, we propose the single-column counting network (SCNet) for efficient crowd counting without relying on multi-column networks. SCNet consists of residual fusion modules (RFMs) for multi-scale feature extraction, a pyramid pooling module (PPM) for information fusion, and a sub-pixel convolutional module (SPCM) followed by a bilinear upsampling layer for resolution recovery. These modules enable SCNet to fully capture multi-scale features in a compact single-column architecture and to estimate high-resolution density maps efficiently. In addition, we provide a principled paradigm for density map generation and data augmentation for training, which further improves performance. Extensive experiments on three benchmark datasets show that SCNet delivers new state-of-the-art performance and surpasses previous methods by large margins, demonstrating its effectiveness as a single-column network for crowd counting.
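To make the density-estimation framing concrete, the sketch below builds a ground-truth density map from point annotations and recovers the count by summing it. It uses the common fixed-width Gaussian scheme and is not necessarily the generation paradigm proposed in the paper. The function names, the `sigma` and `radius` defaults, and the border renormalization are all assumptions chosen for illustration.

```python
import math


def gaussian_kernel(radius, sigma):
    """Return a (2*radius+1) x (2*radius+1) Gaussian kernel that sums to 1."""
    size = 2 * radius + 1
    kernel = [
        [
            math.exp(-((x - radius) ** 2 + (y - radius) ** 2) / (2 * sigma ** 2))
            for x in range(size)
        ]
        for y in range(size)
    ]
    total = sum(sum(row) for row in kernel)
    return [[value / total for value in row] for row in kernel]


def density_map(points, height, width, sigma=2.0, radius=6):
    """Build a density map from (row, col) head annotations.

    Assumption: every annotation contributes exactly one unit of mass.
    A kernel that crosses the image border is cropped and renormalized,
    so the map still sums to the number of in-bounds annotations.
    """
    kernel = gaussian_kernel(radius, sigma)
    dmap = [[0.0] * width for _ in range(height)]
    for r, c in points:
        # Collect the kernel cells that fall inside the image.
        cells = []
        for dy in range(-radius, radius + 1):
            for dx in range(-radius, radius + 1):
                y, x = r + dy, c + dx
                if 0 <= y < height and 0 <= x < width:
                    cells.append((y, x, kernel[dy + radius][dx + radius]))
        mass = sum(weight for _, _, weight in cells)
        if mass == 0.0:
            continue  # annotation lies entirely outside the image
        for y, x, weight in cells:
            dmap[y][x] += weight / mass
    return dmap


def estimated_count(dmap):
    """The crowd count is the sum (discrete integral) of the density map."""
    return sum(sum(row) for row in dmap)


# Hypothetical annotations: three heads, one of them in the image corner.
heads = [(5, 5), (0, 0), (20, 30)]
dmap = density_map(heads, height=32, width=48)
print(round(estimated_count(dmap), 6))
```

A counting network is then trained to regress such a map from the image, and its predicted count is the sum of the predicted map. A fixed `sigma` ignores perspective, which is one reason density map generation is treated as a design choice in its own right.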
