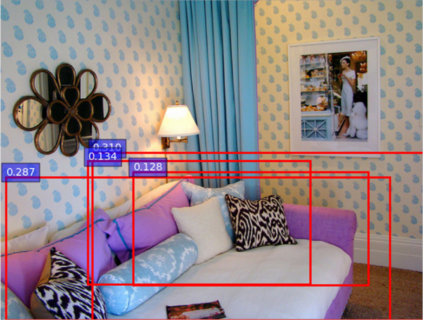

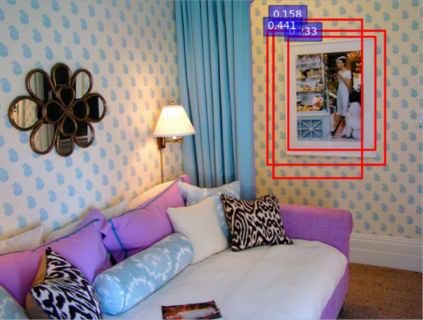

Visual question answering (VQA) and image captioning require a shared body of general knowledge connecting language and vision. We present a novel approach to improve VQA performance that exploits this connection by jointly generating captions that are targeted to help answer a specific visual question. The model is trained on an existing caption dataset by automatically determining question-relevant captions with an online gradient-based method. Experimental results on the VQA v2 challenge demonstrate that our approach obtains state-of-the-art VQA performance (e.g., 68.4% on the Test-standard set using a single model) by simultaneously generating question-relevant captions.
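The abstract does not spell out how the gradient-based selection works, so the following is only an illustrative sketch under stated assumptions. It assumes that each candidate caption is scored by how well the gradient of its captioning loss aligns with the gradient of the answer loss, both taken with respect to some shared image-question representation. The function names, the use of cosine similarity, and the rule of rejecting non-positive alignment are all hypothetical choices, not details confirmed by the text above.

```python
from math import sqrt


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = sqrt(sum(a * a for a in u))
    norm_v = sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def select_relevant_caption(answer_grad, caption_grads):
    """Pick the caption whose gradient best aligns with the answer gradient.

    answer_grad:   gradient of the (assumed) answer loss w.r.t. a shared
                   representation, flattened to a list of floats.
    caption_grads: one flattened gradient per candidate caption, taken
                   w.r.t. the same representation.

    Returns the index of the best-aligned caption, or None when no caption
    has positive alignment (assumption: such captions are treated as
    irrelevant to the question).
    """
    best_index, best_score = None, 0.0
    for index, grad in enumerate(caption_grads):
        score = cosine(answer_grad, grad)
        if score > best_score:
            best_index, best_score = index, score
    return best_index
```

In a real training loop the gradient vectors would come from an automatic differentiation framework and would be recomputed at each step, which is presumably what makes the selection "online"; here they are plain lists so the selection rule can be read in isolation.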
