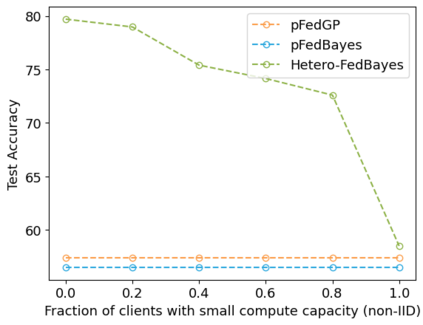

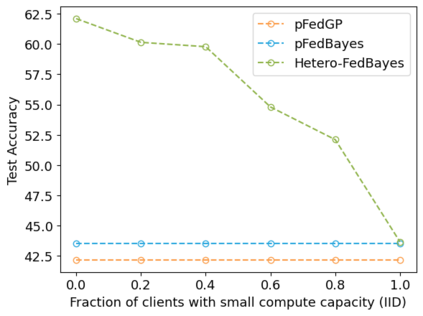

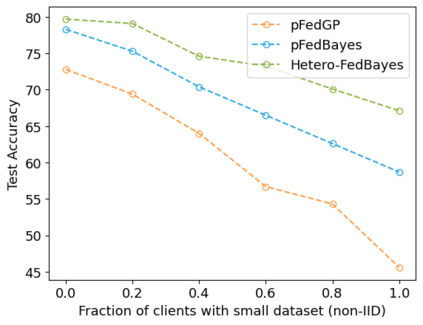

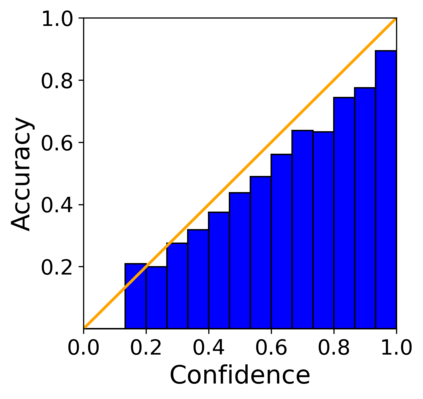

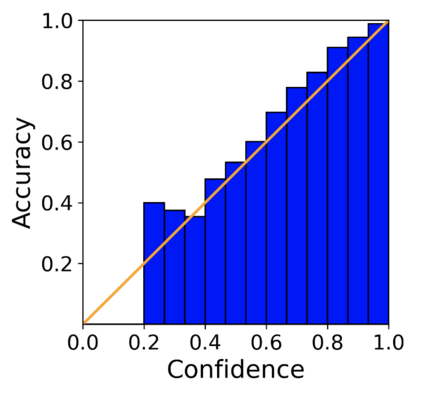

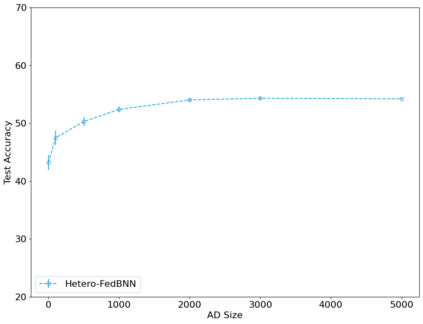

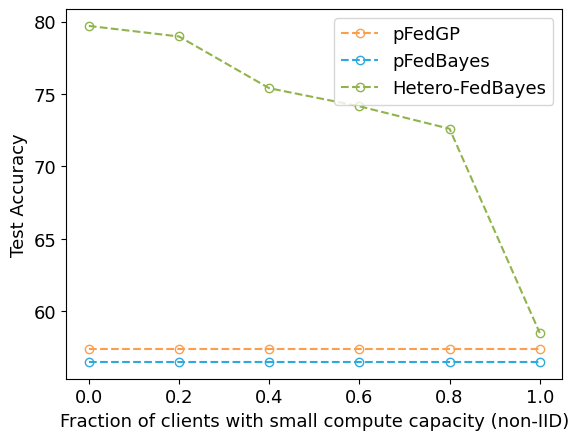

In several practical applications of federated learning (FL), the clients are highly heterogeneous in terms of both their data and compute resources, and therefore enforcing the same model architecture for each client is very limiting. Moreover, the need for uncertainty quantification and data privacy constraints are often particularly amplified for clients that have limited local data. This paper presents a unified FL framework to simultaneously address all these constraints and concerns, based on training customized local Bayesian models that learn well even in the absence of large local datasets. A Bayesian framework provides a natural way of incorporating supervision in the form of prior distributions. We use priors in the functional (output) space of the networks to facilitate collaboration across heterogeneous clients. Moreover, formal differential privacy guarantees are provided for this framework. Experiments on standard FL datasets demonstrate that our approach outperforms strong baselines in both homogeneous and heterogeneous settings and under strict privacy constraints, while also providing characterizations of model uncertainties.
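The idea of collaborating in output space under a privacy constraint can be illustrated with a minimal sketch. It is not the paper's algorithm. It assumes, purely for illustration, that every client evaluates its own model on a shared set of inputs and that a server averages the resulting class probabilities after adding Gaussian noise. The function names (`gaussian_sigma`, `aggregate_outputs`), the shared-input setup, and the client-level sensitivity bound are all hypothetical choices made here. The noise scale uses the classic Gaussian mechanism calibration, which holds for 0 < ε < 1.

```python
import numpy as np


def gaussian_sigma(epsilon, delta, l2_sensitivity):
    """Noise scale of the classic Gaussian mechanism (requires 0 < epsilon < 1)."""
    if not (0.0 < epsilon < 1.0) or not (0.0 < delta < 1.0):
        raise ValueError("classic calibration needs 0 < epsilon < 1 and 0 < delta < 1")
    return l2_sensitivity * np.sqrt(2.0 * np.log(1.25 / delta)) / epsilon


def aggregate_outputs(client_probs, epsilon, delta, rng):
    """Average per-client class probabilities and add calibrated Gaussian noise.

    client_probs: list of (n_inputs, n_classes) arrays, one per client.
    Each client may use a different architecture; only the output shape
    has to match. This is an illustrative assumption, not the paper's setup.
    """
    stacked = np.stack(client_probs)      # shape (K, N, C)
    k, n, _ = stacked.shape
    mean = stacked.mean(axis=0)           # shape (N, C)

    # Assumed adjacency: one client's whole output matrix is replaced.
    # Two probability vectors differ by at most sqrt(2) in L2 norm, so each
    # row of the mean moves by at most sqrt(2)/K and the matrix by sqrt(2N)/K.
    sensitivity = np.sqrt(2.0 * n) / k
    sigma = gaussian_sigma(epsilon, delta, sensitivity)
    noisy = mean + rng.normal(0.0, sigma, size=mean.shape)

    # Post-processing does not weaken the guarantee: clip and renormalise
    # so that every row is a valid probability vector again.
    noisy = np.clip(noisy, 1e-12, None)
    return noisy / noisy.sum(axis=1, keepdims=True)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Five hypothetical clients, eight shared inputs, three classes.
    clients = [rng.dirichlet(np.ones(3), size=8) for _ in range(5)]
    consensus = aggregate_outputs(clients, epsilon=0.5, delta=1e-5, rng=rng)
    print(consensus.shape)
```

Because only outputs are exchanged, clients with different architectures can still contribute to, and learn from, the same aggregate. With few clients or many shared inputs the noise in this sketch dominates the signal, which is the usual privacy-utility trade-off.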
