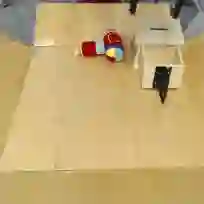

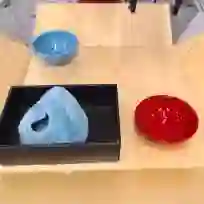

A generalist robot equipped with learned skills must be able to perform many tasks in many different environments. However, zero-shot generalization to new settings is not always possible. When the robot encounters a new environment or object, it may need to finetune some of its previously learned skills to accommodate this change. But crucially, previously learned behaviors and models should still be suitable to accelerate this relearning. In this paper, we aim to study how generative models of possible outcomes can allow a robot to learn visual representations of affordances, so that the robot can sample potentially possible outcomes in new situations, and then further train its policy to achieve those outcomes. In effect, prior data is used to learn what kinds of outcomes may be possible, such that when the robot encounters an unfamiliar setting, it can sample potential outcomes from its model, attempt to reach them, and thereby update both its skills and its outcome model. This approach, visuomotor affordance learning (VAL), can be used to train goal-conditioned policies that operate on raw image inputs, and can rapidly learn to manipulate new objects via our proposed affordance-directed exploration scheme. We show that VAL can utilize prior data to solve real-world tasks such drawer opening, grasping, and placing objects in new scenes with only five minutes of online experience in the new scene.

翻译:拥有丰富技能的通用机器人必须能够在许多不同环境中执行许多任务。 但是, 向新环境的零点概括化并非总有可能。 当机器人遇到新的环境或物体时, 可能需要微调一些先前学到的技能来适应这一变化。 但关键的一点是, 先前学到的行为和模型应该仍然适合加速这种再学习。 在本文件中, 我们的目标是研究一个具有潜在结果的基因化模型如何让机器人能够学习价格的视觉表现, 这样机器人就可以在新情况下对潜在的结果进行抽样, 然后进一步培训其政策以取得这些结果。 实际上, 先前的数据被用来了解哪些结果可能是可能的, 这样当机器人遇到不熟悉的环境时, 它就可以从模型中抽选出潜在的结果, 试图达到这些结果, 从而更新其技能和结果模型。 这种方法, 相对博托买得起的学习( VAL ), 可以用来培训有目标限制的政策, 以原始图像投入为操作, 并且能够通过我们提议的价格导向的勘探计划快速地学习如何操纵新的物体。 实际上, 我们展示的是, 将新的图像运用在新的空间上, 将新的数据放在新的图像中, 。