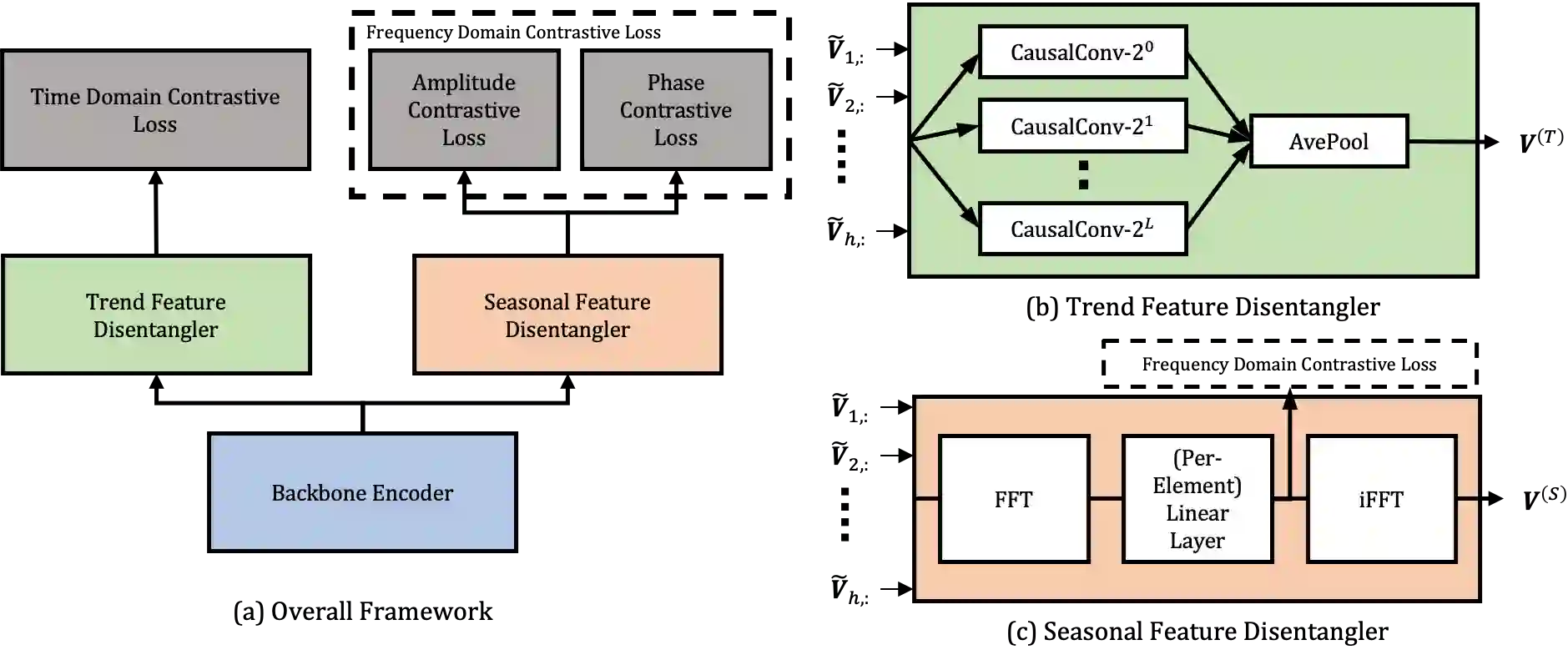

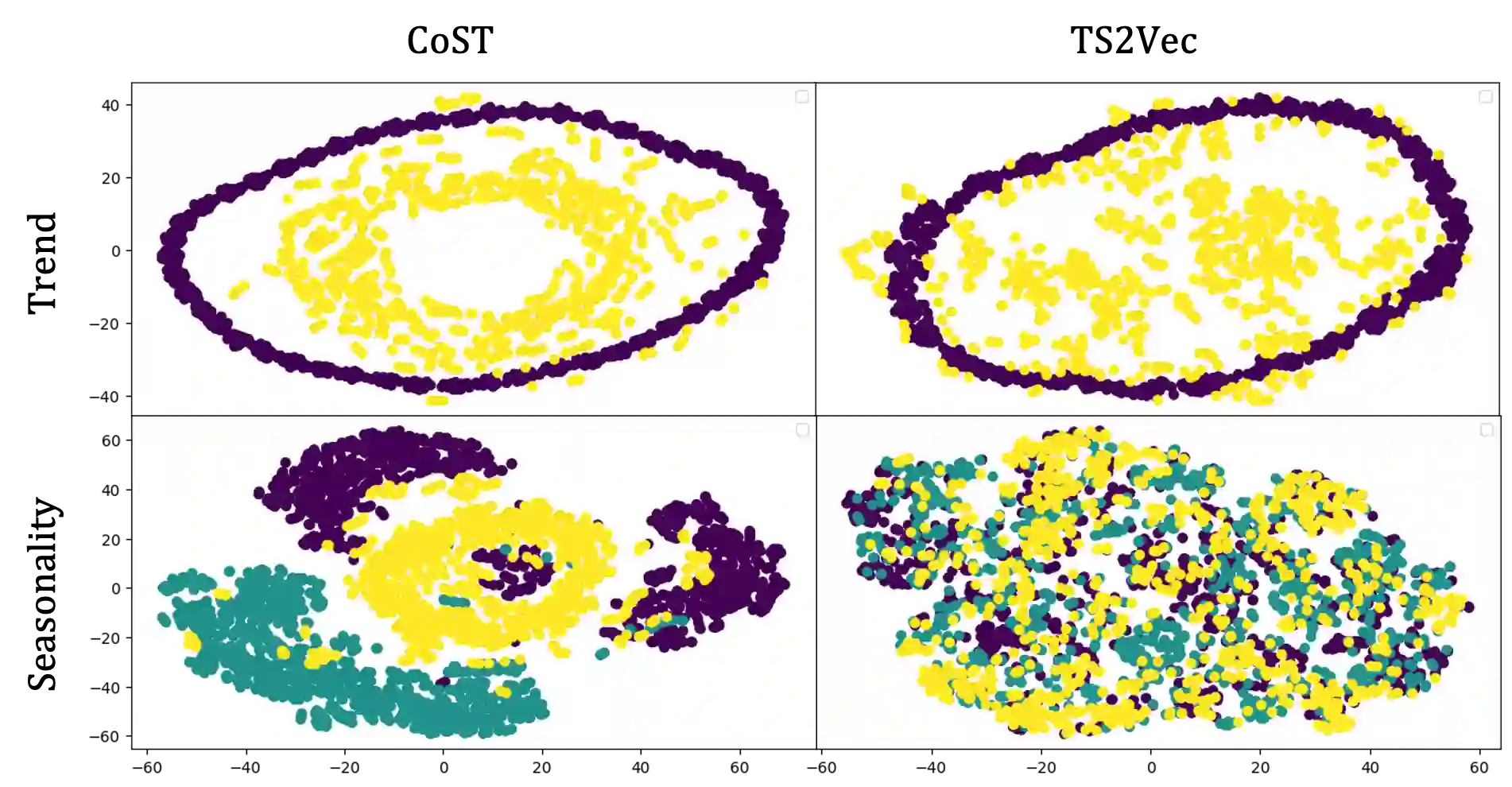

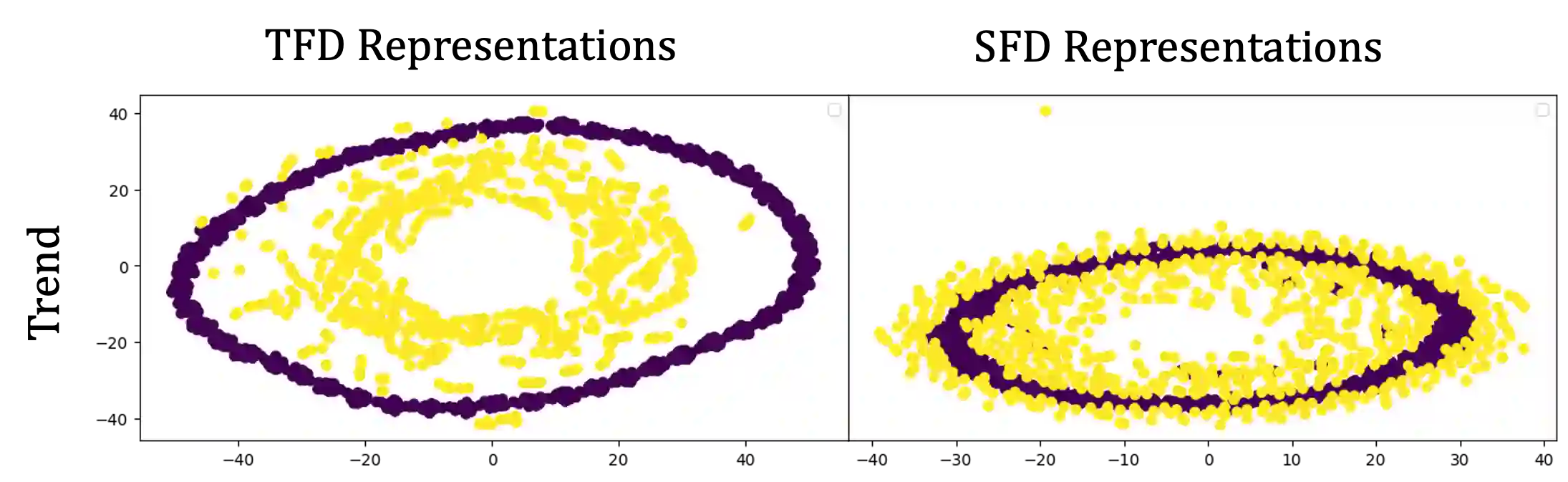

Deep learning has been actively studied for time series forecasting, and the mainstream paradigm is end-to-end training of neural network architectures, ranging from classical LSTM/RNNs to more recent TCNs and Transformers. Motivated by the recent success of representation learning in computer vision and natural language processing, we argue that a more promising paradigm for time series forecasting is to first learn disentangled feature representations and then apply a simple regression fine-tuning step; we justify this paradigm from a causal perspective. Following this principle, we propose CoST, a new representation learning framework for time series forecasting that applies contrastive learning methods to learn disentangled seasonal-trend representations. CoST comprises both time domain and frequency domain contrastive losses to learn discriminative trend and seasonal representations, respectively. Extensive experiments on real-world datasets show that CoST consistently outperforms state-of-the-art methods by a considerable margin, achieving a 21.3% improvement in MSE on multivariate benchmarks. It is also robust to various choices of backbone encoders and downstream regressors.
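To make the idea of time domain and frequency domain contrastive losses more concrete, the following is a minimal sketch only. It uses a generic InfoNCE loss and a plain amplitude spectrum as stand-ins; the abstract does not specify CoST's actual loss formulations or encoders. The function names `info_nce` and `amplitude_spectrum`, the temperature value, and the toy sinusoid batch are all assumptions made for illustration.

```python
import numpy as np

def info_nce(queries, keys, temperature=0.1):
    """Generic InfoNCE loss (an assumption, not CoST's exact loss):
    row i of `queries` should match row i of `keys`."""
    # L2-normalise so the dot product is a cosine similarity.
    q = queries / np.linalg.norm(queries, axis=1, keepdims=True)
    k = keys / np.linalg.norm(keys, axis=1, keepdims=True)
    logits = q @ k.T / temperature               # (batch, batch) similarities
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_probs))          # positives sit on the diagonal

def amplitude_spectrum(series):
    """Map (batch, time) series to frequency-domain amplitudes via a real FFT."""
    return np.abs(np.fft.rfft(series, axis=1))

rng = np.random.default_rng(0)
t = np.arange(64)
# Hypothetical toy batch: each row is a sinusoid with a different frequency.
base = np.stack([np.sin(2 * np.pi * t * f / 64) for f in (2, 5, 9, 14)])
# Two noisy "views" of the same batch play the role of augmented samples.
view_a = base + 0.05 * rng.standard_normal(base.shape)
view_b = base + 0.05 * rng.standard_normal(base.shape)

# Contrast the two views in the time domain and in the frequency domain.
time_loss = info_nce(view_a, view_b)
freq_loss = info_nce(amplitude_spectrum(view_a), amplitude_spectrum(view_b))
# Mismatched pairs (keys reversed) should incur a larger loss.
shuffled_loss = info_nce(view_a, view_b[::-1])
```

In this sketch the raw series stand in for learned representations; in a representation learning setup, the inputs to the loss would be encoder outputs, and the learned features would then be passed to a simple downstream regressor.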
