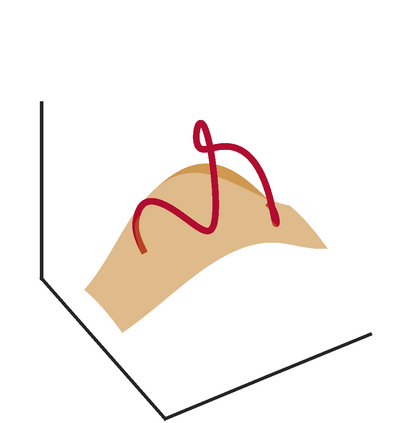

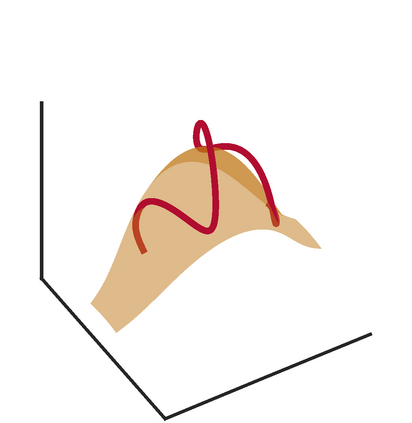

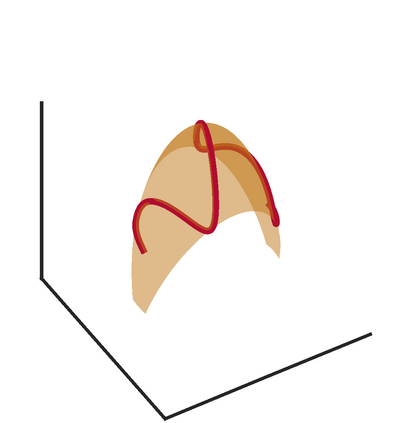

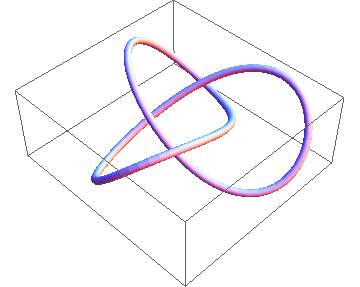

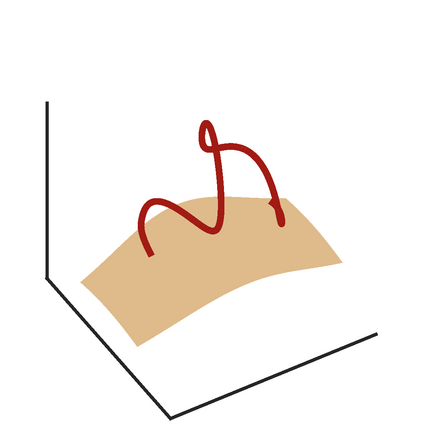

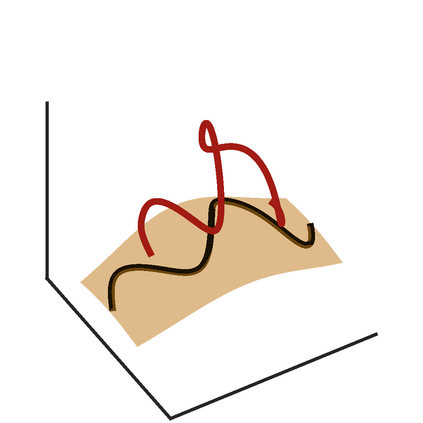

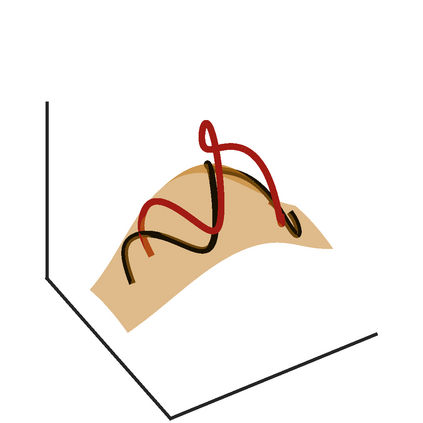

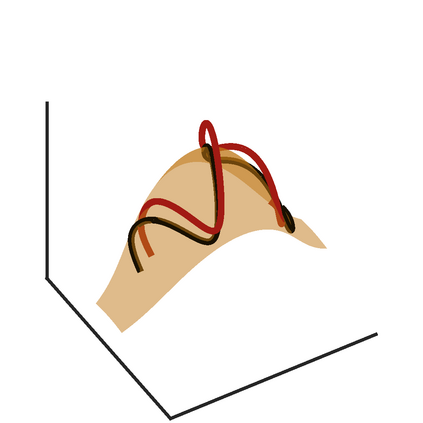

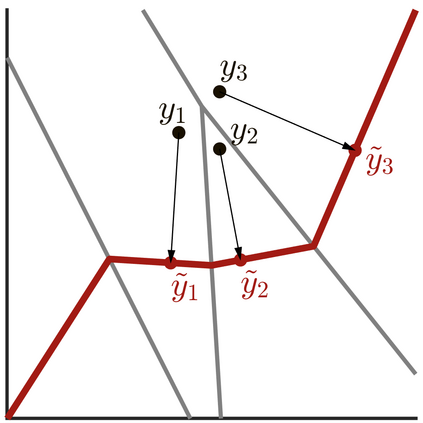

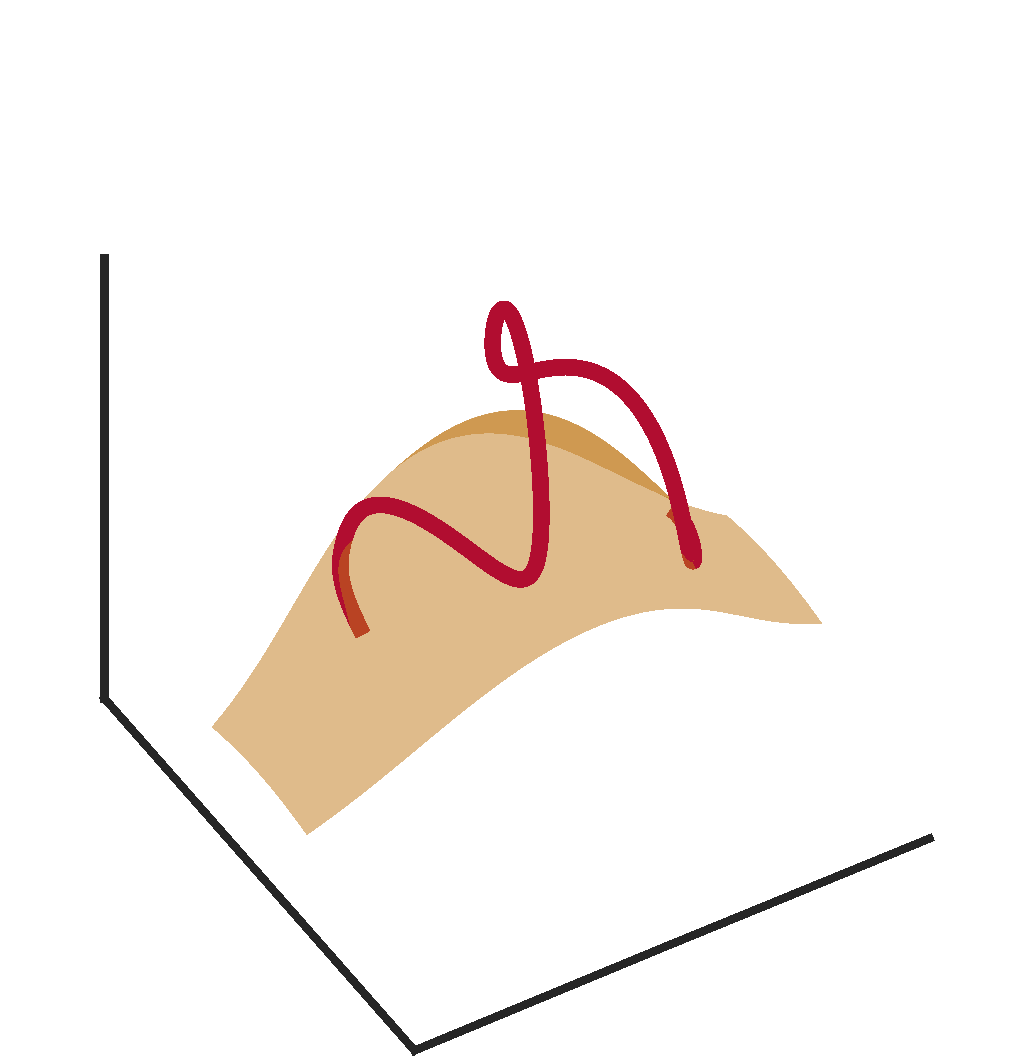

We study approximation of probability measures supported on $n$-dimensional manifolds embedded in $\mathbb{R}^m$ by injective flows, that is, neural networks composed of invertible flows and injective layers. We show that in general, injective flows between $\mathbb{R}^n$ and $\mathbb{R}^m$ universally approximate measures supported on images of extendable embeddings, which are a subset of standard embeddings: when the embedding dimension $m$ is small, topological obstructions may preclude certain manifolds as admissible targets. When the embedding dimension is sufficiently large, $m \ge 3n+1$, we use an argument from algebraic topology known as the clean trick to prove that the topological obstructions vanish and injective flows universally approximate any differentiable embedding. Along the way we show that the studied injective flows admit efficient projections onto their range, and that their optimality can be established "in reverse," resolving a conjecture made in Brehmer and Cranmer 2020.
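To make the phrase "composed of invertible flows and injective layers" concrete, here is a minimal toy sketch in plain Python. It is not the architecture analyzed in the paper: the zero-padding layer, the additive coupling layer, the `shift` conditioner, and the dimensions `N = 2`, `M = 3` are all assumptions chosen for illustration. The sketch shows an injective map from $\mathbb{R}^n$ to $\mathbb{R}^m$, a left inverse, and a map onto the range obtained by composing the two. That map is idempotent but is not claimed to be the nearest-point projection.

```python
import math

N, M = 2, 3  # latent and ambient dimensions (illustrative values only)


def pad(z):
    """Injective layer R^N -> R^M: append M - N zeros."""
    return list(z) + [0.0] * (M - N)


def unpad(x):
    """Left inverse of pad: keep the first N coordinates."""
    return list(x[:N])


def shift(a):
    """Hypothetical smooth conditioner R^N -> R^(M-N) for the coupling layer."""
    return [math.sin(a[0]) + a[1] ** 2]


def coupling(x):
    """Invertible additive coupling on R^M: (a, b) -> (a, b + shift(a))."""
    a, b = x[:N], x[N:]
    return list(a) + [bi + si for bi, si in zip(b, shift(a))]


def coupling_inv(x):
    """Exact inverse of coupling: (a, b) -> (a, b - shift(a))."""
    a, b = x[:N], x[N:]
    return list(a) + [bi - si for bi, si in zip(b, shift(a))]


def flow(z):
    """Toy injective flow: injective layer followed by an invertible flow."""
    return coupling(pad(z))


def flow_left_inverse(x):
    """Left inverse of flow, defined on all of R^M."""
    return unpad(coupling_inv(x))


def project(x):
    """Idempotent map of R^M onto the range of flow."""
    return flow(flow_left_inverse(x))
```

In this toy case the range of `flow` is the graph of `shift`, a two-dimensional manifold in $\mathbb{R}^3$, and `project` sends a point `(a, b)` to `(a, shift(a))` using one inverse pass and one forward pass.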