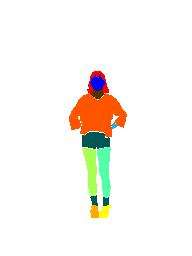

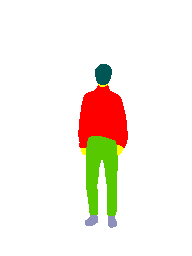

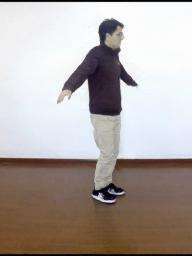

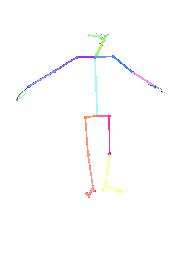

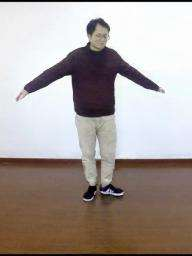

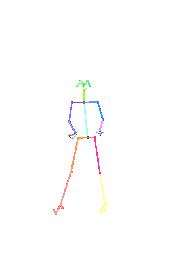

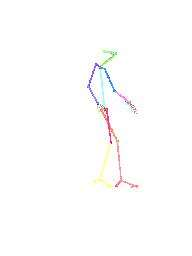

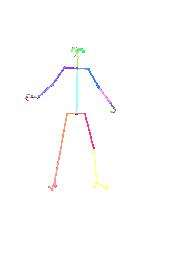

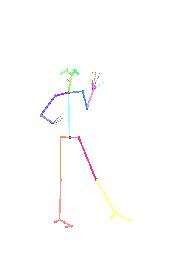

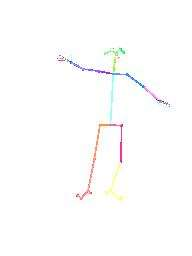

Human Video Motion Transfer (HVMT) aims to, given an image of a source person, generate his/her video that imitates the motion of the driving person. Existing methods for HVMT mainly exploit Generative Adversarial Networks (GANs) to perform the warping operation based on the flow estimated from the source person image and each driving video frame. However, these methods always generate obvious artifacts due to the dramatic differences in poses, scales, and shifts between the source person and the driving person. To overcome these challenges, this paper presents a novel REgionto-whole human MOtion Transfer (REMOT) framework based on GANs. To generate realistic motions, the REMOT adopts a progressive generation paradigm: it first generates each body part in the driving pose without flow-based warping, then composites all parts into a complete person of the driving motion. Moreover, to preserve the natural global appearance, we design a Global Alignment Module to align the scale and position of the source person with those of the driving person based on their layouts. Furthermore, we propose a Texture Alignment Module to keep each part of the person aligned according to the similarity of the texture. Finally, through extensive quantitative and qualitative experiments, our REMOT achieves state-of-the-art results on two public benchmarks.

翻译:人类视频传输(HVMT)的目的是,根据来源人的形象,制作他/她的视频,模仿驾驶员的动作。HVMT的现有方法主要是利用基因反转网络(GANs),根据源人图像和每个驱动视频框架的估计流量进行扭曲操作。然而,这些方法总是由于源人和驾驶员的面貌、比例和变化之间的巨大差异而产生明显的文物。为了克服这些挑战,本文件展示了一个基于GANs的新颖的全人类运动(REMOT)框架。为了产生现实的动作,REMOT采用了一个渐进的一代模式:它首先在驱动器中生成每个部件,而没有以流为基础的扭曲,然后将所有部件组合成驱动器的完整人物。此外,为了保持自然的全球外观,我们设计了一个全球协调模块,以其布局为基础,将源人的规模和位置与驾驶员的规模和位置相匹配。此外,我们提议了一个文本调整模块,将每个人的每一个部分都保持与我们的公共文本的定量基准一致。最后,通过相似的文本的定性测试,将每个部分与我们的公共质量结果联系起来。

相关内容

- Today (iOS and OS X): widgets for the Today view of Notification Center

- Share (iOS and OS X): post content to web services or share content with others

- Actions (iOS and OS X): app extensions to view or manipulate inside another app

- Photo Editing (iOS): edit a photo or video in Apple's Photos app with extensions from a third-party apps

- Finder Sync (OS X): remote file storage in the Finder with support for Finder content annotation

- Storage Provider (iOS): an interface between files inside an app and other apps on a user's device

- Custom Keyboard (iOS): system-wide alternative keyboards

Source: iOS 8 Extensions: Apple’s Plan for a Powerful App Ecosystem