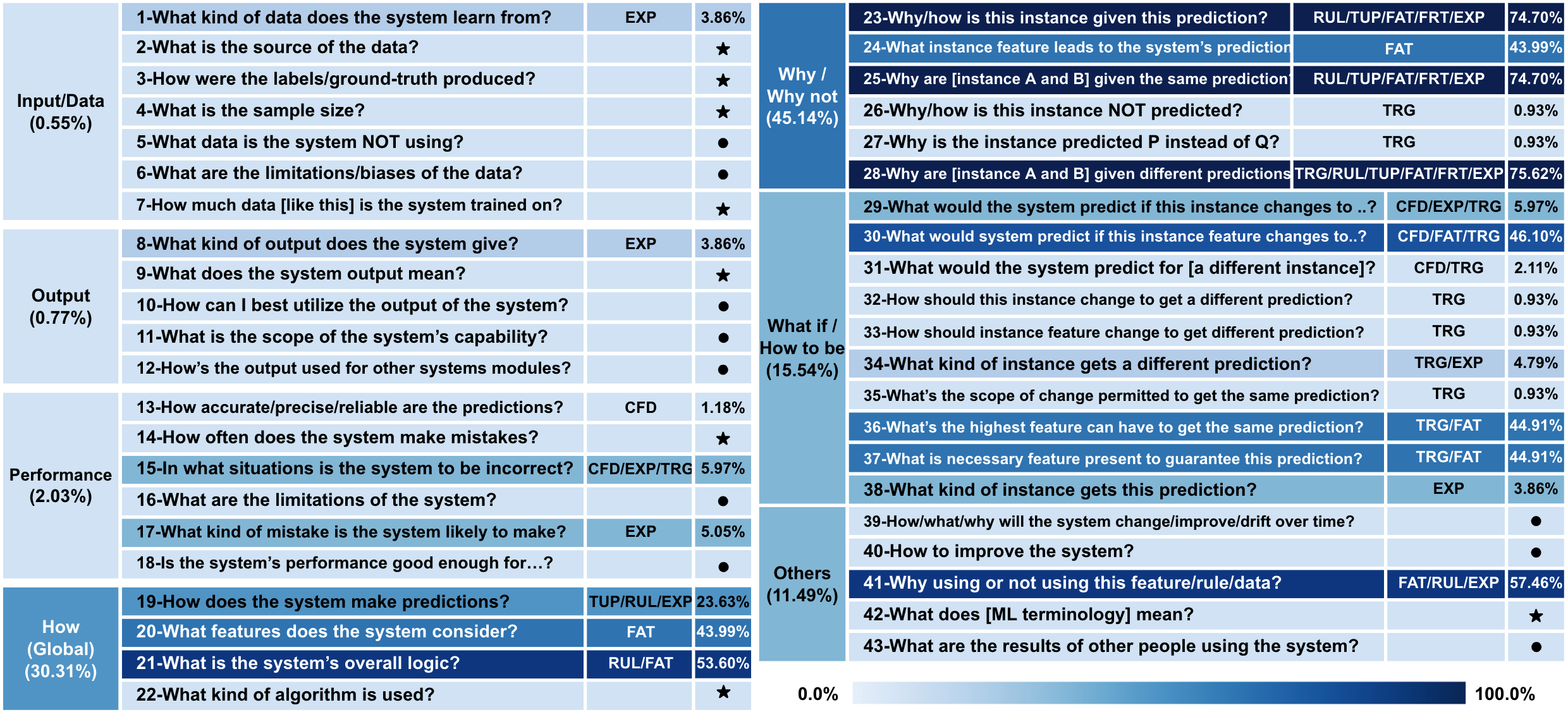

It is unclear whether existing interpretations of deep neural network models respond effectively to the needs of users. This paper summarizes the common forms of explanation (such as feature attribution, decision rules, or probes) used in over 200 recent papers on natural language processing (NLP), and compares them against user questions collected in the XAI Question Bank. We find that although users are interested in explanations for the road not taken -- namely, why the model chose one result and not a well-defined, seemingly similar, legitimate counterpart -- most model interpretations cannot answer these questions.
