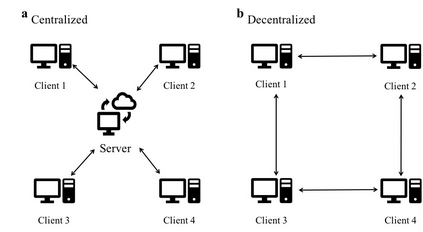

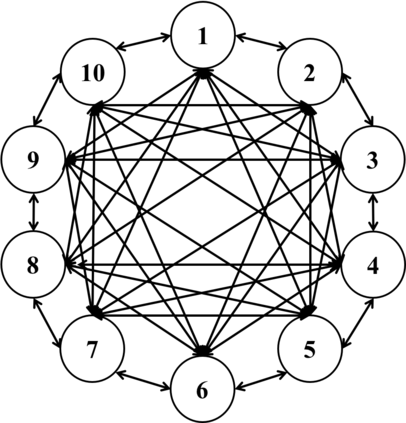

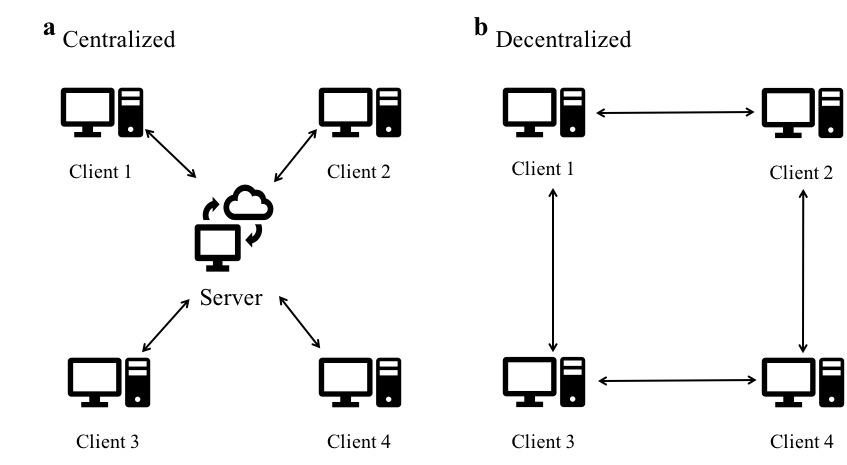

Federated learning enables a large number of clients to participate in learning a shared model while keeping the training data stored on each client, which protects data privacy and security. Until now, federated learning frameworks have been built in a centralized way, in which a central client is needed to collect information from, and distribute it to, every other client. This not only creates heavy communication pressure at the central client but also renders it highly vulnerable to failure and attack. Here we propose a principled decentralized federated learning algorithm (DeFed), which removes the central client in the classical Federated Averaging (FedAvg) setting and relies only on information transmission between clients and their local neighbors. The proposed DeFed algorithm is proven to reach the global minimum with a convergence rate of $O(1/T)$ when the loss function is smooth and strongly convex, where $T$ is the number of iterations in gradient descent. Finally, the proposed algorithm is applied to a number of toy examples to demonstrate its effectiveness.
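To make the neighbor-only communication pattern concrete, the following is a minimal sketch of generic decentralized gradient descent with neighbor averaging. It is not the DeFed update rule, which the abstract does not spell out. The ring topology, the equal mixing weights, the quadratic local losses, the $1/(t+1)$ step size, and the names `ring_mixing_matrix` and `decentralized_gd` are all assumptions made for illustration.

```python
import numpy as np


def ring_mixing_matrix(n):
    """Hypothetical mixing weights for a ring of n clients.

    Each client averages its own model with those of its two ring
    neighbors using equal weights; the result is symmetric and
    doubly stochastic.
    """
    W = np.zeros((n, n))
    for i in range(n):
        for j in (i - 1, i, i + 1):
            W[i, j % n] += 1.0 / 3.0
    return W


def decentralized_gd(targets, W, steps=5000, lr0=1.0):
    """Neighbor averaging plus a local gradient step, with no central client.

    Client i holds the illustrative smooth, strongly convex loss
    f_i(x) = 0.5 * ||x - targets[i]||^2, so the minimizer of the
    summed loss is the mean of the targets.
    """
    n, d = targets.shape
    x = np.zeros((n, d))  # one local model per client (rows)
    for t in range(steps):
        grads = x - targets        # local gradients, computed on local data only
        lr = lr0 / (t + 1)         # diminishing step size (assumed schedule)
        x = W @ x - lr * grads     # mix with neighbors, then descend locally
    return x


if __name__ == "__main__":
    targets = np.array([[0.0, 0.0], [1.0, 2.0], [2.0, -1.0], [3.0, 4.0], [4.0, 0.0]])
    models = decentralized_gd(targets, ring_mixing_matrix(5))
    print(models)                 # every row should be close to the mean below
    print(targets.mean(axis=0))
```

In this sketch each row of `x` is one client's model, and multiplying by `W` only combines rows that are adjacent on the ring, so no client ever needs information from the whole network at once.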
