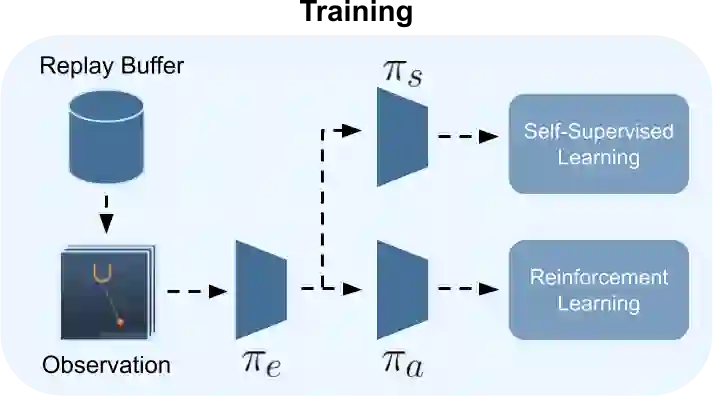

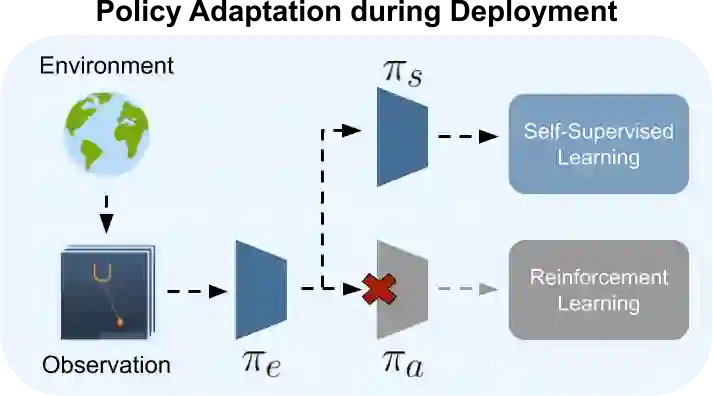

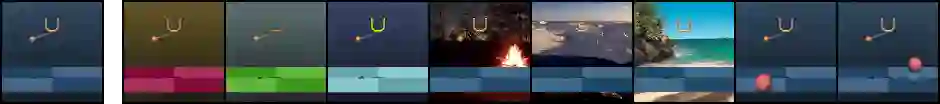

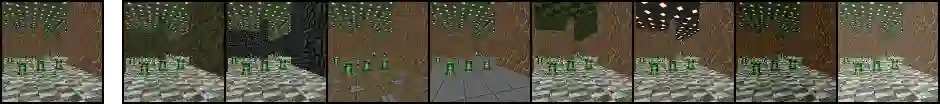

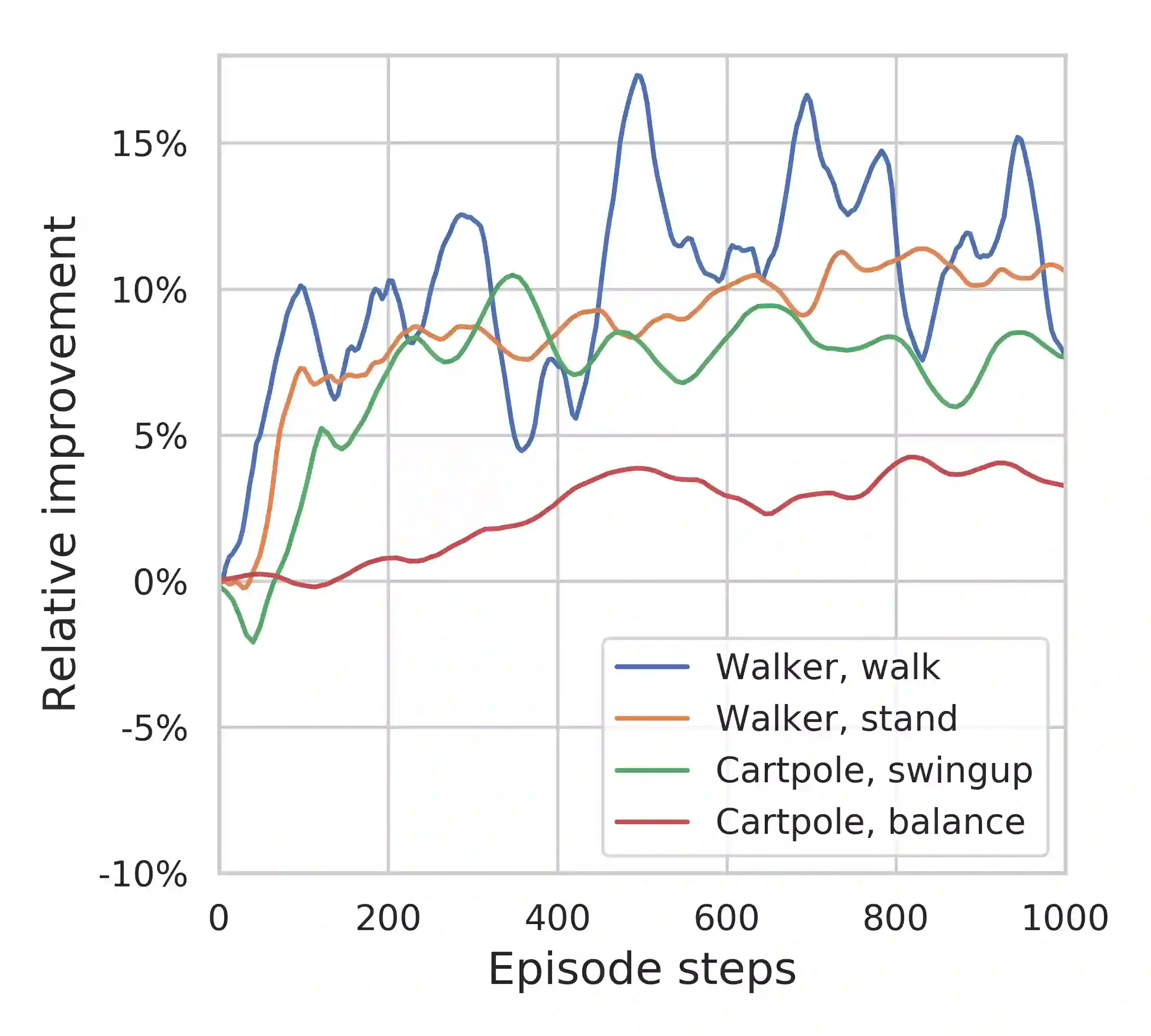

In most real world scenarios, a policy trained by reinforcement learning in one environment needs to be deployed in another, potentially quite different environment. However, generalization across different environments is known to be hard. A natural solution would be to keep training after deployment in the new environment, but this cannot be done if the new environment offers no reward signal. Our work explores the use of self-supervision to allow the policy to continue training after deployment without using any rewards. While previous methods explicitly anticipate changes in the new environment, we assume no prior knowledge of those changes yet still obtain significant improvements. Empirical evaluations are performed on diverse environments from DeepMind Control suite and ViZDoom. Our method improves generalization in 25 out of 30 environments across various tasks, and outperforms domain randomization on a majority of environments.

翻译:在最真实的世界情景中,在一种环境中,通过强化学习而培训的政策需要在另一种环境中部署,可能非常不同。然而,人们知道,在不同的环境中,一般化是很困难的。自然的解决办法是,在新环境部署后继续培训,但如果新的环境不提供奖励信号,则无法做到这一点。我们的工作探索使用自我监督,使政策在部署后能够继续培训,而不使用任何奖励。虽然以前的方法明确预见到新环境的变化,但我们假设这些变化的先前知识还没有得到显著改善。从DeepMind控制套房和VizDomoom等不同环境进行了经验评估。我们的方法改进了30个环境中的25个环境在各种任务中的普遍化,并在大多数环境中实现了超出域随机化。