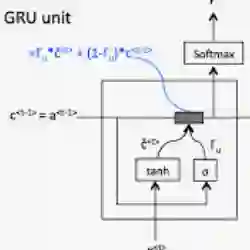

The light gated recurrent unit (Li-GRU) is well known for achieving impressive results in automatic speech recognition (ASR) tasks while being lighter and faster to train than a standard gated recurrent unit (GRU). However, the unbounded nature of the rectified linear unit on its candidate recurrent gate induces a severe exploding-gradient phenomenon that disrupts training and prevents the model from being applied to popular datasets. In this paper, we derive, theoretically and empirically, the necessary conditions for its stability, and we introduce engineering mechanisms that speed up its training by a factor of five, resulting in a new version of this architecture named SLi-GRU. We then evaluate its performance on a toy task illustrating its newly acquired capabilities and on a set of three different ASR datasets, demonstrating lower word error rates than more complex recurrent neural networks.
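To make the instability concrete, the following is a minimal NumPy sketch of a Li-GRU-style recurrence: a single update gate and a ReLU candidate state. It is an illustration under stated assumptions, not the paper's implementation. The batch normalization used in the original Li-GRU is omitted, the SLi-GRU stabilization itself is not shown, and the helper names (`li_gru_step`, `final_magnitude`) and the toy weights are hypothetical.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def relu(a):
    return np.maximum(a, 0.0)


def li_gru_step(x_t, h_prev, Wz, Uz, Wh, Uh, candidate_act):
    """One Li-GRU-style step (assumption: normalization layers omitted)."""
    z = sigmoid(Wz @ x_t + Uz @ h_prev)              # update gate
    h_cand = candidate_act(Wh @ x_t + Uh @ h_prev)   # candidate state
    return z * h_prev + (1.0 - z) * h_cand           # interpolate old and new


def final_magnitude(recurrent_scale, candidate_act, steps=10, hidden=4):
    """Largest hidden-state entry after `steps` steps of a toy recurrence.

    Hypothetical setup: zero input and zero gate weights, so z = 0.5
    everywhere, and the recurrent matrix is a scaled identity.
    """
    zeros = np.zeros((hidden, hidden))
    Uh = recurrent_scale * np.eye(hidden)
    x = np.zeros(hidden)
    h = np.ones(hidden)
    for _ in range(steps):
        h = li_gru_step(x, h, zeros, zeros, zeros, Uh, candidate_act)
    return float(np.max(np.abs(h)))


if __name__ == "__main__":
    print("ReLU candidate, scale 3.0:", final_magnitude(3.0, relu))
    print("ReLU candidate, scale 0.5:", final_magnitude(0.5, relu))
    print("tanh candidate, scale 3.0:", final_magnitude(3.0, np.tanh))
```

In this toy setting, each step with a ReLU candidate multiplies the state by 0.5 + 0.5 × `recurrent_scale`, so a scale of 3.0 doubles the state at every step, while a scale of 0.5 makes it decay. A bounded candidate such as tanh keeps the state within [-1, 1] regardless of the scale. The per-step Jacobian has the same factor here, which is why the growth in the state also shows up in back-propagated gradients.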
